
Abstract:

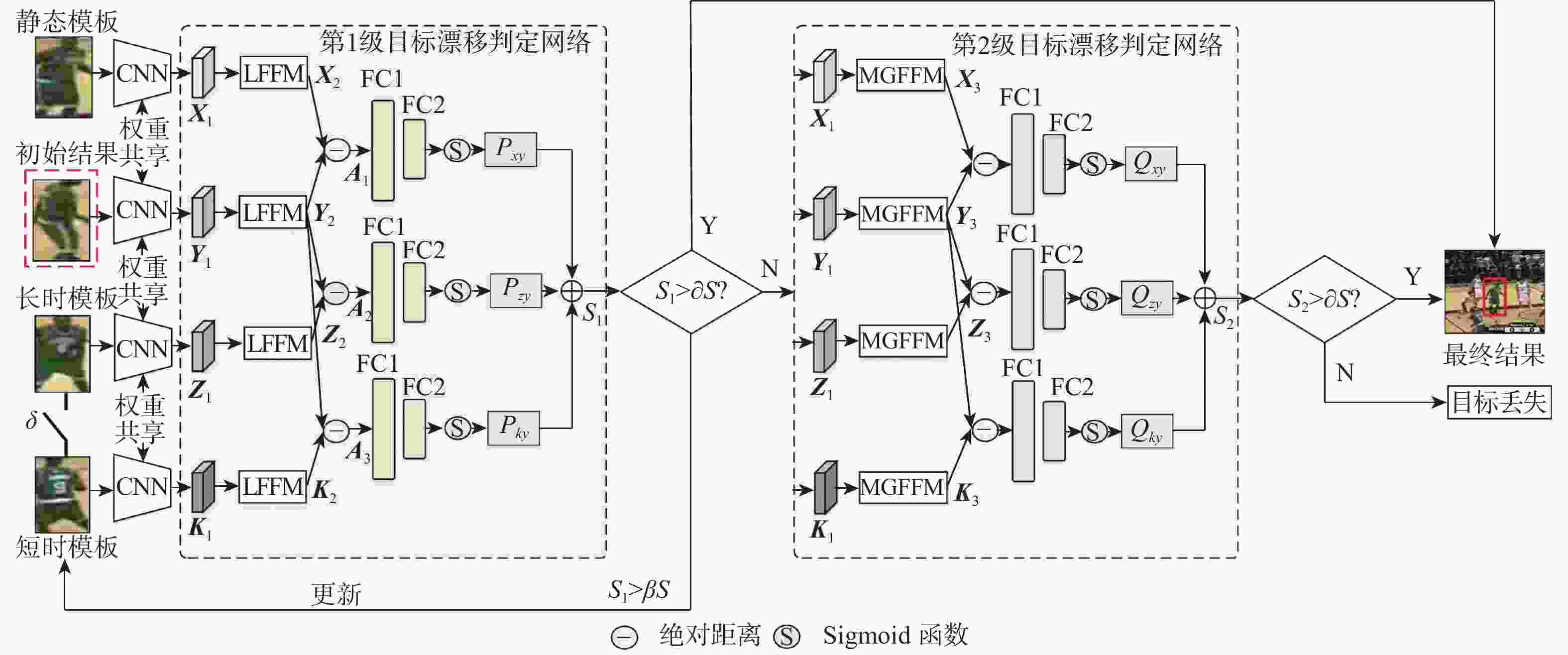

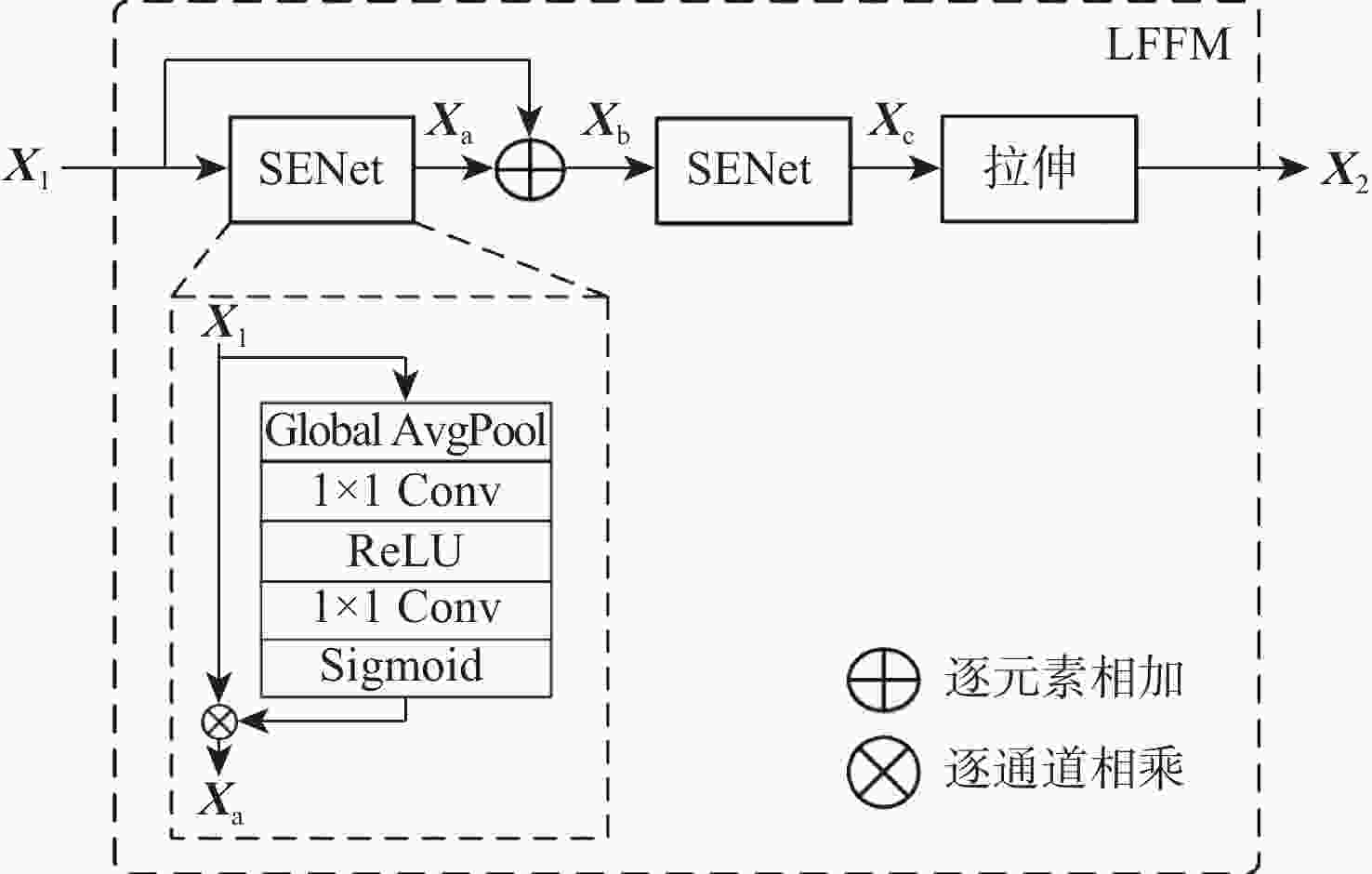

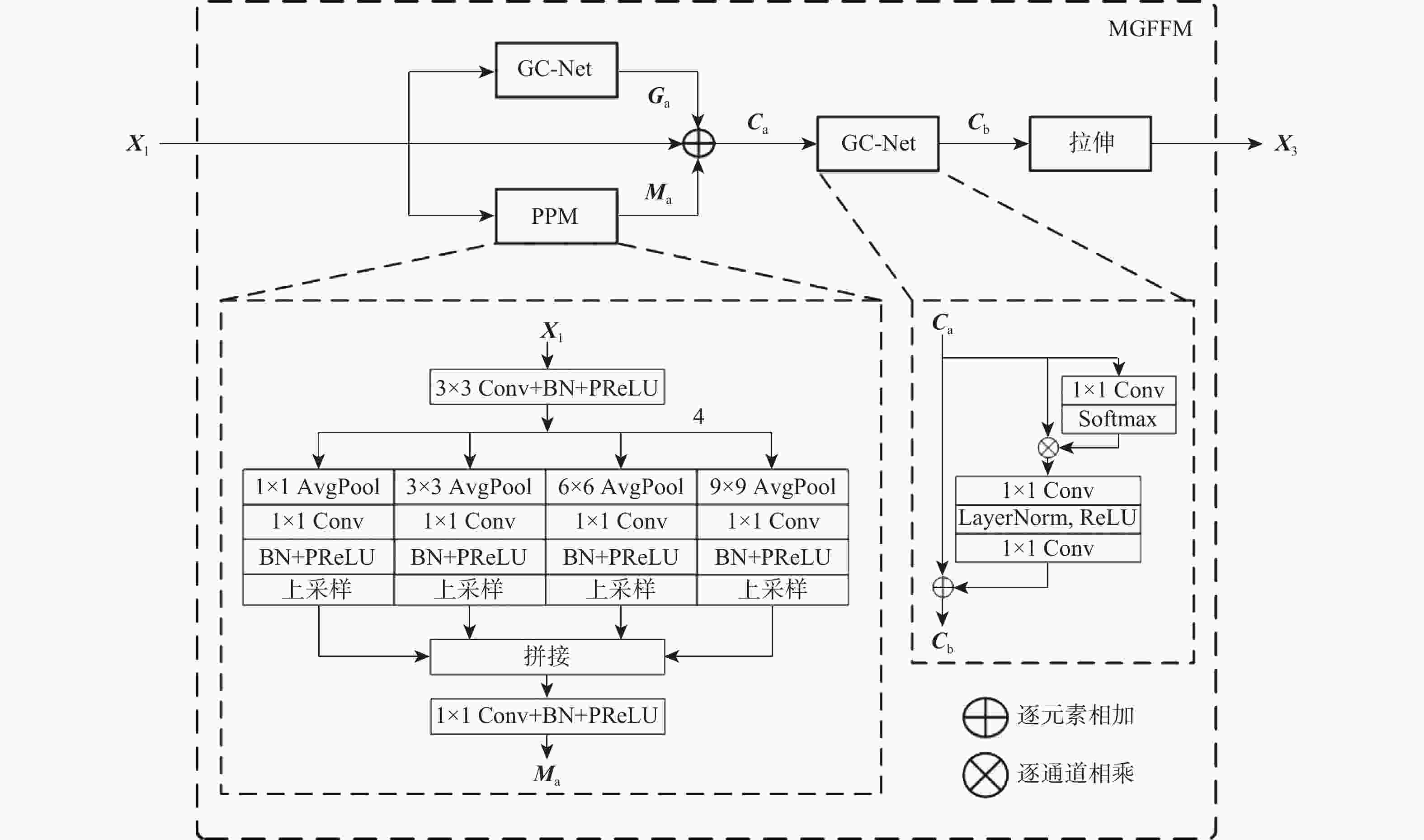

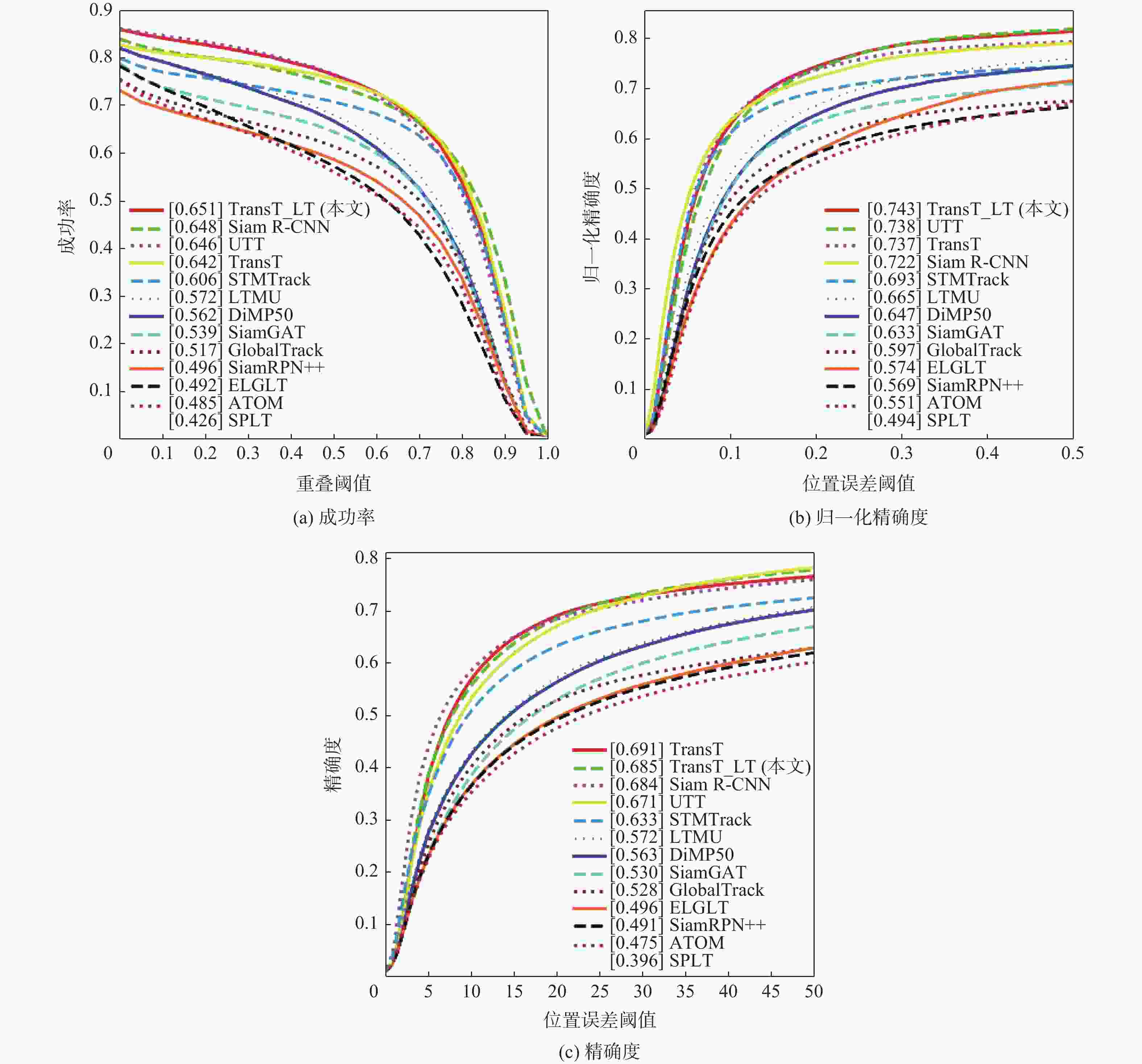

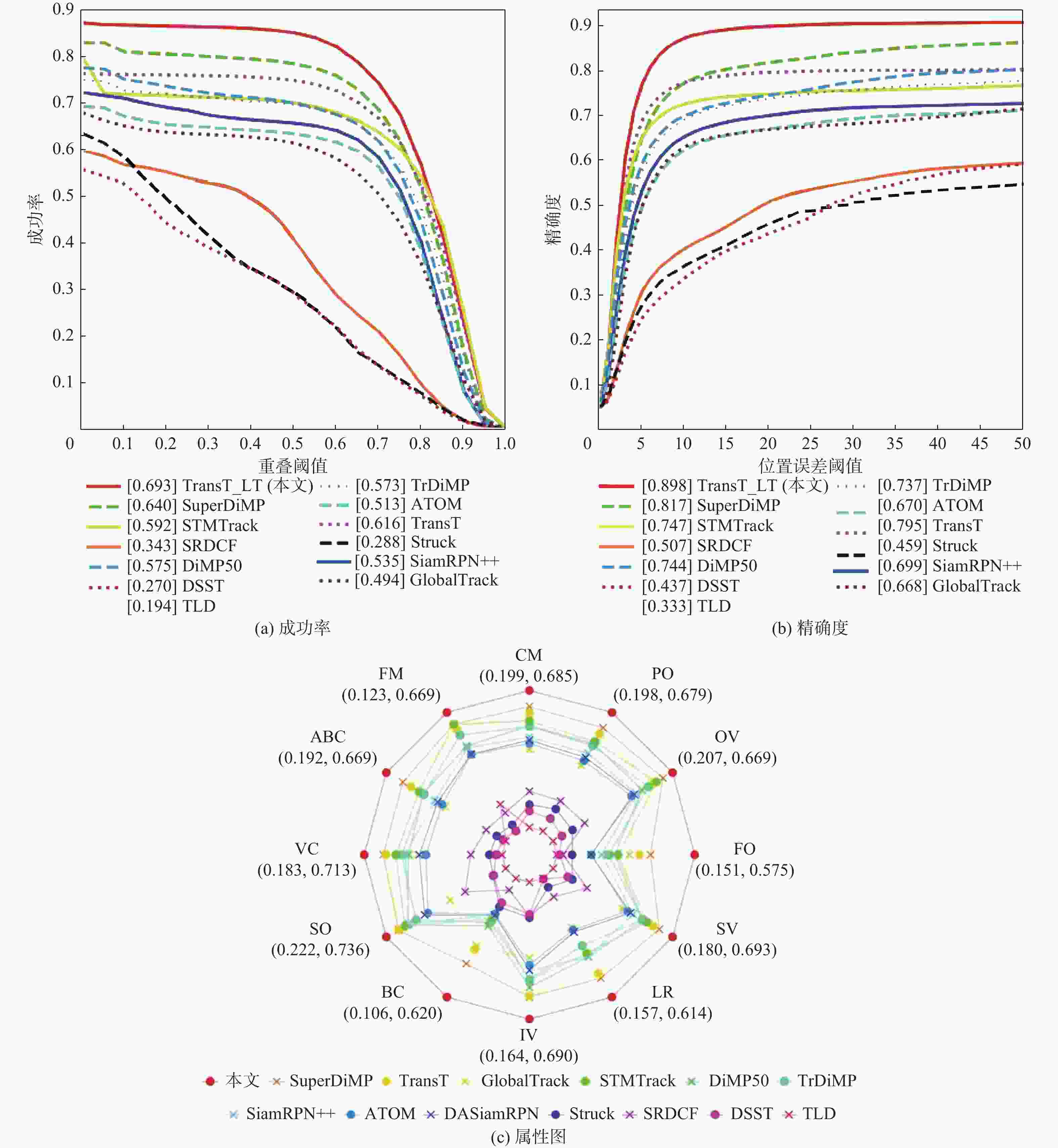

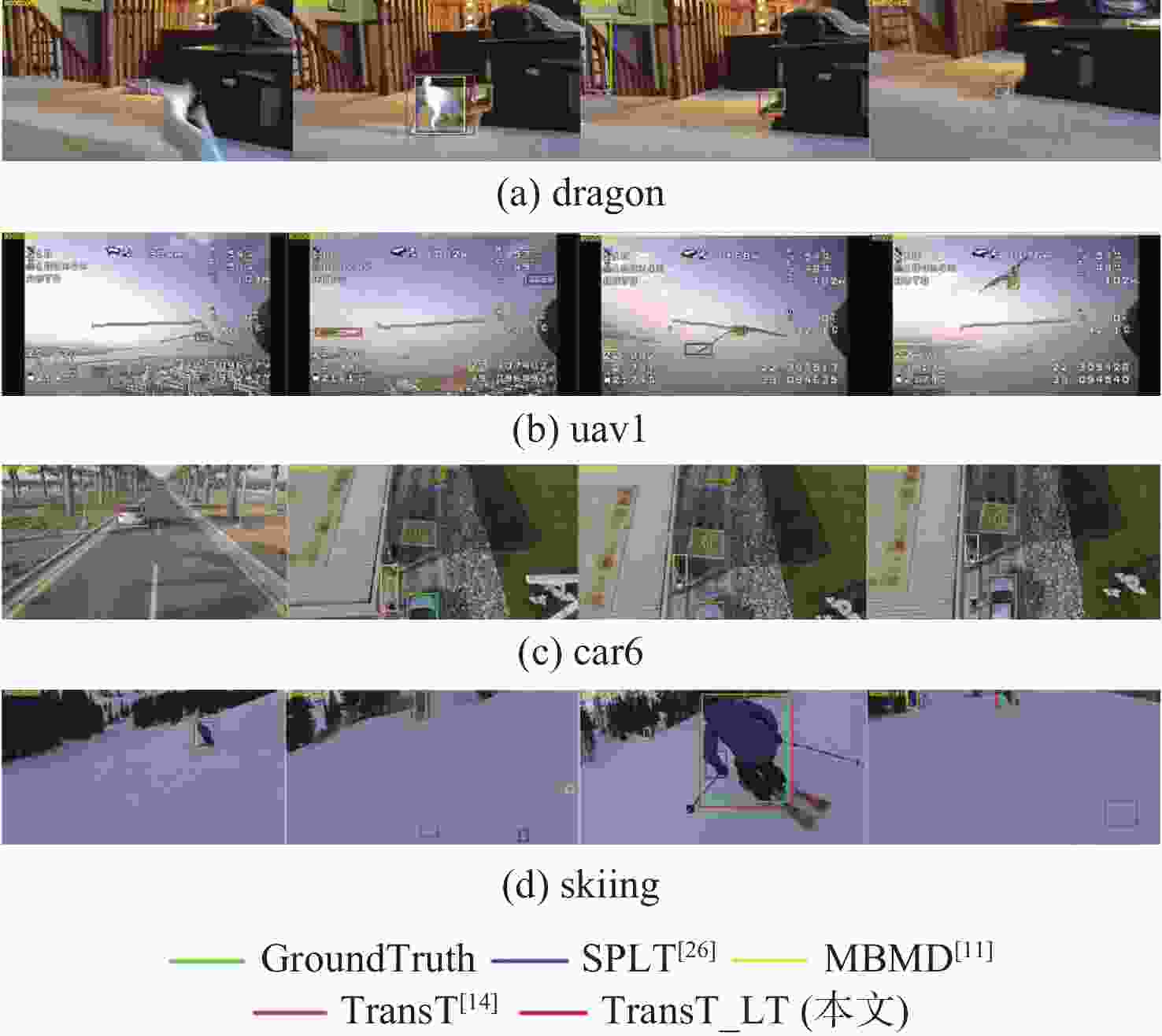

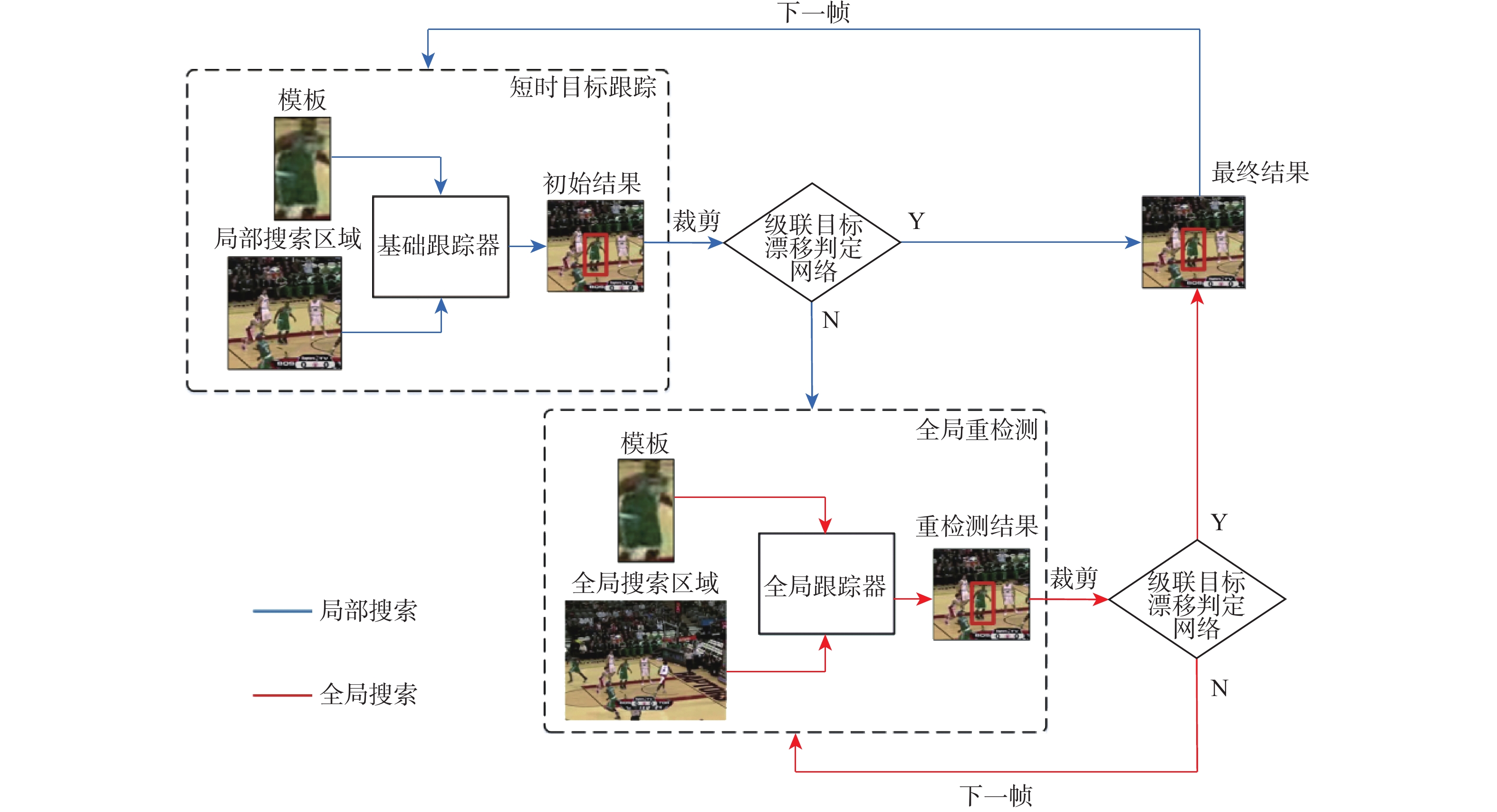

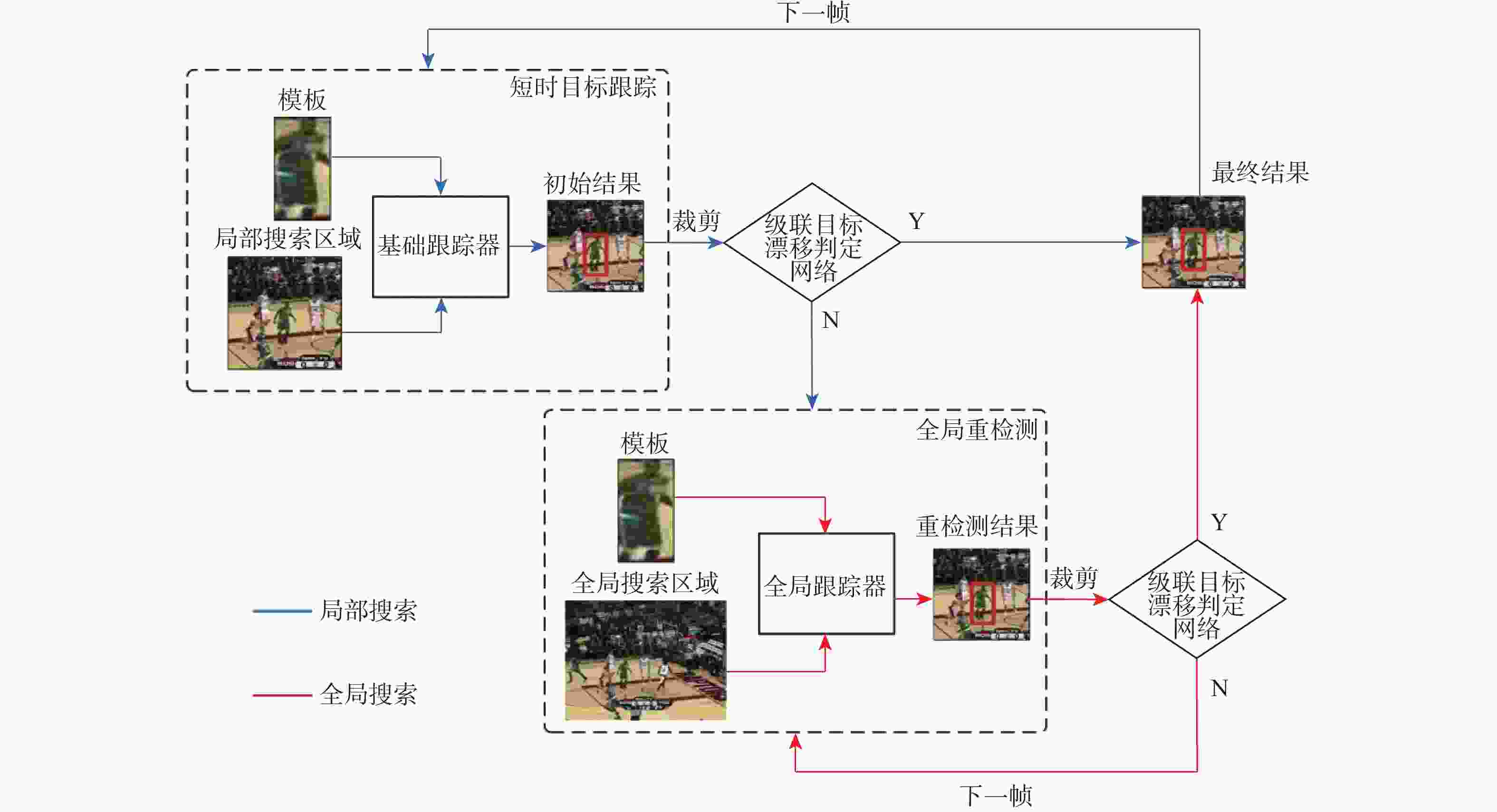

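To address the need for manually selected thresholds and the poor determination performance of existing object drift determination criteria, a cascaded object drift determination network with adaptive threshold selection is proposed. Two sub-determination networks are cascaded to determine whether a tracking result has drifted. Within the proposed network, a static template, a long-term template, and a short-term template jointly judge the tracking result, which improves determination accuracy; so that the templates adapt to changes in the object's appearance during determination, a long- and short-term template update strategy is designed to maintain template quality. The proposed network is combined with the short-term tracker TransT and the global re-detection method GlobalTrack to build the long-term visual tracking algorithm TransT_LT. The algorithm is evaluated on four long-term visual tracking datasets: UAV20L, LaSOT, VOT2018-LT, and VOT2020-LT. The experimental results show that it achieves superior long-term tracking performance; on UAV20L in particular, its tracking success rate and precision exceed those of the baseline algorithm by 7.7 and 10.3 percentage points, respectively. The proposed drift determination network runs at 100 frames/s and has little effect on the speed of the long-term visual tracking algorithm.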

Keywords:

- long-term visual tracking

- deep learning

- cascaded object drift determination network

- template update

- multi-scale feature fusion

Abstract: Existing object drift determination criteria require manually selected thresholds and perform poorly. To address these problems, this paper proposes a cascaded object drift determination network with adaptive threshold selection. First, two sub-networks are cascaded to determine whether a tracking result has drifted. Second, the network judges each tracking result jointly against a static template, a long-term template, and a short-term template, which improves determination accuracy, and a long-term and short-term template update strategy maintains template quality as the object's appearance changes during determination. Finally, the proposed network is combined with the short-term tracker TransT and the global re-detection method GlobalTrack to build a long-term tracking algorithm, TransT_LT. Tests on four long-term tracking datasets (UAV20L, LaSOT, VOT2018-LT, and VOT2020-LT) show that the algorithm achieves strong long-term tracking performance; on UAV20L in particular, it improves on the baseline algorithm by 7.7 and 10.3 percentage points in tracking success rate and precision, respectively. The proposed network runs at 100 frames per second, so it has little effect on the speed of the long-term tracking algorithm.
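The pipeline described in the abstract can be pictured as a control loop. The following is a minimal sketch under stated assumptions, not the authors' implementation: `local_track`, `redetect`, `stage1`, and `stage2` are hypothetical callables standing in for TransT, GlobalTrack, and the two cascaded sub-networks. The rule that the second stage runs only when the first reports no drift, the refresh of the short-term template on every accepted frame, and the refresh of the long-term template every `delta` frames are likewise assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)
Sample = Tuple[Any, Box]                 # a frame together with a box in it


@dataclass
class Templates:
    static: Sample      # first-frame target, never updated
    long_term: Sample   # refreshed at most once every `delta` frames (assumed)
    short_term: Sample  # refreshed after every accepted frame (assumed)


# Hypothetical judge: returns True when the candidate is considered drifted.
Judge = Callable[[Sample, Templates], bool]


def is_drift(candidate: Sample, templates: Templates,
             stage1: Judge, stage2: Judge) -> bool:
    """Assumed cascade: `or` short-circuits, so stage 2 runs only
    when stage 1 does not already report drift."""
    return stage1(candidate, templates) or stage2(candidate, templates)


def track(frames: Iterable[Any], init_box: Box,
          local_track: Callable[[Any, Box], Box],
          redetect: Callable[[Any, Templates], Box],
          stage1: Judge, stage2: Judge, delta: int = 100) -> List[Box]:
    frames = iter(frames)
    first = next(frames)
    seed = (first, init_box)
    templates = Templates(static=seed, long_term=seed, short_term=seed)
    box, results, last_long_update = init_box, [init_box], 0

    for t, frame in enumerate(frames, start=1):
        # Short-term tracking around the last accepted box.
        candidate = (frame, local_track(frame, box))
        accepted = not is_drift(candidate, templates, stage1, stage2)
        if not accepted:
            # Drift detected: fall back to global re-detection and re-check.
            candidate = (frame, redetect(frame, templates))
            accepted = not is_drift(candidate, templates, stage1, stage2)
        if accepted:
            box = candidate[1]
            templates.short_term = candidate
            if t - last_long_update >= delta:
                templates.long_term = candidate
                last_long_update = t
        # The reported box is the latest candidate, even if it was rejected;
        # the tracker state (`box`, templates) changes only on acceptance.
        results.append(candidate[1])
    return results
```

Passing the components as callables keeps the sketch independent of any particular tracker or detector library; a real system would replace them with the trained networks.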

Table 1. Impact of different $(\partial, \beta)$ parameter values on long-term visual tracking performance

| $(\partial, \beta)$ | UAV20L success rate | UAV20L precision | VOT2020-LT F-score | VOT2020-LT precision | VOT2020-LT recall |
| --- | --- | --- | --- | --- | --- |
| (0.5, 0.6) | 0.635 | 0.826 | 0.648 | 0.693 | 0.608 |
| (0.5, 0.7) | 0.649 | 0.839 | 0.660 | 0.703 | 0.623 |
| (0.5, 0.8) | 0.654 | 0.862 | 0.661 | 0.711 | 0.618 |
| (0.5, 0.9) | 0.666 | 0.866 | 0.664 | 0.653 | 0.675 |
| (0.6, 0.7) | 0.671 | 0.870 | 0.664 | 0.709 | 0.625 |
| (0.6, 0.8) | 0.672 | 0.872 | 0.665 | 0.653 | **0.677** |
| (0.6, 0.9) | **0.693** | **0.898** | **0.671** | **0.715** | 0.632 |
| (0.7, 0.8) | 0.673 | 0.870 | 0.665 | 0.713 | 0.624 |
| (0.7, 0.9) | 0.672 | 0.875 | 0.665 | 0.711 | 0.622 |

Note: Bold font indicates the optimal value.

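Table 2. Impact of the long-term template update interval $\delta$ on long-term visual tracking performance

| $\delta$ (frames) | UAV20L success rate | UAV20L precision | VOT2020-LT F-score | VOT2020-LT precision | VOT2020-LT recall |
| --- | --- | --- | --- | --- | --- |
| 50 | 0.666 | 0.859 | 0.665 | 0.713 | 0.623 |
| 100 | **0.693** | **0.898** | **0.671** | **0.715** | **0.632** |
| 150 | 0.689 | 0.893 | 0.669 | 0.711 | 0.631 |
| 200 | 0.686 | 0.888 | 0.664 | 0.713 | 0.622 |
| 250 | 0.685 | 0.886 | 0.661 | 0.712 | 0.618 |
| 300 | 0.672 | 0.866 | 0.660 | **0.715** | 0.613 |

Note: Bold font indicates the optimal value.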

Table 3. Optimal thresholds for different determination criteria on two datasets

Table 4. Performance of the optimal thresholds of different determination criteria on two datasets

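Table 5. Ablation experiment results

| TransT | TTD | LTUS | CDDN | UAV20L success rate | UAV20L precision | VOT2020-LT F-score | VOT2020-LT precision | VOT2020-LT recall |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| √ | | | | 0.616 | 0.795 | 0.638 | 0.682 | 0.599 |
| √ | √ | | | 0.658 | 0.857 | 0.657 | 0.669 | 0.645 |
| √ | √ | √ | | 0.688 | 0.892 | 0.668 | 0.660 | 0.676 |
| √ | √ | √ | √ | 0.693 | 0.898 | 0.671 | 0.715 | 0.632 |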

Table 6. Tracking results of different visual tracking algorithms on the VOT2018-LT dataset

| Algorithm | F-score | Precision | Recall |
| --- | --- | --- | --- |
| TANet[4] | 0.586 | 0.649 | 0.535 |
| LTMU[5] | 0.690 | 0.710 | 0.672 |
| ELGLT[6] | 0.638 | 0.669 | 0.610 |
| LGST[7] | 0.630 | 0.637 | 0.622 |
| MBMD[11] | 0.610 | 0.634 | 0.588 |
| TransT[14] | 0.670 | 0.714 | 0.631 |
| GlobalTrack[15] | 0.555 | 0.503 | 0.528 |
| SPLT[26] | 0.616 | 0.633 | 0.600 |
| Siam R-CNN[27] | 0.668 | 0.667 | 0.675 |
| LTST[39] | 0.636 | 0.653 | 0.620 |
| MTTNet[40] | 0.621 | 0.634 | 0.609 |
| TransT_LT (ours) | 0.693 | 0.736 | 0.654 |

Table 7. Tracking results of different visual tracking algorithms on the VOT2020-LT dataset

| Algorithm | F-score | Precision | Recall |
| --- | --- | --- | --- |
| TANet[4] | 0.515 | 0.568 | 0.513 |
| ELGLT[6] | 0.607 | 0.611 | 0.560 |
| LGST[7] | 0.578 | 0.607 | 0.552 |
| MBMD[11] | 0.575 | 0.623 | 0.534 |
| TransT[14] | 0.638 | 0.682 | 0.599 |
| GlobalTrack[15] | 0.555 | 0.503 | 0.528 |
| SPLT[26] | 0.565 | 0.587 | 0.544 |
| Siam R-CNN[27] | 0.670 | 0.658 | 0.676 |
| LTST[39] | 0.587 | 0.631 | 0.548 |
| RLT-DiMP[41] | 0.670 | 0.657 | 0.684 |
| TransT_LT (ours) | 0.671 | 0.715 | 0.632 |

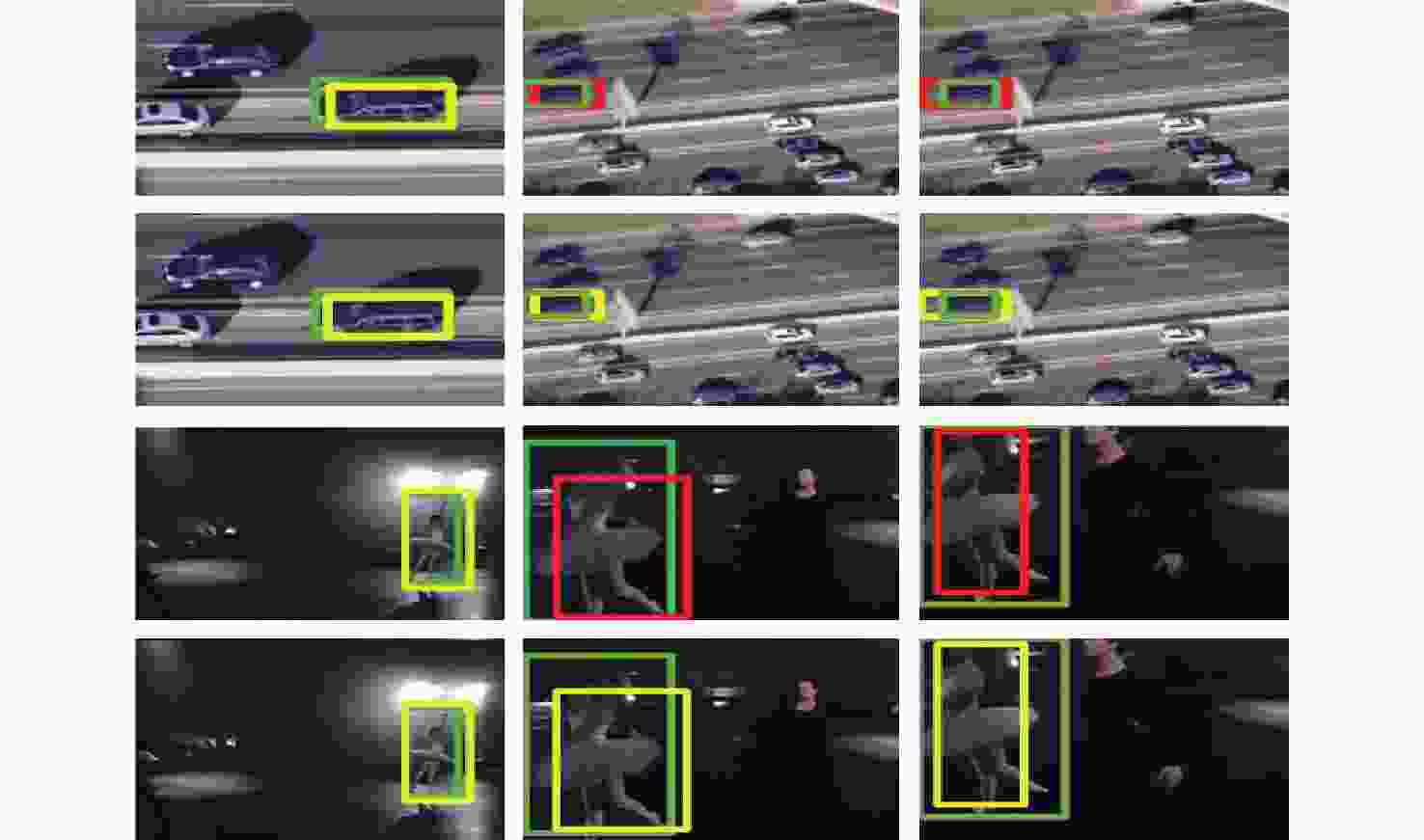

Table 8. Results for the second-stage object drift determination network on the scale variation attribute of the UAV20L dataset

| Cascade | Success rate | Precision |
| --- | --- | --- |
| TransT_LT (without cascade) | 0.688 | 0.886 |
| TransT_LT (with cascade) | 0.693 | 0.892 |
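The F-scores reported for TransT_LT in Tables 6 and 7 are consistent with the usual definition of the F-score as the harmonic mean of precision and recall, and the UAV20L gains quoted in the abstract match the absolute differences between the first and last rows of Table 5. A minimal Python check, assuming that definition (the helper `f_score` is illustrative and not taken from the paper; only the TransT_LT rows are checked here):

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 0.0 if total == 0 else 2 * precision * recall / total


# TransT_LT rows from Tables 6 and 7: (precision, recall, reported F-score).
rows = {
    "VOT2018-LT": (0.736, 0.654, 0.693),
    "VOT2020-LT": (0.715, 0.632, 0.671),
}
for name, (p, r, reported) in rows.items():
    print(name, "computed:", round(f_score(p, r), 3), "reported:", reported)

# Gains over the TransT baseline on UAV20L (Table 5), in percentage points.
gain_success = round((0.693 - 0.616) * 100, 1)
gain_precision = round((0.898 - 0.795) * 100, 1)
print("success gain:", gain_success, "precision gain:", gain_precision)
```

The gains come out as absolute differences on the 0 to 1 scale multiplied by 100, which suggests the abstract's figures are percentage points rather than relative improvements.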

[1] LI X, ZHA Y F, ZHANG T Z, et al. Survey of visual object tracking algorithms based on deep learning[J]. Journal of Image and Graphics, 2019, 24(12): 2057-2080 (in Chinese). doi: 10.11834/jig.190372

[2] LIU F, SUN Y N, WANG H J, et al. Adaptive UAV target tracking algorithm based on residual learning[J]. Journal of Beijing University of Aeronautics and Astronautics, 2020, 46(10): 1874-1882 (in Chinese).

[3] PU L, FENG X X, HOU Z Q, et al. Siamese network visual tracking algorithm based on cascaded attention mechanism[J]. Journal of Beijing University of Aeronautics and Astronautics, 2020, 46(12): 2302-2310 (in Chinese).

[4] WANG X, TANG J, LUO B, et al. Tracking by joint local and global search: a target-aware attention-based approach[J]. IEEE Transactions on Neural Networks and Learning Systems, 2022, 33(11): 6931-6945. doi: 10.1109/TNNLS.2021.3083933

[5] DAI K N, ZHANG Y H, WANG D, et al. High-performance long-term tracking with meta-updater[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 6297-6306.

[6] ZHAO H J, YAN B, WANG D, et al. Effective local and global search for fast long-term tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, 45(1): 460-474. doi: 10.1109/TPAMI.2022.3153645

[7] GAO Z, ZHUANG Y, GU J J, et al. A joint local-global search mechanism for long-term tracking with dynamic memory network[J]. Expert Systems with Applications, 2023, 223: 119890. doi: 10.1016/j.eswa.2023.119890

[8] BOLME D S, BEVERIDGE J R, DRAPER B A, et al. Visual object tracking using adaptive correlation filters[C]//Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2010: 2544-2550.

[9] WANG M M, LIU Y, HUANG Z Y. Large margin object tracking with circulant feature maps[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 4800-4808.

[10] JUNG I, SON J, BAEK M, et al. Real-time MDNet[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018: 83-98.

[11] ZHANG Y, WANG D, WANG L, et al. Learning regression and verification networks for long-term visual tracking[EB/OL]. (2018-11-19)[2023-08-01]. http://arxiv.org/abs/1809.04320v1.

[12] NAM H, HAN B. Learning multi-domain convolutional neural networks for visual tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 4293-4302.

[13] HOU Z Q, WANG Z, PU L, et al. Target drift discriminative network based on deep learning in visual tracking[J]. Journal of Electronic Imaging, 2022, 31: 043052.

[14] CHEN X, YAN B, ZHU J, et al. Transformer tracking[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 8126-8135.

[15] HUANG L H, ZHAO X, HUANG K Q. GlobalTrack: a simple and strong baseline for long-term tracking[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(7): 11037-11044. doi: 10.1609/aaai.v34i07.6758

[16] MUELLER M, SMITH N, GHANEM B. A benchmark and simulator for UAV tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2016: 445-461.

[17] FAN H, LIN L T, YANG F, et al. LaSOT: a high-quality benchmark for large-scale single object tracking[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 5369-5378.

[18] LUKEŽIČ A, ZAJC L Č, VOJÍŘ T, et al. Now you see me: evaluating performance in long-term visual tracking[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2018: 4991391.

[19] KRISTAN M, LEONARDIS A, MATAS J, et al. The eighth visual object tracking VOT2020 challenge results[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2020: 547-601.

[20] HU J, SHEN L, SUN G. Squeeze-and-excitation networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7132-7141.

[21] ZHAO H S, SHI J P, QI X J, et al. Pyramid scene parsing network[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 6230-6239.

[22] NI J J, WU J H, TONG J, et al. GC-Net: global context network for medical image segmentation[J]. Computer Methods and Programs in Biomedicine, 2020, 190: 105121. doi: 10.1016/j.cmpb.2019.105121

[23] SIMONYAN K, ZISSERMAN A. Very deep convolutional networks for large-scale image recognition[EB/OL]. (2015-04-10)[2023-08-01]. http://arxiv.org/abs/1409.1556.

[24] DONG Q J, HE X D, GE H Y, et al. Improving model drift for robust object tracking[J]. Multimedia Tools and Applications, 2020, 79(35): 25801-25815.

[25] WU Y, LIM J, YANG M H. Object tracking benchmark[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1834-1848. doi: 10.1109/TPAMI.2014.2388226

[26] YAN B, ZHAO H J, WANG D, et al. ‘skimming-perusal’ tracking: a framework for real-time and robust long-term tracking[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 2385-2393.

[27] VOIGTLAENDER P, LUITEN J, TORR P H S, et al. Siam R-CNN: visual tracking by re-detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 6577-6587.

[28] MA F, SHOU M Z, ZHU L C, et al. Unified Transformer tracker for object tracking[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 8771-8780.

[29] FU Z H, LIU Q J, FU Z H, et al. STMTrack: template-free visual tracking with space-time memory networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 13769-13778.

[30] BHAT G, DANELLJAN M, VAN GOOL L, et al. Learning discriminative model prediction for tracking[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 6181-6190.

[31] GUO D Y, SHAO Y Y, CUI Y, et al. Graph attention tracking[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 9538-9547.

[32] LI B, WU W, WANG Q, et al. Evolution of Siamese visual tracking with very deep networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 16-20.

[33] DANELLJAN M, BHAT G, KHAN F S, et al. ATOM: accurate tracking by overlap maximization[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 4655-4664.

[34] WANG N, ZHOU W G, WANG J, et al. Transformer meets tracker: exploiting temporal context for robust visual tracking[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 1571-1580.

[35] DANELLJAN M, HÄGER G, KHAN F S, et al. Learning spatially regularized correlation filters for visual tracking[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2015: 4310-4318.

[36] HARE S, GOLODETZ S, SAFFARI A, et al. Struck: structured output tracking with kernels[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 38(10): 2096-2109. doi: 10.1109/TPAMI.2015.2509974

[37] DANELLJAN M, HÄGER G, KHAN F S, et al. Discriminative scale space tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(8): 1561-1575. doi: 10.1109/TPAMI.2016.2609928

[38] KALAL Z, MIKOLAJCZYK K, MATAS J. Tracking-learning-detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(7): 1409-1422. doi: 10.1109/TPAMI.2011.239

[39] YU L, QIAO B J, ZHANG H L, et al. LTST: long-term segmentation tracker with memory attention network[J]. Image and Vision Computing, 2022, 119: 104374. doi: 10.1016/j.imavis.2022.104374

[40] SANG H, LI G, ZHAO Z. Multi-scale global retrieval and temporal-spatial consistency matching based long-term tracking network[J]. Chinese Journal of Electronics, 2022, 32: 1-11.

[41] CHOI S, LEE J, LEE Y, et al. Robust long-term object tracking via improved discriminative model prediction[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2020: 602-617.
