-

摘要:

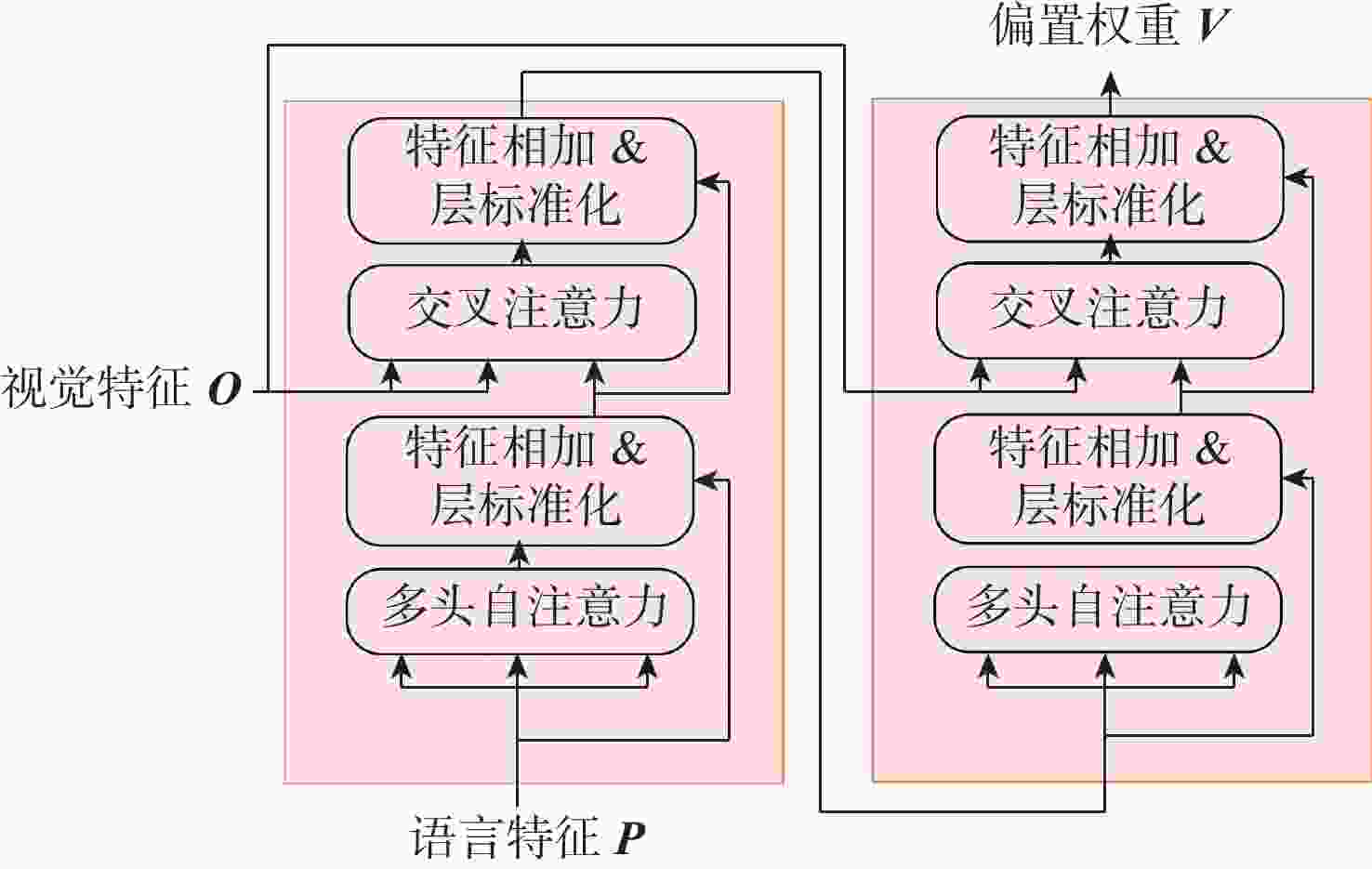

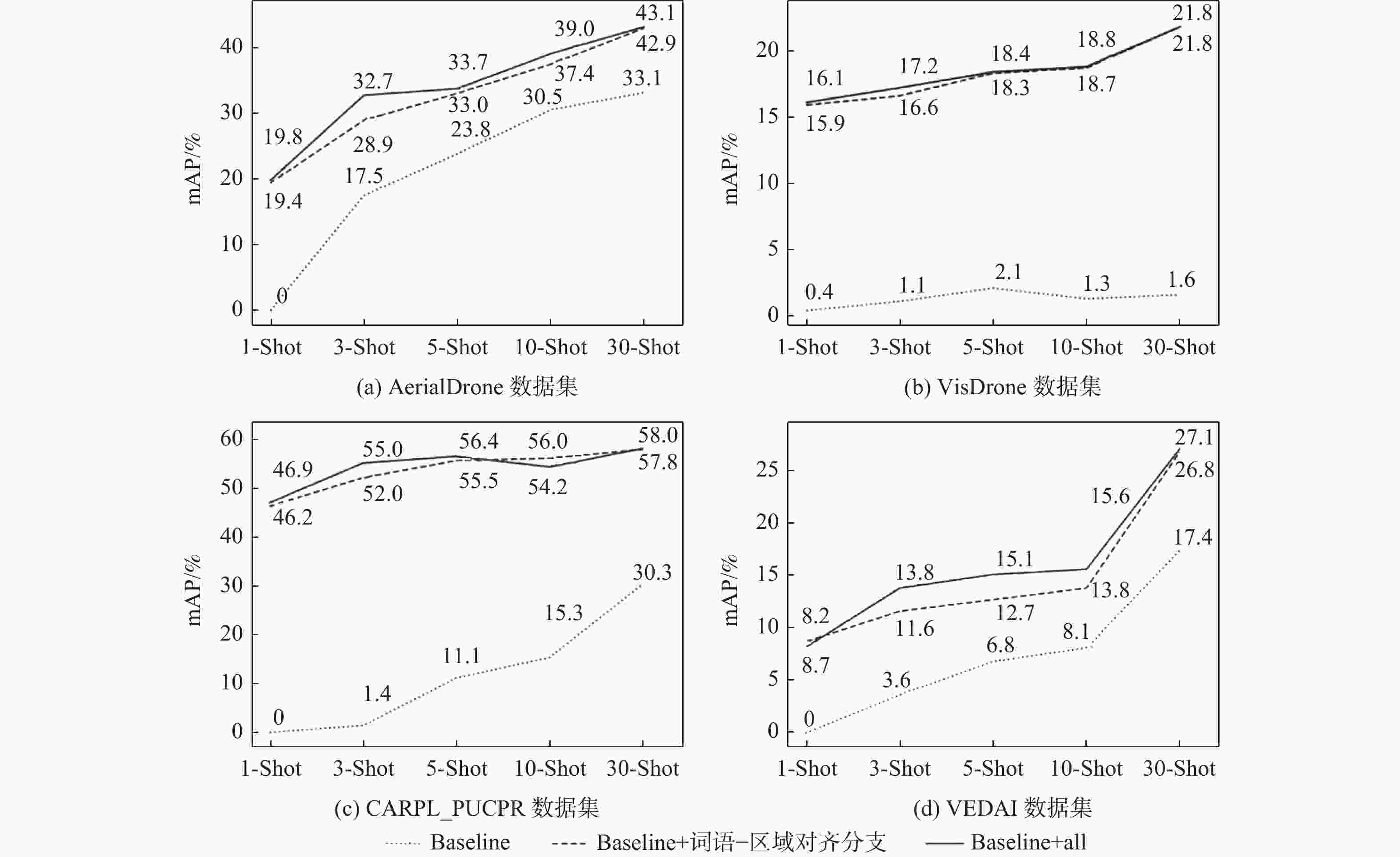

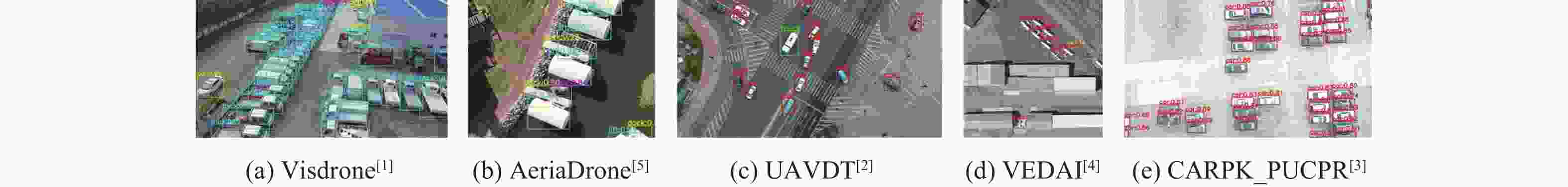

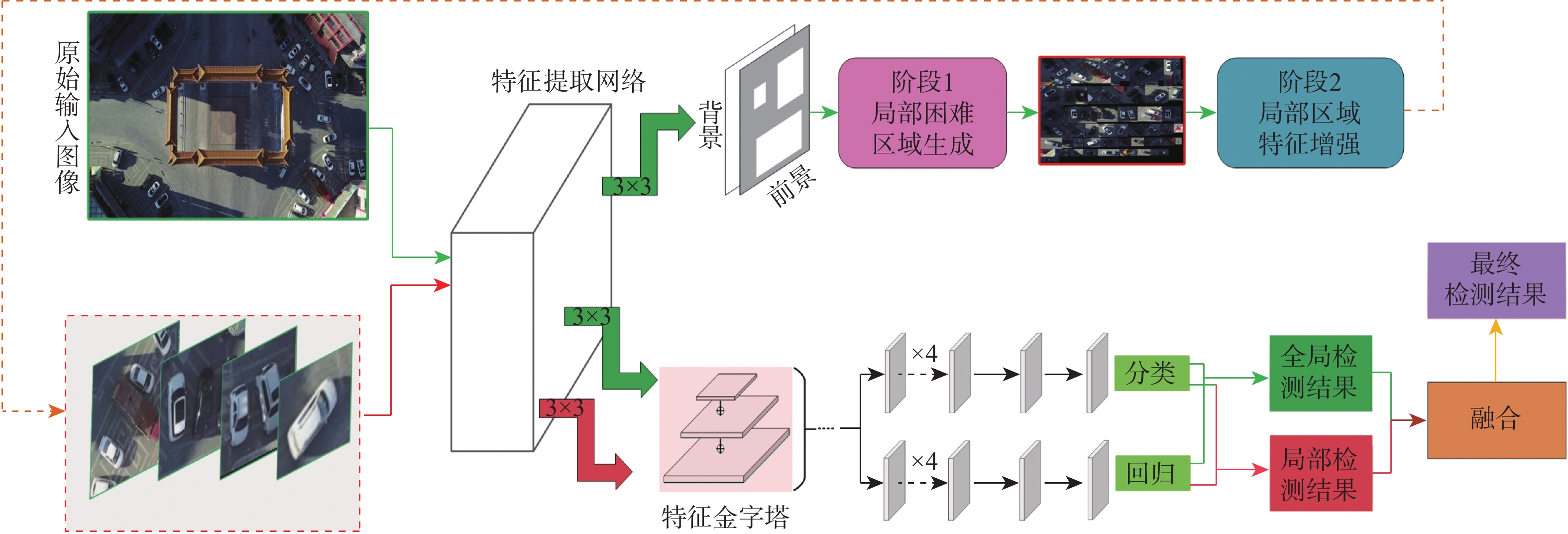

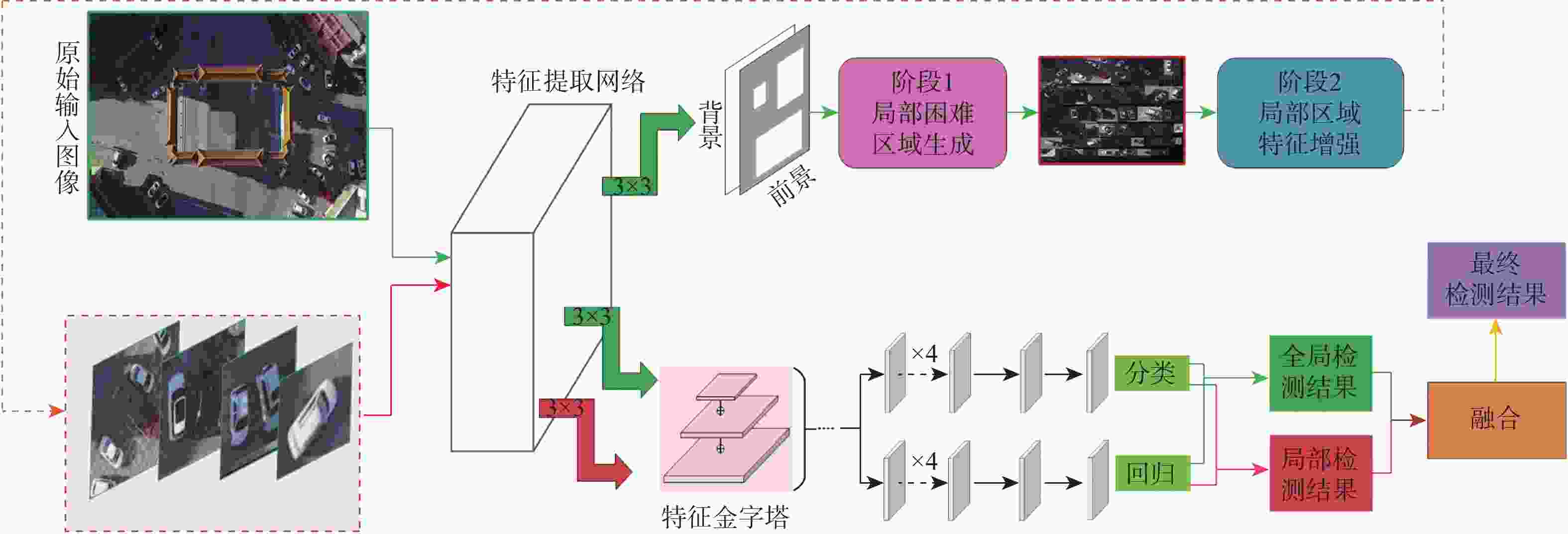

针对现有航拍图像目标检测方法在航拍数据集变化时,即拍摄视角、图像质量、照明条件、背景环境等发生大幅变化,以及目标外观变化明显、目标类别新增时,不经过对新数据集全样本训练,而采用原有数据集直接推理,检测精度大幅下降的问题,提出语言引导视觉的小样本航拍图像目标检测方法。采用词语-区域对齐分支取代传统目标检测网络中的分类分支,得到同时具有语言和视觉信息的词语-区域对齐分类分数作为预测分类结果,进而将目标检测和词语定位统一为一个任务,并利用语言引导提升视觉目标检测精度。针对输入文本语言变化引起小样本目标检测精度波动的情况,设计语言视觉偏置网络,挖掘语言特征和视觉特征的关联关系,提升语言视觉的匹配度,缩小精度波动,并进一步提升小样本目标检测精度。在UAVDT、Visdrone、AeriaDrone、VEDAI、CARPK_PUCPR数据集上的大量实验结果证明了所提方法的优越性能,在UAVDT航拍数据集上所提方法在30样本时平均精度均值(mAP)可达14.6%,相比航拍图像检测方法簇检测器(ClusDet)、密度图引导的目标检测网络(DMNet)、全局-局部自适应网络(GLSAN)和粗粒度密度图网络(CDMNet)在全样本训练的精度,分别提高了0.9%、−0.1%、−2.4%和−2.2%;在CARPK_PUCPR数据集上所提方法在30样本时mAP可达58.0%,相比通用目标检测方法全卷积单阶段目标检测器(FCOS)、自适应训练样本选择(ATSS)、广义焦点损失V2(GFLV2)和交并比感知密集目标检测器(VFNET)在全样本训练的精度,分别提高了1.0%、0.8%、0.1%和0.3%,体现了所提方法强大的小样本泛化和迁移能力。

Abstract: Existing object detection methods for aerial images lose much of their accuracy when the aerial dataset changes (for example, in shooting angle, image quality, lighting conditions, or background environment), when object appearance changes markedly, or when new object categories are added, and the model trained on the original dataset is applied directly for inference instead of being retrained on the full new dataset. To address this problem, this paper proposes a few-shot object detection method for aerial images based on language-guided vision. First, a word-region alignment branch replaces the classification branch of a conventional object detection network, and the resulting word-region alignment classification score, which carries both language and visual information, is used as the predicted classification result. Object detection and phrase grounding are thereby unified into a single task, and language information is used to improve visual detection accuracy. Second, because changes in the input text prompt cause the few-shot detection accuracy to fluctuate, a language visual bias network is designed to mine the association between language features and visual features. This network improves the matching between language and vision, reduces the accuracy fluctuation, and further improves few-shot detection accuracy. Extensive experiments on the UAVDT, VisDrone, AerialDrone, VEDAI, and CARPK_PUCPR datasets demonstrate the superior performance of the proposed method. On the UAVDT dataset, the method reaches a mean average precision (mAP) of 14.6% at 30-shot; compared with the full-data-trained accuracy of the aerial image detection methods clustered detection (ClusDet), density map guided object detection network (DMNet), global-local self-adaptive network (GLSAN), and coarse-grained density map network (CDMNet), this is a difference of +0.9, −0.1, −2.4, and −2.2 percentage points, respectively. On the CARPK_PUCPR dataset, the method reaches an mAP of 58.0% at 30-shot, which is 1.0, 0.8, 0.1, and 0.3 percentage points higher than the full-data-trained accuracy of the generic object detection methods fully convolutional one-stage object detector (FCOS), adaptive training sample selection (ATSS), generalized focal loss V2 (GFLV2), and VarifocalNet (VFNET), respectively. These results highlight the strong few-shot generalization and transfer capabilities of the proposed method.
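The word-region alignment branch described above resembles the grounding formulation of GLIP [5], in which a region's class score comes from the similarity between its visual feature and the text-token features of the prompt. The plain-Python sketch below illustrates that idea under stated assumptions, not the paper's implementation: features are already projected to a shared dimension, a score is a dot product passed through a sigmoid, and a category spanning several tokens gets the mean of its token scores. The visual-conditioned bias (mean-pooled region features passed through one linear map, loosely modeled on the meta-network of conditional prompt learning [27]) is a hypothetical stand-in for the language visual bias network, whose actual structure is not given here. All function names are illustrative.

```python
import math


def dot(u, v):
    """Inner product of two equal-length feature vectors."""
    return sum(a * b for a, b in zip(u, v))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def alignment_logits(region_feats, word_feats):
    """Word-region alignment: S[i][j] = <o_i, p_j> for region i and prompt token j."""
    return [[dot(o, p) for p in word_feats] for o in region_feats]


def class_scores(logit_row, token_map):
    """Turn one region's token logits into per-category scores.

    Assumption: a category phrase covering several tokens gets the mean of
    its tokens' sigmoid scores.
    """
    return {
        name: sum(sigmoid(logit_row[j]) for j in idx) / len(idx)
        for name, idx in token_map.items()
    }


def visual_bias(region_feats, weight):
    """Hypothetical visual-conditioned bias: mean-pool the region features,
    then apply one linear map `weight` (a d x d list of rows)."""
    n, d = len(region_feats), len(region_feats[0])
    pooled = [sum(o[k] for o in region_feats) / n for k in range(d)]
    return [dot(row, pooled) for row in weight]


def add_bias(word_feats, bias):
    """Shift every token feature by the same bias vector."""
    return [[p_k + b_k for p_k, b_k in zip(p, bias)] for p in word_feats]


if __name__ == "__main__":
    regions = [[1.0, 0.0], [0.0, 1.0]]            # 2 regions, d = 2
    words = [[2.0, 0.0], [0.0, 3.0], [1.0, 1.0]]  # 3 prompt tokens
    token_map = {"car": [0], "truck": [1, 2]}     # category -> token indices

    logits = alignment_logits(regions, words)
    for i, row in enumerate(logits):
        scores = class_scores(row, token_map)
        print(i, max(scores, key=scores.get), row)

    bias = visual_bias(regions, [[1.0, 0.0], [0.0, 1.0]])
    print(alignment_logits(regions, add_bias(words, bias)))
```

Because the bias is computed from each image's own regions, the same text prompt yields slightly different token features per image; this is one plausible way to reduce sensitivity to prompt wording, offered as an illustration rather than a description of the paper's network.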


Key words:

- aerial images

- few-shot

- word-region aligned branch

- language visual bias network

- object detection


Table 1. Analysis of object categories in drone datasets

Table 2. Ablation experiments (mAP/%)

| Dataset | Method | 1-shot | 3-shot | 5-shot | 10-shot | 30-shot |
|---|---|---|---|---|---|---|
| AerialDrone[5] | Baseline | 0 | 17.5 | 23.8 | 30.5 | 33.1 |
| | Baseline + word-region alignment branch | 19.4 | 28.9 | 33.0 | 37.4 | 42.9 |
| | Baseline + all | 19.8 | 32.7 | 33.7 | 39.0 | 43.1 |
| VisDrone-DET[1] | Baseline | 0.4 | 1.1 | 2.1 | 1.3 | 1.6 |
| | Baseline + word-region alignment branch | 15.9 | 16.6 | 18.3 | 18.7 | 21.8 |
| | Baseline + all | 16.1 | 17.2 | 18.4 | 18.8 | 21.8 |
| CARPK_PUCPR[3] | Baseline | 0 | 1.4 | 11.1 | 15.3 | 30.3 |
| | Baseline + word-region alignment branch | 46.2 | 52.0 | 55.5 | 56.0 | 57.8 |
| | Baseline + all | 46.9 | 55.0 | 56.4 | 54.2 | 58.0 |
| VEDAI[4] | Baseline | 0 | 3.6 | 6.8 | 8.1 | 17.4 |
| | Baseline + word-region alignment branch | 8.7 | 11.6 | 12.7 | 13.8 | 26.8 |
| | Baseline + all | 8.2 | 13.8 | 15.1 | 15.6 | 27.1 |
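The ablation in Table 2 is easier to read as differences between rows. The short script below is a reading aid rather than part of the method: it copies the mAP values from Table 2 and computes per-shot gains in percentage points. The row keys `baseline`, `wra` (baseline + word-region alignment branch), and `all` (baseline + all components) are shorthand introduced here.

```python
# mAP (%) copied from Table 2; each list is ordered 1-, 3-, 5-, 10-, 30-shot.
SHOTS = [1, 3, 5, 10, 30]
TABLE2 = {
    "AerialDrone": {
        "baseline": [0.0, 17.5, 23.8, 30.5, 33.1],
        "wra": [19.4, 28.9, 33.0, 37.4, 42.9],  # + word-region alignment branch
        "all": [19.8, 32.7, 33.7, 39.0, 43.1],  # + all components
    },
    "VisDrone-DET": {
        "baseline": [0.4, 1.1, 2.1, 1.3, 1.6],
        "wra": [15.9, 16.6, 18.3, 18.7, 21.8],
        "all": [16.1, 17.2, 18.4, 18.8, 21.8],
    },
    "CARPK_PUCPR": {
        "baseline": [0.0, 1.4, 11.1, 15.3, 30.3],
        "wra": [46.2, 52.0, 55.5, 56.0, 57.8],
        "all": [46.9, 55.0, 56.4, 54.2, 58.0],
    },
    "VEDAI": {
        "baseline": [0.0, 3.6, 6.8, 8.1, 17.4],
        "wra": [8.7, 11.6, 12.7, 13.8, 26.8],
        "all": [8.2, 13.8, 15.1, 15.6, 27.1],
    },
}


def gains(dataset, new, old):
    """Per-shot mAP difference (percentage points) between two rows, rounded to 0.1."""
    rows = TABLE2[dataset]
    return [round(a - b, 1) for a, b in zip(rows[new], rows[old])]


def regressions(new, old):
    """(dataset, shot) pairs where row `new` scores below row `old`."""
    return [
        (name, shot)
        for name in TABLE2
        for shot, g in zip(SHOTS, gains(name, new, old))
        if g < 0
    ]


if __name__ == "__main__":
    for name in TABLE2:
        print(name, "branch:", gains(name, "wra", "baseline"),
              "rest:", gains(name, "all", "wra"))
    print("all < branch at:", regressions("all", "wra"))
```

On these values, the word-region alignment branch accounts for most of the improvement over the baseline on all four datasets (for example, +27.5 points on CARPK_PUCPR at 30-shot), while adding the remaining components changes mAP by at most 3.8 points and is slightly below the branch-only variant at CARPK_PUCPR 10-shot and VEDAI 1-shot.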

Table 3. Comparison with classic aerial image object detection methods


[1] DU D W, WEN L Y, ZHU P F, et al. VisDrone-DET2020: the vision meets drone object detection in image challenge results[C]//Proceedings of the Computer Vision – ECCV 2020 Workshops. Berlin: Springer, 2020: 692-712.

[2] DU D W, QI Y K, YU H Y, et al. The unmanned aerial vehicle benchmark: object detection and tracking[M]//Computer Vision – ECCV 2018. Berlin: Springer, 2018: 375-391.

[3] HSIEH M R, LIN Y L, HSU W H. Drone-based object counting by spatially regularized regional proposal network[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 4165-4173.

[4] RAZAKARIVONY S, JURIE F. Vehicle detection in aerial imagery: a small target detection benchmark[J]. Journal of Visual Communication and Image Representation, 2016, 34: 187-203. doi: 10.1016/j.jvcir.2015.11.002

[5] LI L H, ZHANG P C, ZHANG H T, et al. Grounded language-image pre-training[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 10955-10965.

[6] YANG F, FAN H, CHU P, et al. Clustered object detection in aerial images[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 8310-8319.

[7] LI C L, YANG T, ZHU S J, et al. Density map guided object detection in aerial images[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. Piscataway: IEEE Press, 2020: 737-746.

[8] DENG S T, LI S, XIE K, et al. A global-local self-adaptive network for drone-view object detection[J]. IEEE Transactions on Image Processing, 2020, 30: 1556-1569.

[9] DUAN C Z, WEI Z W, ZHANG C, et al. Coarse-grained density map guided object detection in aerial images[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops. Piscataway: IEEE Press, 2021: 2789-2798.

[10] SONG C, ZHAO J J, WANG K, et al. A review of small sample learning for intelligent perception[J]. Acta Aeronautica et Astronautica Sinica, 2020, 41(S1): 723756 (in Chinese).

[11] DUAN L J, YUAN Y, WANG W J, et al. Zero-shot object detection based on multi-modal joint semantic perception[J]. Journal of Beijing University of Aeronautics and Astronautics, 2024, 50(2): 368-375 (in Chinese).

[12] YAN X P, CHEN Z L, XU A N, et al. Meta R-CNN: towards general solver for instance-level low-shot learning[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 9576-9585.

[13] REN S Q, HE K M, GIRSHICK R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149. doi: 10.1109/TPAMI.2016.2577031

[14] FAN Q, ZHUO W, TANG C K, et al. Few-shot object detection with attention-RPN and multi-relation detector[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 4012-4021.

[15] SUN B, LI B H, CAI S C, et al. FSCE: few-shot object detection via contrastive proposal encoding[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 7348-7358.

[16] HAN G X, MA J W, HUANG S Y, et al. Few-shot object detection with fully cross-transformer[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 5311-5320.

[17] DENG J Y, LI X, FANG Y. Few-shot object detection on remote sensing images[EB/OL]. (2020-06-14)[2023-02-01]. http://arxiv.org/abs/2006.07826v2.

[18] CHENG G, YAN B W, SHI P Z, et al. Prototype-CNN for few-shot object detection in remote sensing images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2021, 60: 5604610.

[19] HUANG X, HE B K, TONG M, et al. Few-shot object detection on remote sensing images via shared attention module and balanced fine-tuning strategy[J]. Remote Sensing, 2021, 13(19): 3816. doi: 10.3390/rs13193816

[20] LI H G, WANG Y F, YANG L C. Meta-learning-based few-shot object detection for remote sensing images[J]. Journal of Beijing University of Aeronautics and Astronautics, 2024, 50(8): 2503-2513 (in Chinese).

[21] ZHOU Y, HU H, ZHAO J Q, et al. Few-shot object detection via context-aware aggregation for remote sensing images[J]. IEEE Geoscience and Remote Sensing Letters, 2022, 19: 6509605.

[22] RADFORD A, KIM J W, HALLACY C, et al. Learning transferable visual models from natural language supervision[EB/OL]. (2021-02-26)[2023-02-01]. http://arxiv.org/abs/2103.00020.

[23] ZHONG Y W, YANG J W, ZHANG P C, et al. RegionCLIP: region-based language-image pretraining[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 16772-16782.

[24] ZHAO S Y, ZHANG Z X, SCHULTER S, et al. Exploiting unlabeled data with vision and language models for object detection[M]//Computer Vision – ECCV 2022. Berlin: Springer, 2022: 159-175.

[25] DEVLIN J, CHANG M W, LEE K, et al. BERT: pre-training of deep bidirectional transformers for language understanding[EB/OL]. (2019-05-24)[2023-02-01]. http://arxiv.org/abs/1810.04805v2.

[26] DAI X Y, CHEN Y P, XIAO B, et al. Dynamic head: unifying object detection heads with attentions[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 7369-7378.

[27] ZHOU K Y, YANG J K, LOY C C, et al. Conditional prompt learning for vision-language models[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 16795-16804.

[28] TIAN Z, SHEN C H, CHEN H, et al. FCOS: a simple and strong anchor-free object detector[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(4): 1922-1933.

[29] ZHANG S F, CHI C, YAO Y Q, et al. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 9756-9765.

[30] LI X, WANG W H, HU X L, et al. Generalized focal loss V2: learning reliable localization quality estimation for dense object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 11627-11636.

[31] ZHANG H Y, WANG Y, DAYOUB F, et al. VarifocalNet: an IoU-aware dense object detector[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2021: 8510-8519.
