Long-term infrared object tracking algorithm based on dynamic region focusing for anti-UAV

摘要:

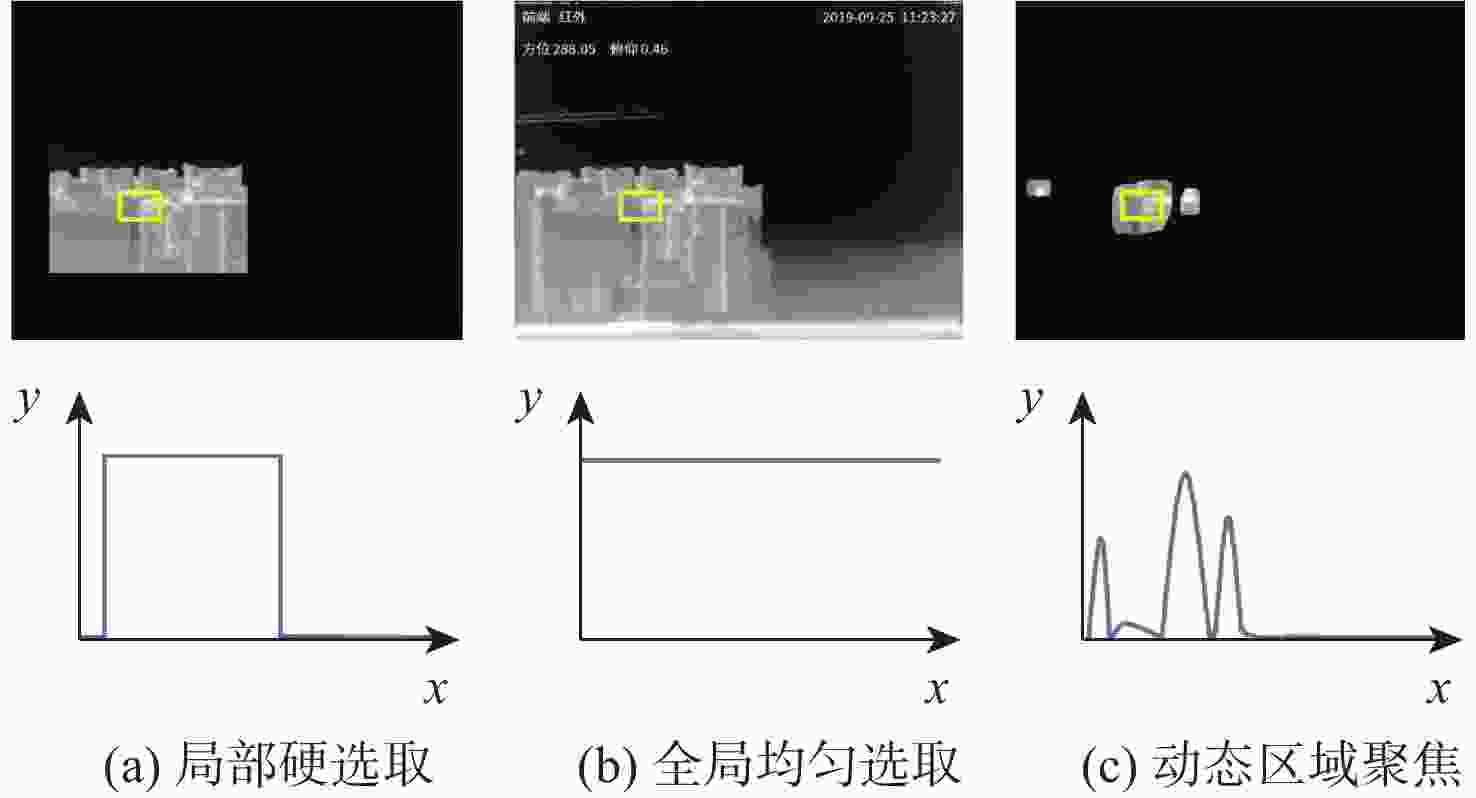

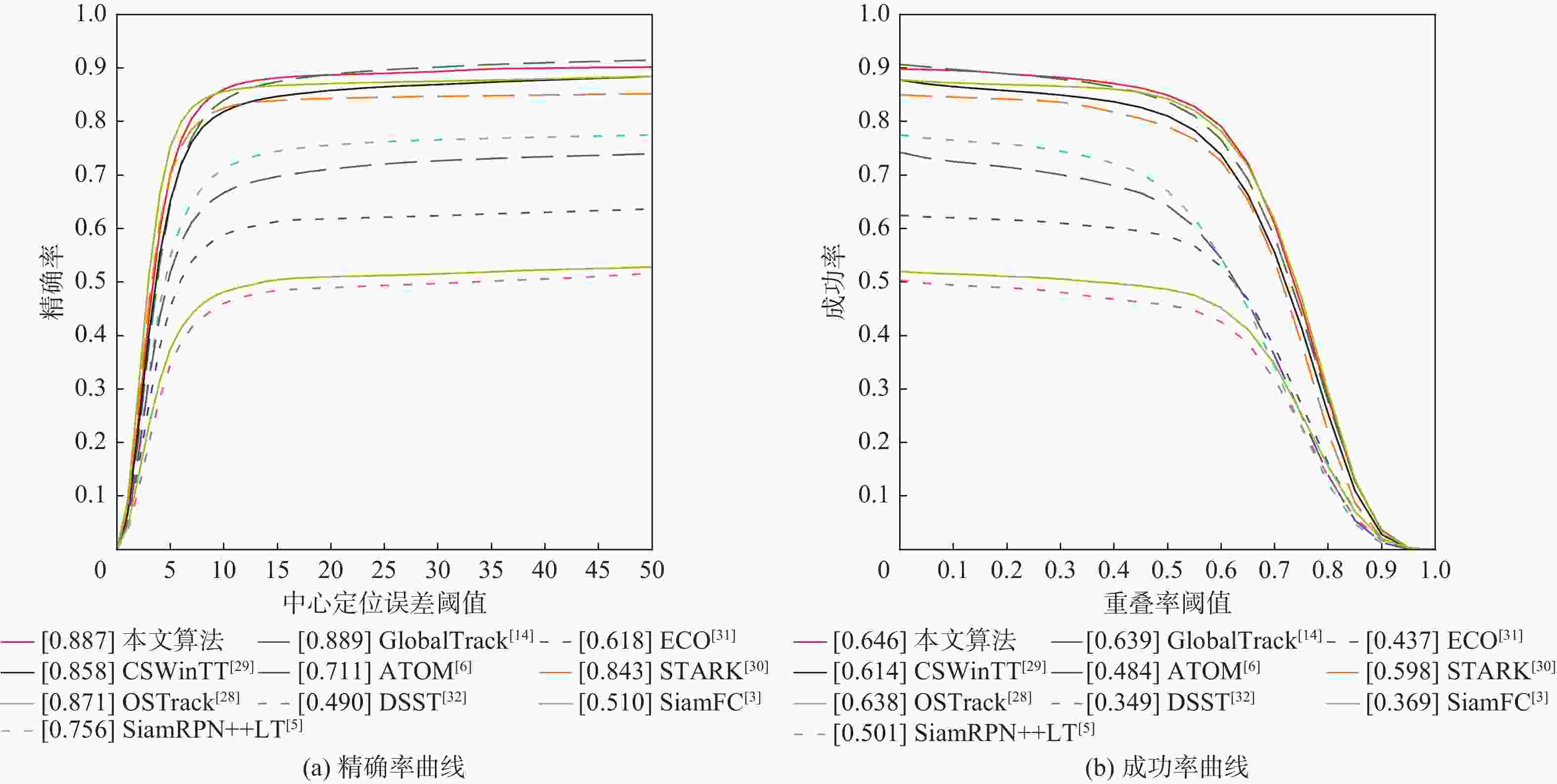

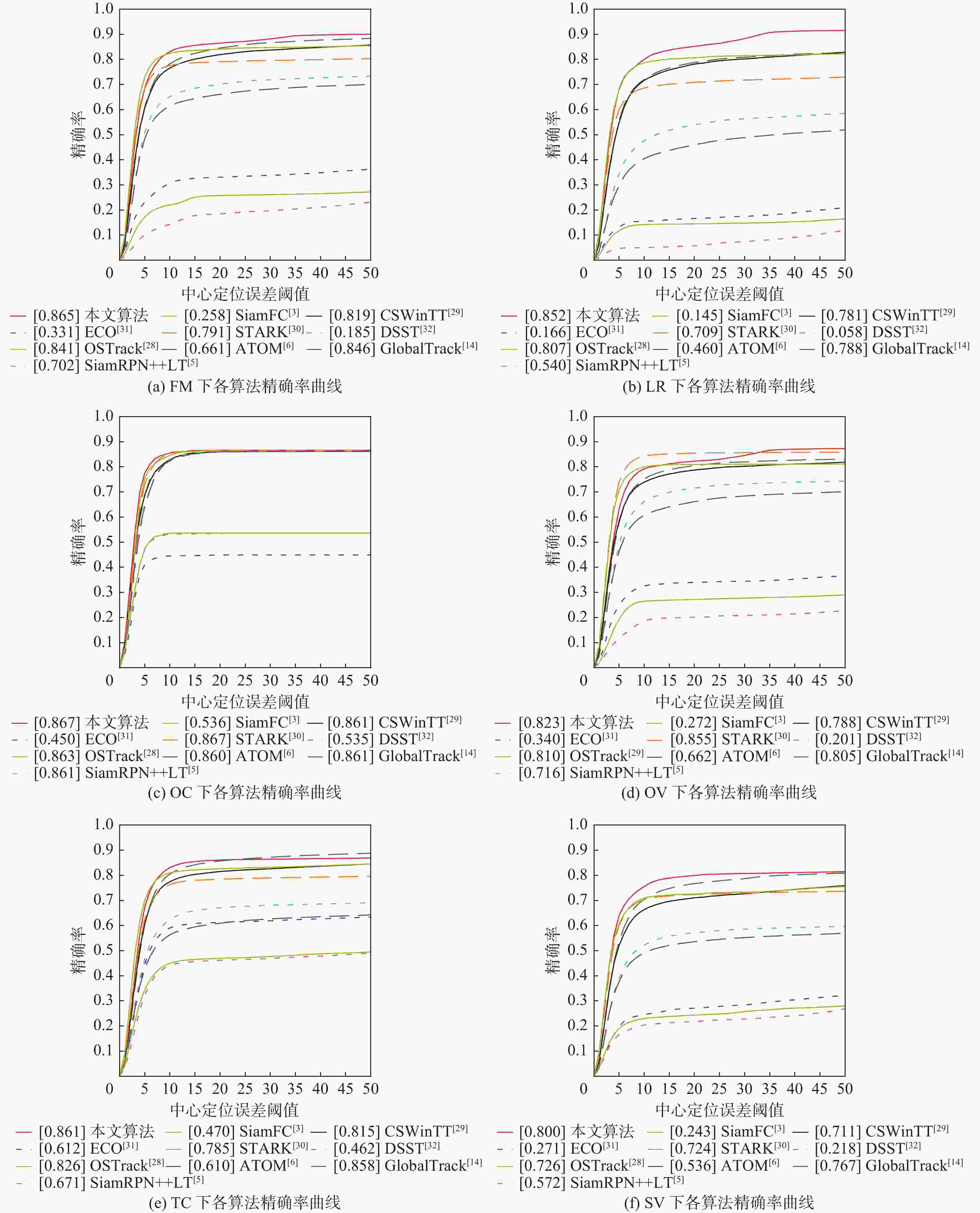

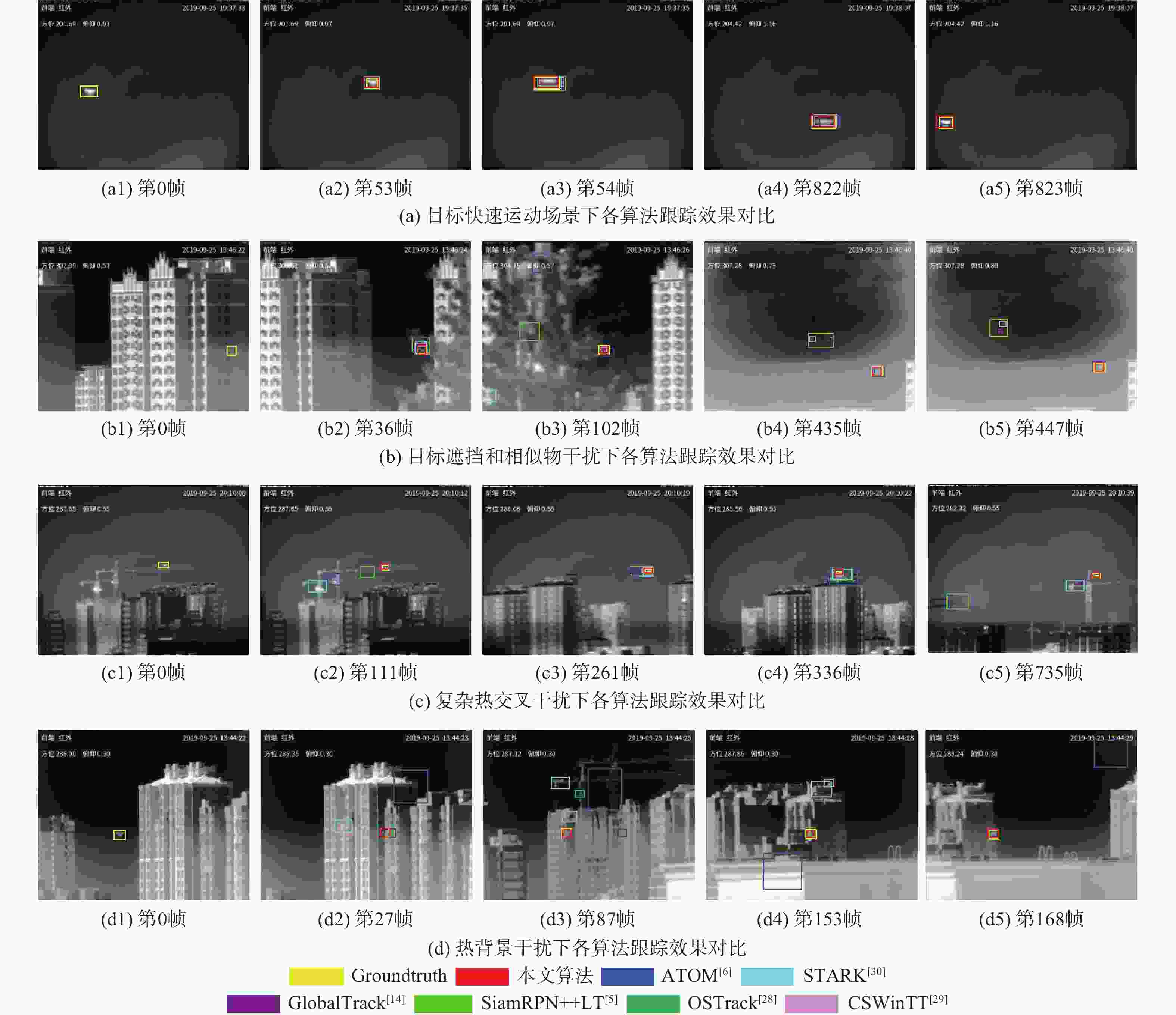

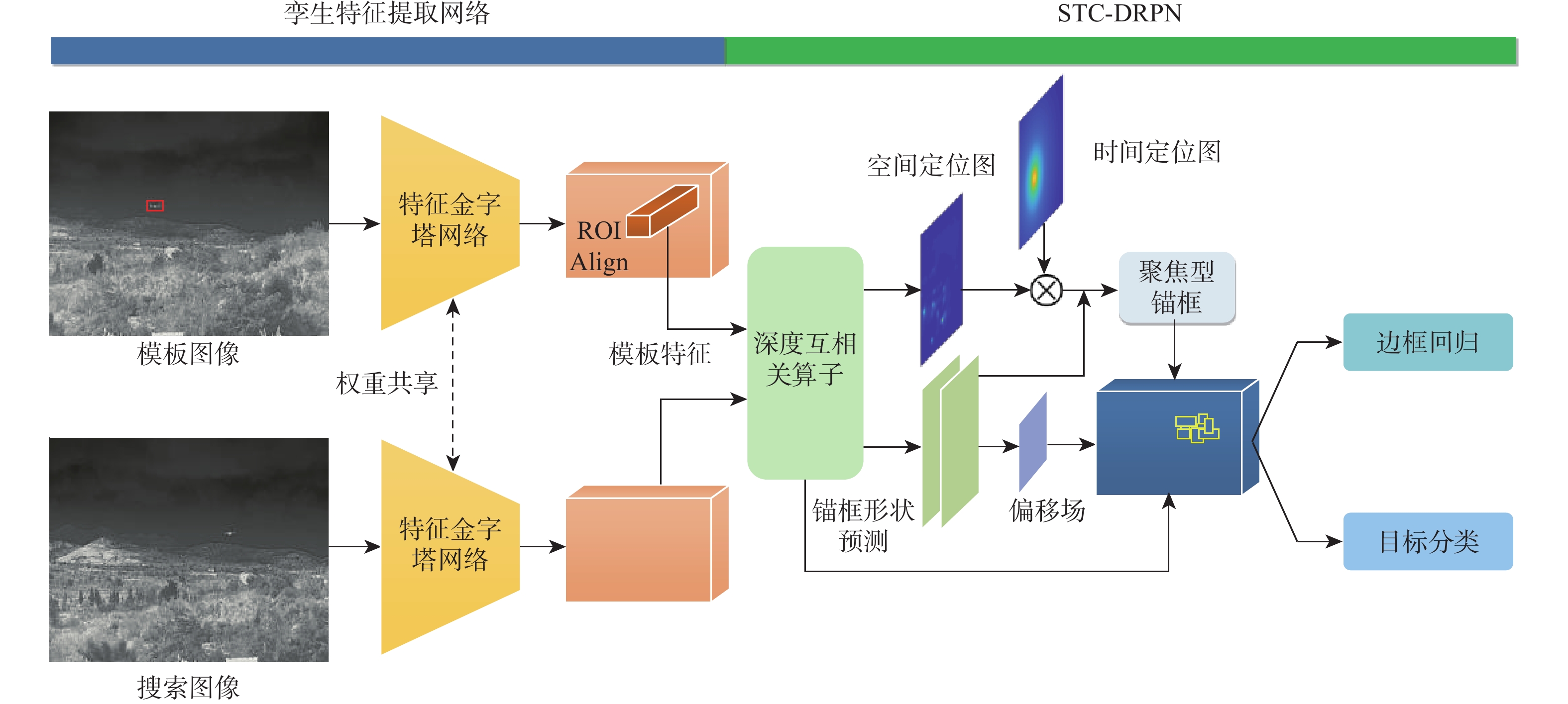

无人机(UAV)的滥用催促着反无人机(anti-UAV)技术发展。基于红外探测器的反无人机目标跟踪技术成为反无人机领域的研究热点,但仍面临着因背景干扰所导致的跟踪失败问题。为提高复杂环境下红外反无人机跟踪的准确性和稳定性,提出一种动态区域聚焦的反无人机红外长时跟踪算法。构建基于特征金字塔的孪生主干网络,通过跨尺度特征融合,增强网络对红外无人机的特征提取能力。提出基于时空联合约束的动态区域建议网络,通过联合目标模板表观特征和目标运动约束,在全图范围内预测目标的定位概率分布,并引导先验锚框聚焦于候选区域,实现一种动态搜索区域选取。通过聚焦搜索区域,所提算法融合了局部搜索的抗背景干扰能力与全局搜索的重捕获能力,有效缓解全局搜索带来的负样本干扰,进一步增强目标特征的可辨别性。在Anti-UAV数据集上进行评测,与其他先进跟踪算法相比,所提算法具有更高的性能指标,跟踪精确率、成功率和平均准确度分别达到0.895、0.649和0.656,运行速度达到18.5 帧/s,在快速运动、热交叉干扰和相似物干扰等复杂场景下,也具有优越的跟踪效果。

Abstract:The misuse of unmanned aerial vehicles (UAV) is accelerating the development of anti-UAV technologies. Infrared detector-based tracking methods have gained special attention in the anti-UAV field, which, however, still face the problem of tracking failures caused by background interference. To enhance the precision and stability of infrared anti-UAV tracking in complex environments, this paper proposed a long-term infrared object tracking algorithm based on dynamic region focusing. Firstly, the Siamese backbone network based on feature pyramid was constructed to improve the feature extraction capability of the model for infrared UAV by the fusion of cross-scale features. Secondly, a dynamic region proposal network based on spatio-temporal joint constraints was proposed. Under the constraints of template appearance features and target motion information, the location probability distribution of the object was predicted over the entire image, and then the prior anchor box was guided to focus on the candidate regions, realizing a dynamic search region selection mechanism. The anti-background interference capability of local search and the recapture ability of global search were subtly integrated by focusing on the search area, which effectively mitigated the negative sample interference caused by global search and further enhanced the discriminability of target features. Experiments on the Anti-UAV dataset show that the proposed algorithm achieves precision of 0.895, a success rate of 0.649, and average accuracy of 0.656 with a tracking speed of 18.5 FPS. Compared with other advanced tracking algorithms, the proposed algorithm exhibits superior performance and demonstrates its effectiveness in handling complex tracking scenarios such as fast motion, thermal crossover, and similar distractors.
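The idea of focusing anchors with a location probability map can be illustrated with a small sketch. This is not the paper's network. It assumes a hypothetical appearance score map (which a template-matching branch might produce), a Gaussian motion prior centred on a predicted position, multiplicative fusion, and a relative threshold; all of these names and choices are illustrative assumptions.

```python
import math


def motion_prior(width, height, center, sigma):
    """Gaussian prior over grid cells, centred on a motion-predicted position.

    Assumption: a Gaussian is used only for illustration; the source does not
    state the form of the motion constraint.
    """
    cx, cy = center
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(width)] for y in range(height)]


def focus_anchors(appearance, prior, threshold=0.5):
    """Fuse both maps cell by cell and keep the cells worth placing anchors on.

    Assumption: the fusion is a simple product and the threshold is relative
    to the peak of the fused map.
    """
    fused = [[a * p for a, p in zip(row_a, row_p)]
             for row_a, row_p in zip(appearance, prior)]
    peak = max(max(row) for row in fused)
    if peak <= 0:
        # No evidence anywhere: fall back to every cell, i.e. a global search.
        return [(x, y) for y, row in enumerate(fused) for x, _ in enumerate(row)]
    return [(x, y) for y, row in enumerate(fused)
            for x, value in enumerate(row) if value / peak >= threshold]


# Hypothetical 5x5 score map: true target at (1, 1), similar distractor at (4, 4).
appearance = [[0.05] * 5 for _ in range(5)]
appearance[1][1] = 0.9
appearance[4][4] = 0.8
prior = motion_prior(5, 5, center=(1, 1), sigma=1.0)
print(focus_anchors(appearance, prior))  # prints [(1, 1)]
```

In this toy case the distractor scores almost as high as the target on appearance alone, but the motion prior suppresses it, so anchors are kept only near the target. When the fused map carries no evidence, the sketch falls back to all cells, which mirrors the recapture role of global search described in the abstract.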

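Table 1. Quantitative comparison results of tracking algorithms on Anti-UAV

| Algorithm | Precision | Success rate | Average accuracy | Speed (FPS) |
| --- | --- | --- | --- | --- |
| DSST[32] | 0.490 | 0.349 | 0.354 | 31.2 |
| SiamFC[3] | 0.510 | 0.369 | 0.375 | 60.2 |
| ECO[31] | 0.618 | 0.437 | 0.444 | 7.5 |
| ATOM[6] | 0.711 | 0.484 | 0.490 | 28.7 |
| SiamRPN++LT[5] | 0.756 | 0.501 | 0.507 | 26.3 |
| STARK[30] | 0.843 | 0.588 | 0.607 | 33.5 |
| CSWinTT[29] | 0.858 | 0.614 | 0.623 | 8.5 |
| OSTrack[28] | 0.871 | 0.638 | 0.647 | 22.6 |
| GlobalTrack[14] | 0.889 | 0.639 | 0.648 | 10.2 |
| Proposed algorithm | 0.887 | 0.646 | 0.656 | 18.5 |

Table 2. Performance comparison of model components

| Variant | FPN | STC-DRPN | QG-RPN | Precision | Success rate | Average accuracy |
| --- | --- | --- | --- | --- | --- | --- |
| 1 |  |  | √ | 0.854 | 0.612 | 0.617 |
| 2 |  | √ |  | 0.877 | 0.632 | 0.638 |
| 3 | √ |  | √ | 0.871 | 0.627 | 0.632 |
| 4 | √ | √ |  | 0.895 | 0.649 | 0.656 |

Table 3. Performance comparison of different target location prediction strategies

| Spatial location prediction | Temporal location prediction | Precision | Success rate | Average accuracy |
| --- | --- | --- | --- | --- |
| √ |  | 0.875 | 0.632 | 0.638 |
|  | √ | 0.713 | 0.474 | 0.485 |
| √ | √ | 0.895 | 0.649 | 0.656 |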
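The tables report precision and success rate without defining them here. The sketch below computes both under the common OTB-style conventions, which are an assumption: precision as the fraction of frames whose centre location error is within 20 pixels, and success rate as the area under the curve of IoU-threshold success. Boxes are assumed to be `(x, y, w, h)` tuples. The Anti-UAV average accuracy metric is not covered.

```python
import math


def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a, b):
    """Euclidean distance between the centres of two (x, y, w, h) boxes."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def precision(preds, gts, pixel_threshold=20.0):
    """Fraction of frames with centre error within the pixel threshold.

    Assumption: the 20-pixel threshold follows the usual OTB convention.
    """
    hits = sum(center_error(p, g) <= pixel_threshold for p, g in zip(preds, gts))
    return hits / len(gts)


def success_auc(preds, gts, steps=21):
    """Mean success rate over IoU thresholds 0, 0.05, ..., 1 (area under the curve)."""
    overlaps = [iou(p, g) for p, g in zip(preds, gts)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


# Two frames: one perfect prediction and one complete miss.
gts = [(0, 0, 10, 10), (0, 0, 10, 10)]
preds = [(0, 0, 10, 10), (100, 100, 10, 10)]
print(precision(preds, gts))  # prints 0.5
print(round(success_auc(preds, gts), 4))  # prints 0.4762
```

Both functions expect at least one frame; an empty sequence would raise `ZeroDivisionError`, which a real evaluation script would need to guard against.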

[1] ZHANG R X, LI N, ZHANG X X, et al. Low-altitude UAV detection method based on optimized CenterNet[J]. Journal of Beijing University of Aeronautics and Astronautics, 2022, 48(11): 2335-2344 (in Chinese).

[2] LUO J H, WANG Z Y. A review of development and application of UAV detection and counter technology[J]. Control and Decision, 2022, 37(3): 530-544 (in Chinese).

[3] BERTINETTO L, VALMADRE J, HENRIQUES J F, et al. Fully-convolutional siamese networks for object tracking[M]//Computer Vision-ECCV 2016 Workshops. Berlin: Springer, 2016: 850-865.

[4] LI B, YAN J J, WU W, et al. High performance visual tracking with siamese region proposal network[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 8971-8980.

[5] LI B, WU W, WANG Q, et al. SiamRPN++: evolution of siamese visual tracking with very deep networks[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 4277-4286.

[6] DANELLJAN M, BHAT G, KHAN F S, et al. ATOM: accurate tracking by overlap maximization[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 4655-4664.

[7] BHAT G, DANELLJAN M, VAN GOOL L, et al. Learning discriminative model prediction for tracking[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 6181-6190.

[8] FAN H, LING H B. SANet: structure-aware network for visual tracking[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. Piscataway: IEEE Press, 2017: 2217-2224.

[9] BHAT G, DANELLJAN M, VAN GOOL L, et al. Know your surroundings: exploiting scene information for object tracking[M]//Computer Vision-ECCV 2020. Berlin: Springer, 2020: 205-221.

[10] BAI L, ZHANG H L, WANG C. Target tracking algorithm based on efficient attention and context awareness[J]. Journal of Beijing University of Aeronautics and Astronautics, 2022, 48(7): 1222-1232 (in Chinese).

[11] KALAL Z, MIKOLAJCZYK K, MATAS J. Tracking-learning-detection[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(7): 1409-1422. doi: 10.1109/TPAMI.2011.239

[12] YAN B, ZHAO H J, WANG D, et al. ‘Skimming-perusal’ tracking: a framework for real-time and robust long-term tracking[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 2385-2393.

[13] DAI K N, ZHANG Y H, WANG D, et al. High-performance long-term tracking with meta-updater[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 6297-6306.

[14] HUANG L H, ZHAO X, HUANG K Q. GlobalTrack: a simple and strong baseline for long-term tracking[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(7): 11037-11044. doi: 10.1609/aaai.v34i07.6758

[15] VOIGTLAENDER P, LUITEN J, TORR P H S, et al. Siam R-CNN: visual tracking by re-detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2020: 6577-6587.

[16] FANG H Z, WANG X L, LIAO Z K, et al. A real-time anti-distractor infrared UAV tracker with channel feature refinement module[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops. Piscataway: IEEE Press, 2021: 1240.

[17] CHEN J J, HUANG B, LI J N, et al. Learning spatio-temporal attention based siamese network for tracking UAVs in the wild[J]. Remote Sensing, 2022, 14(8): 1797. doi: 10.3390/rs14081797

[18] LIN T Y, DOLLÁR P, GIRSHICK R, et al. Feature pyramid networks for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 936-944.

[19] SHI X R, ZHANG Y, SHI Z G, et al. GASiam: graph attention based Siamese tracker for infrared anti-UAV[C]//Proceedings of the 3rd International Conference on Computer Vision, Image and Deep Learning & International Conference on Computer Engineering and Applications. Piscataway: IEEE Press, 2022: 986-993.

[20] XIAO J P, JIANG J C, SHE C D. Data attack detection for an unmanned aerial vehicle control system using innovation sequences[J]. Control Theory & Applications, 2017, 34(12): 1575-1582 (in Chinese). doi: 10.7641/CTA.2017.70053

[21] WANG J Q, CHEN K, YANG S, et al. Region proposal by guided anchoring[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 2960-2969.

[22] REN S Q, HE K M, GIRSHICK R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 39(6): 1137-1149. doi: 10.1109/TPAMI.2016.2577031

[23] SONG G L, LIU Y, JIANG M, et al. Beyond trade-off: accelerate FCN-based face detector with higher accuracy[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 7756-7764.

[24] LIN T Y, GOYAL P, GIRSHICK R, et al. Focal loss for dense object detection[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 2999-3007.

[25] TYCHSEN-SMITH L, PETERSSON L. Improving object localization with fitness NMS and bounded IoU loss[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2018: 6877-6885.

[26] JIANG N, WANG K R, PENG X K, et al. Anti-UAV: a large-scale benchmark for vision-based UAV tracking[J]. IEEE Transactions on Multimedia, 2021, 25: 486-500.

[27] LIU Q, LI X, YUAN D, et al. LSOTB-TIR: a large-scale high-diversity thermal infrared single object tracking benchmark[J]. IEEE Transactions on Neural Networks and Learning Systems, 2024, 35(7): 9844-9857. doi: 10.1109/TNNLS.2023.3236895

[28] YE B T, CHANG H, MA B P, et al. Joint feature learning and relation modeling for tracking: a one-stream framework[M]//Computer Vision-ECCV 2022. Berlin: Springer, 2022: 341-357.

[29] SONG Z K, YU J Q, CHEN Y P, et al. Transformer tracking with cyclic shifting window attention[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2022: 8781-8790.

[30] YAN B, PENG H W, FU J L, et al. Learning spatio-temporal transformer for visual tracking[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 10428-10437.

[31] ZOLFAGHARI M, SINGH K, BROX T. ECO: efficient convolutional network for online video understanding[M]//Computer Vision-ECCV 2018. Berlin: Springer, 2018: 713-730.

[32] DANELLJAN M, HÄGER G, SHAHBAZ KHAN F, et al. Accurate scale estimation for robust visual tracking[C]//Proceedings of the British Machine Vision Conference. Brighton: British Machine Vision Association, 2014: 1-11.
