
Abstract:

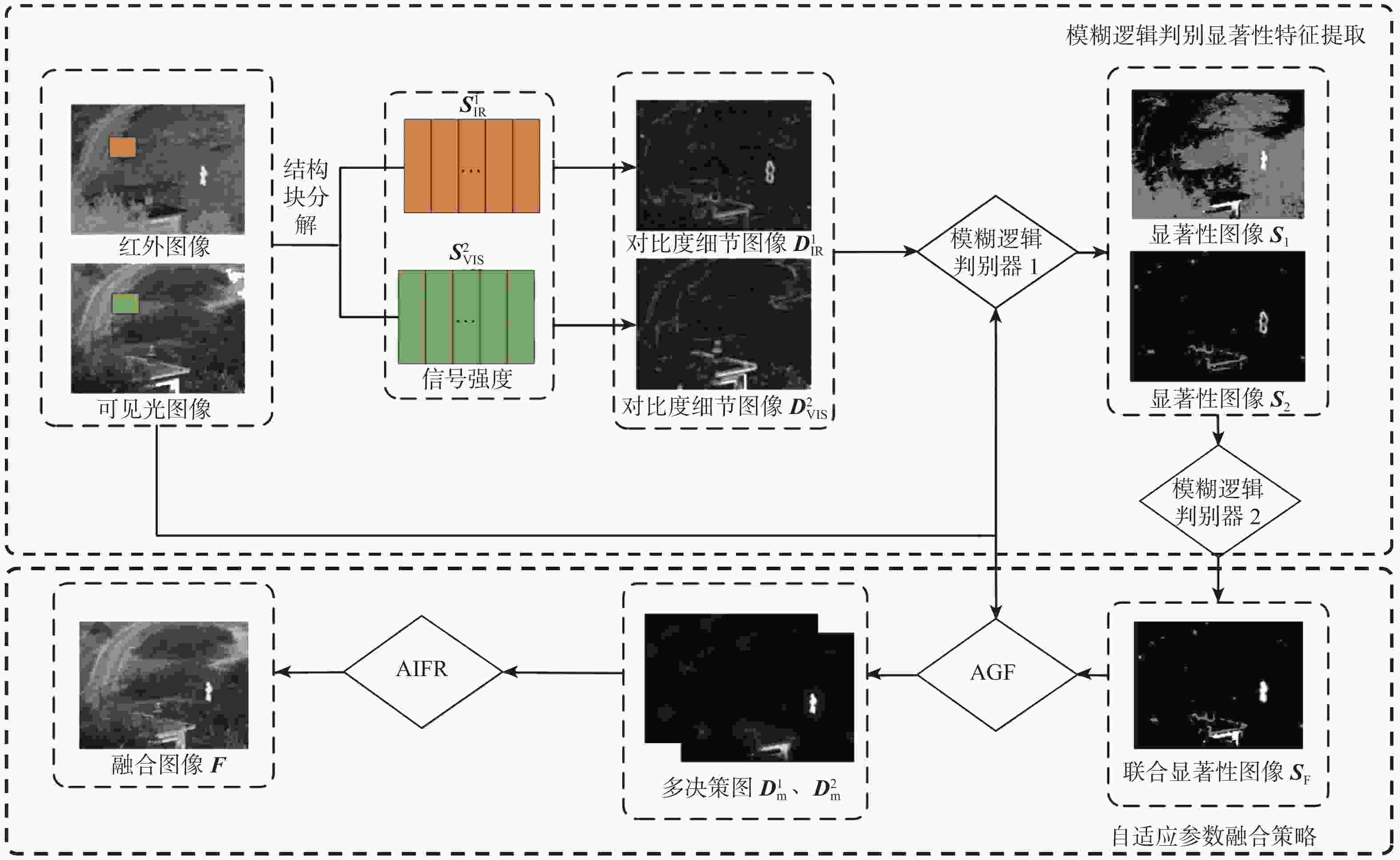

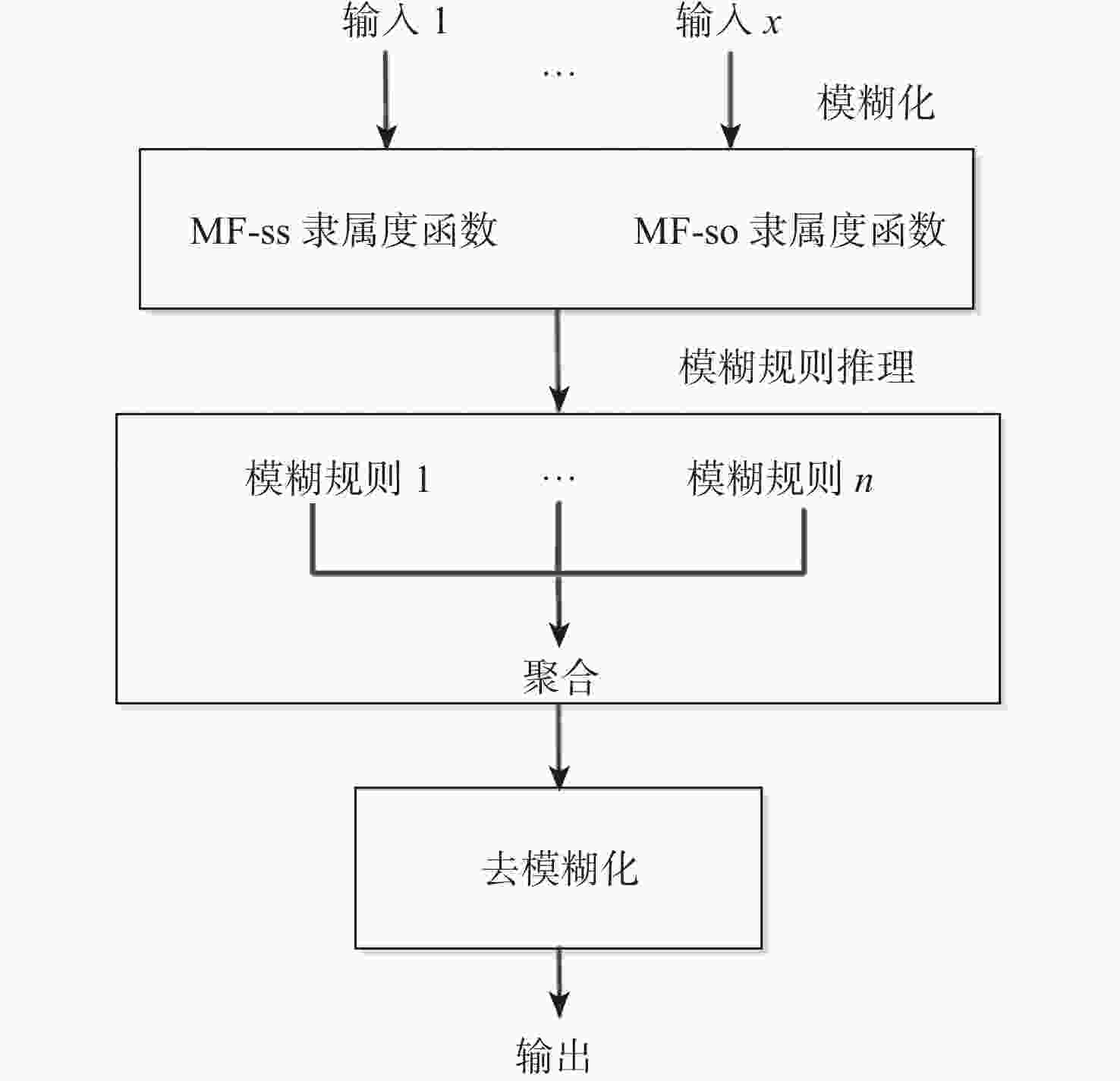

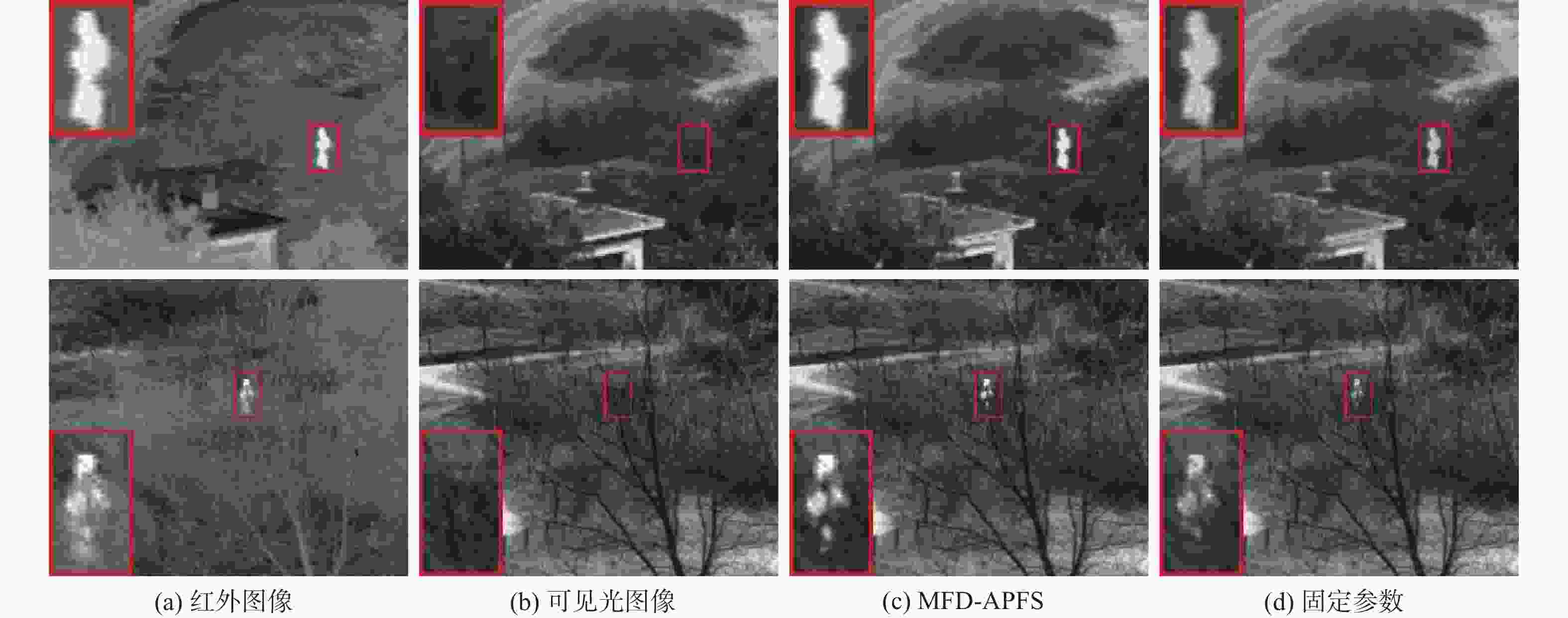

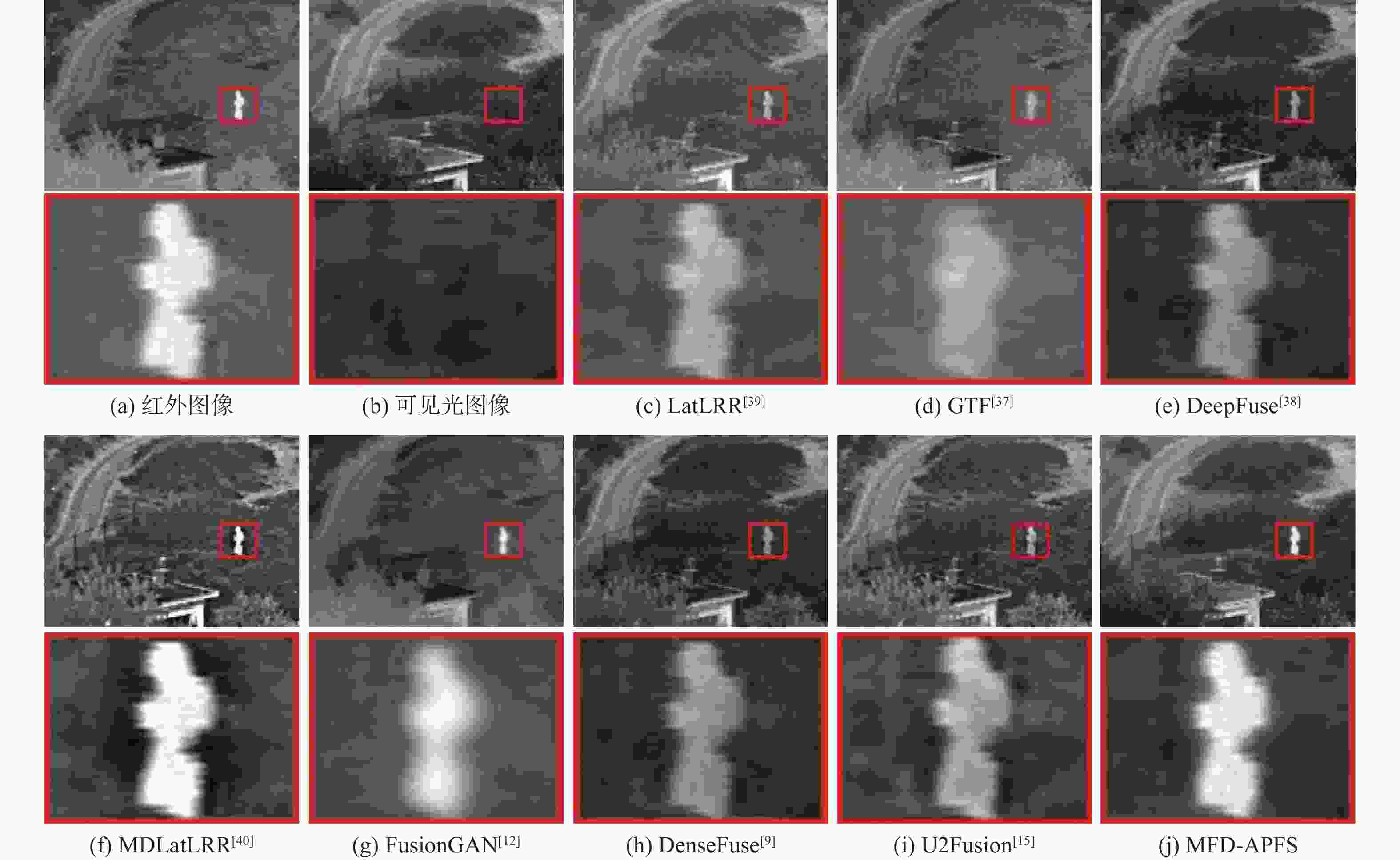

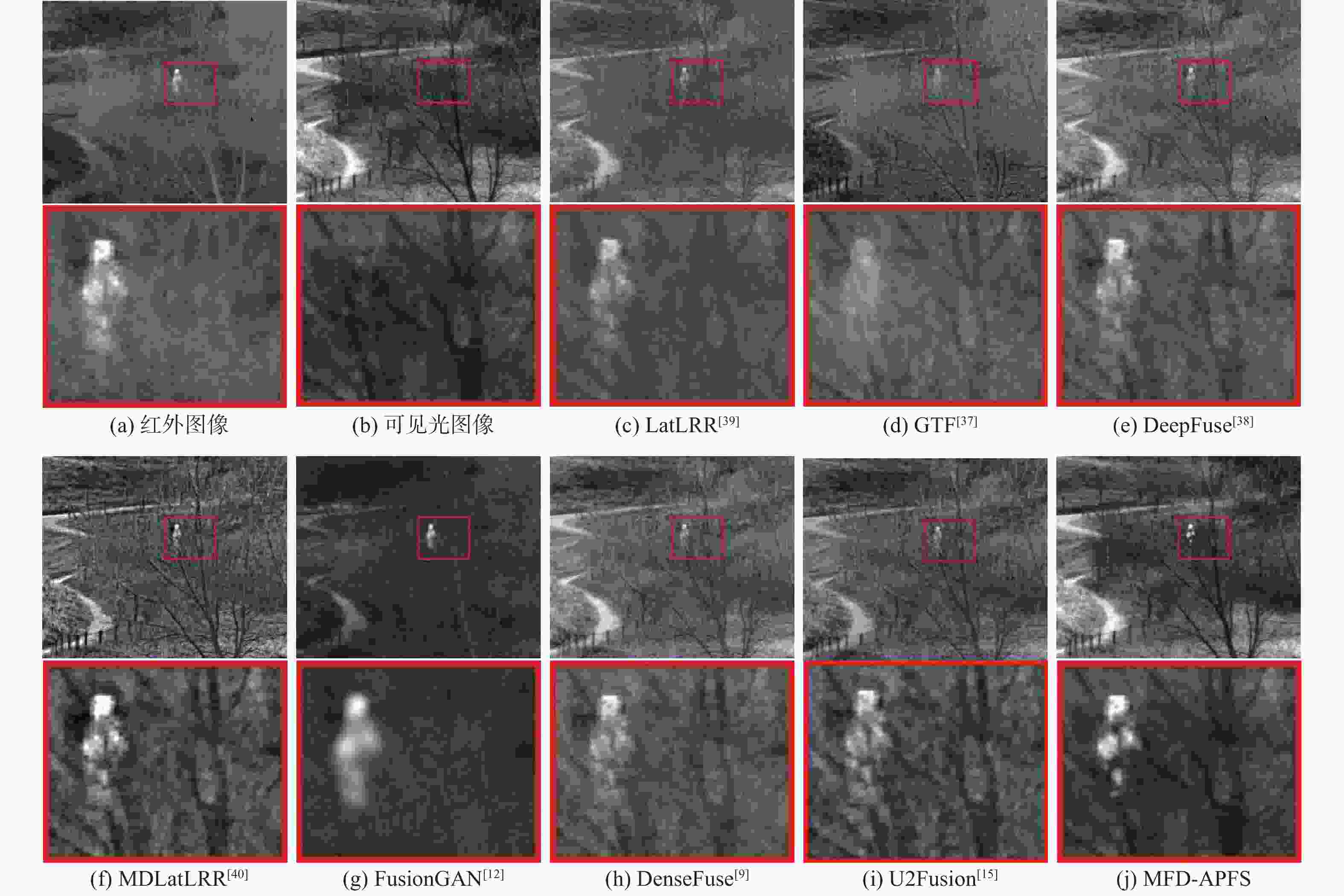

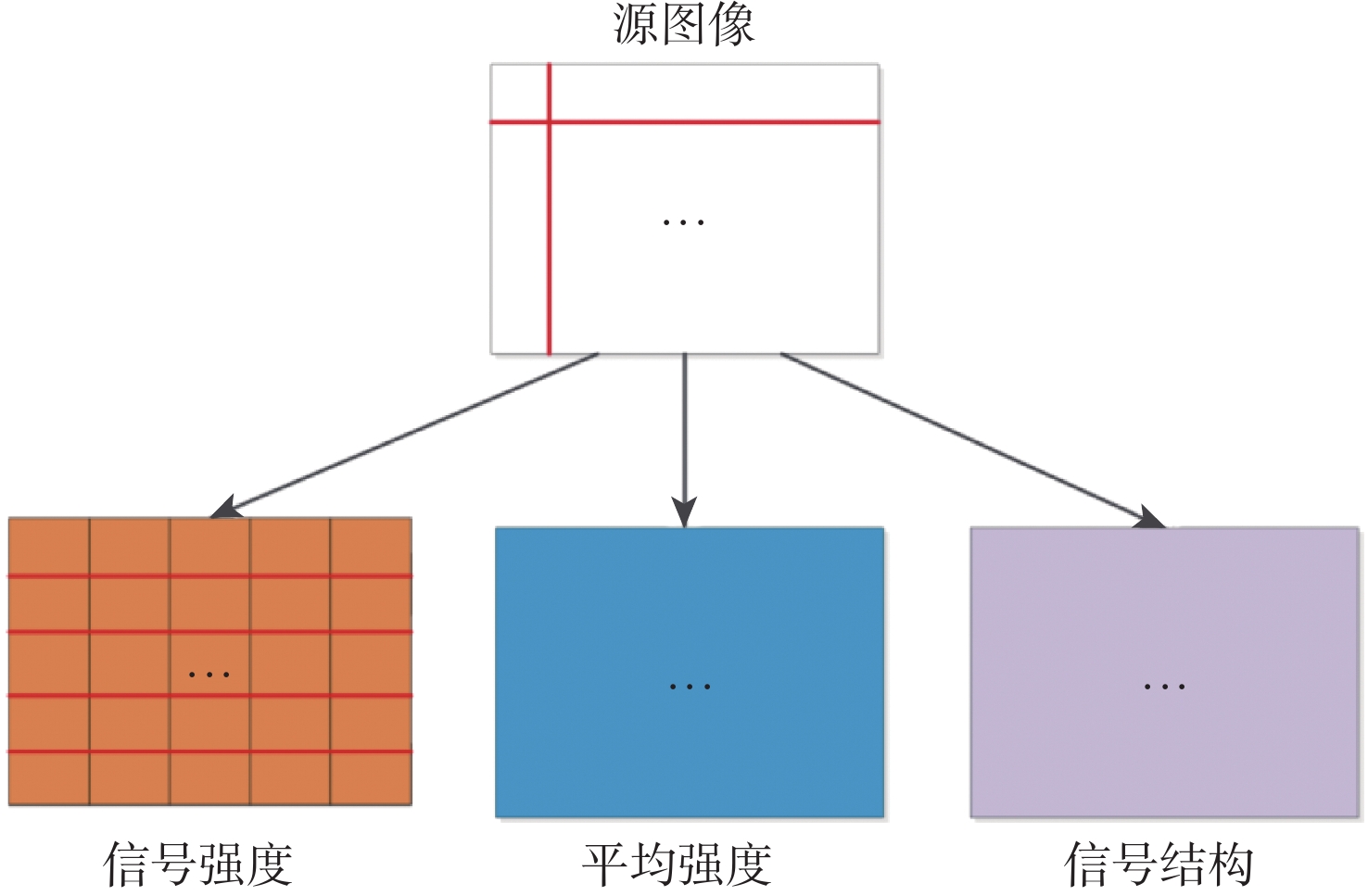

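Because of their different imaging mechanisms, infrared images capture target information while visible images provide texture details, so the two need to be fused to improve visual perception and machine recognition. Based on fuzzy logic theory, an infrared and visible image fusion method using multistage fuzzy logic discrimination and an adaptive parameter fusion strategy (MFD-APFS) is proposed. The infrared and visible images are each decomposed into structural patches, yielding a group of contrast-detail images reconstructed from the signal-strength component. The source image group and the contrast-detail image group are each fed into the designed fuzzy logic discrimination system, which produces a saliency image for each group; a second fuzzy logic discrimination is then applied to this group of saliency images to obtain a joint saliency image. Using guided filtering, with the saliency image guiding the source images, several decision maps are obtained, and the final fused image is produced by the adaptive parameter fusion strategy. MFD-APFS was tested on public infrared and visible image datasets. Compared with seven mainstream fusion methods, it improves the objective metrics SSIM-F and $Q^{AB/F}$ by 0.169 and 0.1403, respectively, on the TNO dataset, and by 0.1753 and 0.0537, respectively, on the RoadScenes dataset. Subjective visual results show that the proposed method generates fused images with clear targets and rich details, preserving both the target information of the infrared image and the texture information of the visible image.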

Keywords:

- Infrared and visible image fusion

- Adaptive parameter fusion strategy

- Multistage fuzzy logic

- Guided filtering

- Decision map
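The structural patch decomposition in the first step follows Ma et al. [22]: a patch vector $x$ is written as $x = c\,s + l$, with signal strength $c = \lVert x - \mu_x \rVert$, unit-norm structure $s = (x - \mu_x)/c$ and mean intensity $l = \mu_x$. The minimal NumPy sketch below computes these components. It assumes non-overlapping square patches, image dimensions divisible by the patch size, and that the contrast-detail image is simply the signal-strength map $c$ expanded back to pixel resolution; the patch size of 8 and the helper names are hypothetical and not taken from the paper.

```python
import numpy as np

def spd_components(img, patch=8):
    """Decompose non-overlapping patch x patch blocks of a grayscale image into
    signal strength c, structure s and mean intensity l, so that x = c * s + l.
    Assumes both image dimensions are multiples of `patch` (simplification)."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    # (rows, cols, patch*patch): one flattened vector per block
    vecs = (img.reshape(h // patch, patch, w // patch, patch)
               .swapaxes(1, 2)
               .reshape(h // patch, w // patch, patch * patch))
    l = vecs.mean(axis=-1, keepdims=True)                 # mean intensity
    centered = vecs - l
    c = np.linalg.norm(centered, axis=-1, keepdims=True)  # signal strength
    # unit-norm structure; flat blocks (c == 0) get an all-zero structure vector
    s = np.divide(centered, c, out=np.zeros_like(centered), where=c > 0)
    return c[..., 0], s, l[..., 0]

def contrast_detail_map(img, patch=8):
    """Hypothetical contrast-detail image: each block's signal strength c,
    repeated over the pixels of that block."""
    c, _, _ = spd_components(img, patch)
    return np.kron(c, np.ones((patch, patch)))
```

Applied to the infrared and the visible image separately, `contrast_detail_map` yields the pair of contrast-detail images that, in the pipeline described above, are passed to the fuzzy discrimination system alongside the source images.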

Abstract: Owing to their different imaging mechanisms, infrared imaging can capture target information even under special conditions in which the target is obstructed, whereas visible-light imaging captures the texture details of the scene. Therefore, to obtain a fused image containing both target information and texture details, infrared and visible-light images are generally combined to facilitate visual perception and machine recognition. Based on fuzzy logic theory, an infrared and visible-light image fusion method combining multistage fuzzy discrimination and an adaptive parameter fusion strategy (MFD-APFS) was proposed. First, the infrared and visible-light images were decomposed into structural patches, yielding a contrast-detail image set reconstructed from the signal-strength component. Second, the source image set and the contrast-detail image set were each processed by a designed fuzzy discrimination system, generating a saliency map for each set; a second-stage fuzzy discrimination was then applied to these saliency maps to produce a unified saliency map. Finally, guided filtering was applied, with the saliency map guiding the source images, to obtain multiple decision maps, and the final fused image was obtained through the adaptive parameter fusion strategy. The proposed MFD-APFS method was evaluated on publicly available infrared and visible-light datasets. Compared with seven mainstream fusion methods, it improves the objective metrics: on the TNO dataset, SSIM-F and $Q^{AB/F}$ were improved by 0.169 and 0.1403, respectively, and on the RoadScenes dataset by 0.1753 and 0.0537, respectively. Furthermore, subjective visual analysis indicates that the proposed method generates fused images with clear targets and rich details while retaining infrared target information and visible texture information.
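The guided-filtering step can be illustrated with the standard guided filter of He, Sun and Tang (box-filter form), followed by a normalized weighted average of the sources. This is a minimal NumPy sketch under stated assumptions: the radius `r` and regularizer `eps` are illustrative values, which image acts as the guide is left to the caller, and `weighted_fusion` is a plain normalized average used as a hypothetical stand-in, not the paper's adaptive parameter fusion strategy, whose details are not given here.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image.
    Near the borders only the in-image part of the window is averaged."""
    x = np.asarray(x, dtype=np.float64)
    h, w = x.shape
    ii = np.zeros((h + 1, w + 1))
    ii[1:, 1:] = x.cumsum(axis=0).cumsum(axis=1)
    rows, cols = np.arange(h), np.arange(w)
    y0, y1 = np.clip(rows - r, 0, h), np.clip(rows + r + 1, 0, h)
    x0, x1 = np.clip(cols - r, 0, w), np.clip(cols + r + 1, 0, w)
    total = ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]
    count = (y1 - y0)[:, None] * (x1 - x0)[None, :]
    return total / count

def guided_filter(guide, src, r=4, eps=1e-2):
    """Guided filter: fit src ~ a * guide + b in each window, then average a, b."""
    I = np.asarray(guide, dtype=np.float64)
    p = np.asarray(src, dtype=np.float64)
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)

def weighted_fusion(sources, weights, tiny=1e-12):
    """Normalized weighted average of source images (hypothetical stand-in
    for the adaptive parameter fusion strategy)."""
    W = np.clip(np.stack(weights).astype(np.float64), 0.0, None)
    W /= np.maximum(W.sum(axis=0), tiny)
    return (W * np.stack(sources)).sum(axis=0)
```

A refined decision map would then be, for example, `guided_filter(guide, raw_map)` for each source, and the fused image `weighted_fusion([ir, vis], [map_ir, map_vis])`; all of these variable names are hypothetical.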

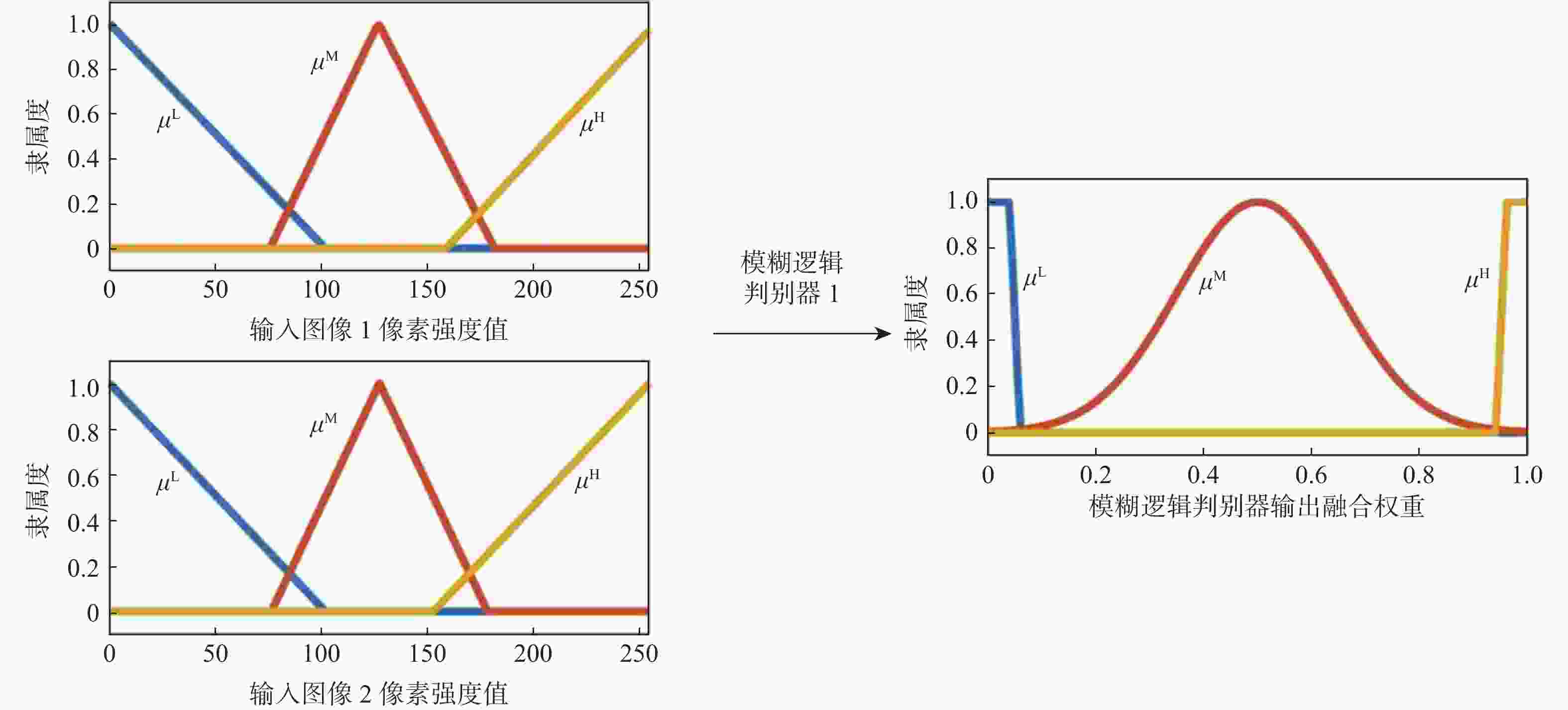

Table 1. MF-ss fuzzy rules for fuzzy logic discriminator 1

| MF-sf \ MF-ss | $\mu_{\text{in\_1}}^{\text{L}}$ | $\mu_{\text{in\_1}}^{\text{M}}$ | $\mu_{\text{in\_1}}^{\text{H}}$ |
| --- | --- | --- | --- |
| $\mu_{\text{in\_1}}^{\text{L}}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}1}$ |
| $\mu_{\text{in\_1}}^{\text{M}}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}1}$ |
| $\mu_{\text{in\_1}}^{\text{H}}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ |

Table 2. MF-so fuzzy rules for fuzzy logic discriminator 1

| MF-sf \ MF-so | $\mu_{\text{in\_1}}^{\text{L}}$ | $\mu_{\text{in\_1}}^{\text{M}}$ | $\mu_{\text{in\_1}}^{\text{H}}$ |
| --- | --- | --- | --- |
| $\mu_{\text{in\_1}}^{\text{L}}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}1}$ |
| $\mu_{\text{in\_1}}^{\text{M}}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}1}$ |
| $\mu_{\text{in\_1}}^{\text{H}}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ |

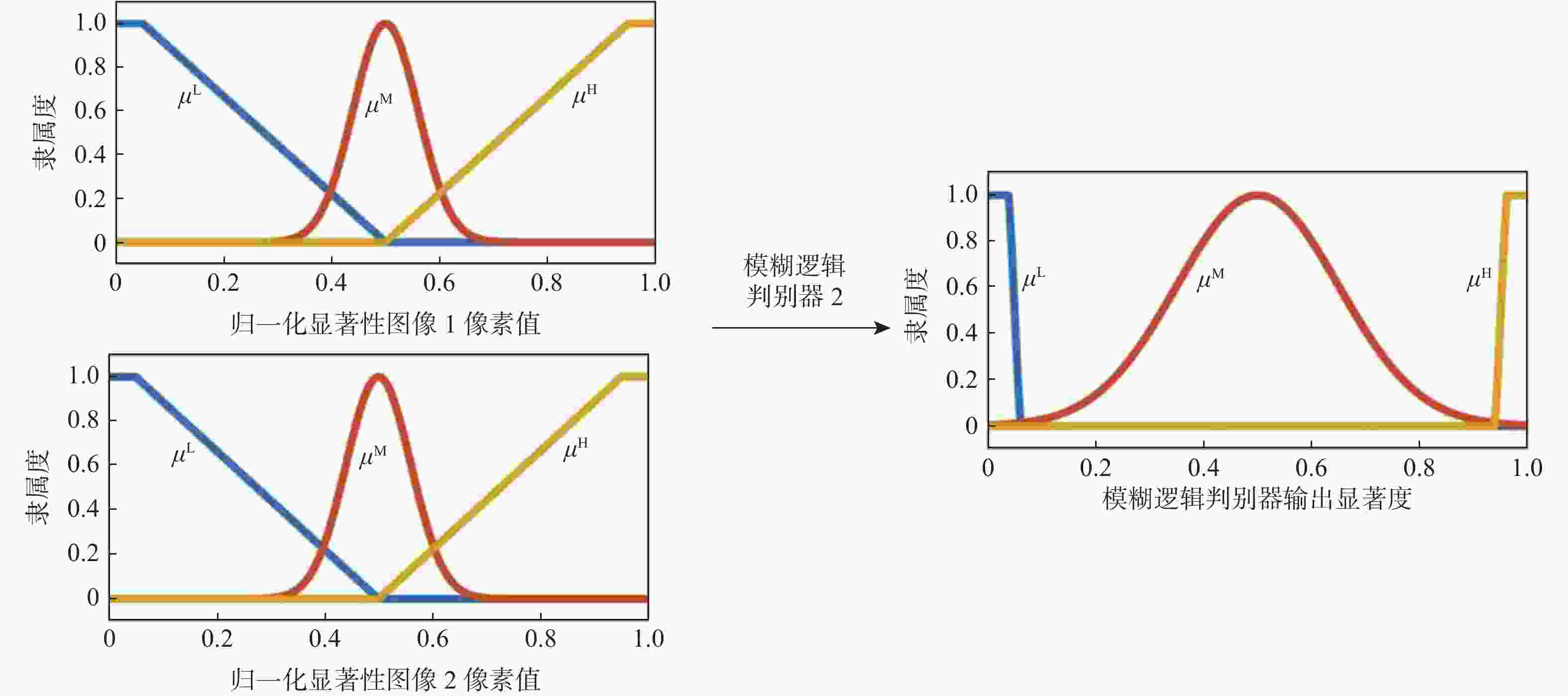

Table 3. Fuzzy rules for fuzzy logic discriminator 2

| MF-sf \ MF-sp | $\mu_{\text{in\_2}}^{\text{L}}$ | $\mu_{\text{in\_2}}^{\text{M}}$ | $\mu_{\text{in\_2}}^{\text{H}}$ |
| --- | --- | --- | --- |
| $\mu_{\text{in\_2}}^{\text{L}}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}3}$ |
| $\mu_{\text{in\_2}}^{\text{M}}$ | $\mu_{\mathrm{w}1}$ | $\mu_{\mathrm{w}2}$ | $\mu_{\mathrm{w}3}$ |
| $\mu_{\text{in\_2}}^{\text{H}}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ | $\mu_{\mathrm{w}3}$ |
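Tables 1 to 3 can be read as 3 × 3 rule bases whose consequents are the output labels $\mu_{\mathrm{w}1}$, $\mu_{\mathrm{w}2}$ and $\mu_{\mathrm{w}3}$. The minimal sketch below evaluates such a rule base in the usual Mamdani style (AND as min, rules sharing a consequent combined by max). It assumes that rows index the MF-sf label and columns index the second input (MF-ss, MF-so or MF-sp); the extracted tables do not state which axis is which, and the paper's inference and defuzzification operators may differ. `fire_rules`, `RULES_D1` and `RULES_D2` are hypothetical names.

```python
import numpy as np

# Rule matrices transcribed from Tables 1-3; entry k means consequent w_k.
# ASSUMPTION: rows = MF-sf label (L, M, H), columns = second input label (L, M, H).
RULES_D1 = np.array([[1, 1, 1],
                     [2, 2, 1],
                     [3, 3, 3]])  # Tables 1 and 2 share this layout
RULES_D2 = np.array([[1, 2, 3],
                     [1, 2, 3],
                     [3, 3, 3]])  # Table 3

def fire_rules(mu_row, mu_col, rules):
    """Return firing strengths of (w1, w2, w3).

    mu_row, mu_col: (L, M, H) membership degrees of the two inputs.
    Each rule fires with min(mu_row[i], mu_col[j]); rules with the same
    consequent are aggregated with max."""
    mu_row = np.asarray(mu_row, dtype=np.float64)
    mu_col = np.asarray(mu_col, dtype=np.float64)
    strength = np.minimum.outer(mu_row, mu_col)  # 3x3 rule activations
    return np.array([strength[rules == k].max(initial=0.0) for k in (1, 2, 3)])
```

For example, with MF-sf degrees (0, 0.6, 0.4) and second-input degrees (0.2, 0.8, 0), the Table 1 rule base gives strengths (0, 0.6, 0.4) for (w1, w2, w3), while the Table 3 rule base gives (0.2, 0.6, 0.4).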

Table 4. Input membership function parameters of fuzzy logic discriminator 1

| Membership function | l | m | u |
| --- | --- | --- | --- |
| $\mu_{\text{in\_1}}^{\text{L}}$ | −102 | 76.5 | 153 |
| $\mu_{\text{in\_1}}^{\text{M}}$ | 0 | 127.5 | 255 |
| $\mu_{\text{in\_1}}^{\text{H}}$ | 102 | 178.5 | 357 |
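The (l, m, u) columns of Table 4 read naturally as triangular membership functions over the 8-bit gray-level range. Assuming the standard triangular form (zero outside [l, u], rising linearly to 1 at m and falling back to 0 at u), the input fuzzification of discriminator 1 can be sketched as follows; `tri_mf`, `fuzzify_in1` and `TRI_IN1` are hypothetical names.

```python
import numpy as np

# (l, m, u) parameters from Table 4 (inputs assumed to be gray levels in [0, 255])
TRI_IN1 = {"L": (-102.0, 76.5, 153.0),
           "M": (0.0, 127.5, 255.0),
           "H": (102.0, 178.5, 357.0)}

def tri_mf(x, l, m, u):
    """Triangular membership: 0 outside [l, u], linear up to 1 at m, then down."""
    x = np.asarray(x, dtype=np.float64)
    rise = (x - l) / (m - l)
    fall = (u - x) / (u - m)
    return np.clip(np.minimum(rise, fall), 0.0, 1.0)

def fuzzify_in1(x):
    """Membership degrees of x in the L, M and H sets of discriminator 1."""
    return {label: tri_mf(x, *params) for label, params in TRI_IN1.items()}
```

At the mid-gray value 127.5 this gives M = 1 and L = H = 1/3, reflecting the mirror symmetry of the L and H rows of Table 4 about 127.5.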

Table 5. Output membership function parameters of fuzzy logic discriminator 1

| Membership function | a | b | c | d |
| --- | --- | --- | --- | --- |
| $\mu_{\text{out\_1}}^{\text{L}}$ | −0.36 | −0.04 | 0.04 | 0.06 |
| $\mu_{\text{out\_1}}^{\text{M}}$ | | | | |
| $\mu_{\text{out\_1}}^{\text{H}}$ | 0.94 | 0.96 | 1.04 | 1.36 |

Table 6. Input membership function parameters of fuzzy logic discriminator 2

| Membership function | a | b | c | d |
| --- | --- | --- | --- | --- |
| $\mu_{\text{in\_2}}^{\text{L}}$ | −0.2711 | −0.1289 | 0.05 | 0.5 |
| $\mu_{\text{in\_2}}^{\text{M}}$ | | | | |
| $\mu_{\text{in\_2}}^{\text{H}}$ | 0.5 | 0.95 | 1.129 | 1.271 |
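Tables 5 to 7 list four parameters (a, b, c, d) per label, which matches the usual trapezoidal membership function. A minimal sketch assuming that standard form (zero outside [a, d], one on [b, c], linear on both shoulders, and requiring a < b ≤ c < d) is given below, instantiated with the L and H rows of Table 6. The M rows of Tables 5 to 7 are not recoverable from the extracted text and are therefore left out; `trap_mf` and `TRAP_IN2` are hypothetical names.

```python
import numpy as np

def trap_mf(x, a, b, c, d):
    """Trapezoidal membership: 0 outside [a, d], 1 on [b, c], linear in between.
    Requires a < b <= c < d (no vertical shoulders)."""
    x = np.asarray(x, dtype=np.float64)
    rise = (x - a) / (b - a)
    fall = (d - x) / (d - c)
    return np.clip(np.minimum(np.minimum(rise, fall), 1.0), 0.0, 1.0)

# L and H rows of Table 6 (input of discriminator 2); the M row is missing.
TRAP_IN2 = {"L": (-0.2711, -0.1289, 0.05, 0.5),
            "H": (0.5, 0.95, 1.129, 1.271)}
```

The same function applies to the output functions of Tables 5 and 7, for example `trap_mf(y, -0.36, -0.04, 0.04, 0.06)` for $\mu_{\text{out\_1}}^{\text{L}}$.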

Table 7. Output membership function parameters of fuzzy logic discriminator 2

| Membership function | a | b | c | d |
| --- | --- | --- | --- | --- |
| $\mu_{\text{out\_2}}^{\text{L}}$ | −0.36 | −0.04 | 0.025 | 0.05 |
| $\mu_{\text{out\_2}}^{\text{M}}$ | | | | |
| $\mu_{\text{out\_2}}^{\text{H}}$ | 0.95 | 0.975 | 1.04 | 1.36 |

Table 8. Comparison of objective metrics of different fusion methods on the TNO dataset

| Method | E | $\text{FMI}_{w}$ | $\text{FMI}_{dct}$ | SSIM-F | $Q^{AB/F}$ | SD |
| --- | --- | --- | --- | --- | --- | --- |
| LatLRR[39] | 6.5075 | 0.3795 | 0.3264 | 0.7419 | 0.4102 | 28.0106 |
| GTF[37] | 6.5799 | 0.4393 | **0.4280** | 0.7586 | 0.4445 | 28.2344 |
| DeepFuse[38] | 6.9073 | 0.4216 | 0.4118 | 0.7532 | 0.4590 | 36.8281 |
| MDLatLRR[40] | 6.8711 | 0.4459 | 0.4001 | 0.7979 | 0.5004 | 34.4431 |
| FusionGAN[12] | 6.3708 | 0.3681 | 0.3573 | 0.5223 | 0.2155 | 25.0899 |
| DenseFuse[9] | 6.8381 | 0.4272 | 0.4166 | 0.7550 | 0.4610 | 36.4431 |
| U2Fusion[15] | 6.8546 | 0.3624 | 0.3400 | 0.7522 | 0.4451 | 32.8386 |
| MFD-APFS | **7.0301** | **0.5242** | **0.4280** | **0.9669** | **0.6407** | **48.7431** |

Note: Bold values indicate the best results.
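Of the metrics in Tables 8 and 9, E and SD are the simplest to reproduce. The sketch below uses their common definitions: E as the Shannon entropy (in bits) of the 256-bin gray-level histogram (cf. [41]) and SD as the population standard deviation of pixel intensities. The exact variants used to produce the tables (bin count, normalization, handling of color images) are assumptions here, and `entropy` and `std_dev` are hypothetical helper names.

```python
import numpy as np

def entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram of an 8-bit image."""
    g = np.clip(np.rint(np.asarray(img, dtype=np.float64)), 0, levels - 1).astype(np.int64)
    hist = np.bincount(g.ravel(), minlength=levels)
    p = hist[hist > 0] / g.size          # probabilities of occupied bins only
    return float(-(p * np.log2(p)).sum())

def std_dev(img):
    """Population standard deviation (ddof=0) of pixel intensities."""
    return float(np.asarray(img, dtype=np.float64).std())
```

Higher values of both indicate more information and more contrast in the fused image, which is why the bold entries in the tables mark the column maxima.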

Table 9. Comparison of objective metrics of different fusion methods on the RoadScenes dataset

| Method | E | $\text{FMI}_{w}$ | $\text{FMI}_{dct}$ | SSIM-F | $Q^{AB/F}$ | SD |
| --- | --- | --- | --- | --- | --- | --- |
| LatLRR[39] | 6.8162 | 0.3408 | 0.2880 | 0.6855 | 0.4200 | 36.7768 |
| GTF[37] | **7.7020** | 0.3811 | **0.4184** | 0.7032 | 0.4454 | **62.4172** |
| DeepFuse[38] | 7.3193 | 0.4047 | 0.3646 | 0.7273 | 0.4771 | 50.7207 |
| MDLatLRR[40] | 6.5869 | 0.3900 | 0.3457 | 0.6898 | 0.4826 | 41.3768 |
| FusionGAN[12] | 7.3122 | 0.3691 | 0.3717 | 0.5695 | 0.3296 | 48.1683 |
| DenseFuse[9] | 7.3185 | 0.4074 | 0.3657 | 0.7293 | 0.4786 | 50.6692 |
| U2Fusion[15] | 7.2966 | 0.4034 | 0.3524 | 0.7432 | 0.4770 | 47.1512 |
| MFD-APFS | 7.0024 | **0.4990** | 0.3167 | **0.9185** | **0.5323** | 47.4502 |

Note: Bold values indicate the best results.
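The improvements quoted in the abstract can be checked against Tables 8 and 9 by subtracting the best baseline score from the MFD-APFS score for each metric. The sketch below transcribes the SSIM-F and $Q^{AB/F}$ columns and does exactly that; "best baseline" means the highest score among the seven compared methods, which is an interpretation of "improved by" rather than something the text states.

```python
# SSIM-F and Q^{AB/F} columns transcribed from Tables 8 and 9.
METRICS = ("SSIM-F", "QAB/F")
TABLES = {
    "TNO": {"LatLRR": (0.7419, 0.4102), "GTF": (0.7586, 0.4445),
            "DeepFuse": (0.7532, 0.4590), "MDLatLRR": (0.7979, 0.5004),
            "FusionGAN": (0.5223, 0.2155), "DenseFuse": (0.7550, 0.4610),
            "U2Fusion": (0.7522, 0.4451), "MFD-APFS": (0.9669, 0.6407)},
    "RoadScenes": {"LatLRR": (0.6855, 0.4200), "GTF": (0.7032, 0.4454),
                   "DeepFuse": (0.7273, 0.4771), "MDLatLRR": (0.6898, 0.4826),
                   "FusionGAN": (0.5695, 0.3296), "DenseFuse": (0.7293, 0.4786),
                   "U2Fusion": (0.7432, 0.4770), "MFD-APFS": (0.9185, 0.5323)},
}

def margins(table, ours="MFD-APFS"):
    """For each metric, return (best baseline name, ours minus best baseline)."""
    result = {}
    for i, metric in enumerate(METRICS):
        best = max((m for m in table if m != ours), key=lambda m: table[m][i])
        result[metric] = (best, round(table[ours][i] - table[best][i], 4))
    return result

for dataset, table in TABLES.items():
    print(dataset, margins(table))
```

This reproduces 0.169 and 0.1403 on TNO (both relative to MDLatLRR) and 0.1753 for SSIM-F on RoadScenes (relative to U2Fusion). For $Q^{AB/F}$ on RoadScenes, the margin over the strongest baseline, MDLatLRR (0.4826), is 0.0497; the 0.0537 quoted in the abstract equals the margin over DenseFuse (0.4786).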

[1] MA W H, WANG K, LI J W, et al. Infrared and visible image fusion technology and application: a review[J]. Sensors, 2023, 23(2): 599. doi: 10.3390/s23020599

[2] LI H, MANJUNATH B S, MITRA S K. Multisensor image fusion using the wavelet transform[J]. Graphical Models and Image Processing, 1995, 57(3): 235-245. doi: 10.1006/gmip.1995.1022

[3] SINGH S, SINGH H, GEHLOT A, et al. IR and visible image fusion using DWT and bilateral filter[J]. Microsystem Technologies, 2023, 29(4): 457-467. doi: 10.1007/s00542-022-05315-7

[4] REN Z G, REN G Q, WU D H. Fusion of infrared and visible images based on discrete cosine wavelet transform and high pass filter[J]. Soft Computing, 2023, 27(18): 13583-13594. doi: 10.1007/s00500-022-07175-9

[5] AGHAMALEKI J A, GHORBANI A. Image fusion using dual tree discrete wavelet transform and weights optimization[J]. The Visual Computer, 2023, 39(3): 1181-1191. doi: 10.1007/s00371-021-02396-9

[6] HUANG Z H, LI X, WANG L, et al. Spatially adaptive multi-scale image enhancement based on nonsubsampled contourlet transform[J]. Infrared Physics & Technology, 2022, 121: 104014.

[7] YANG C X, HE Y N, SUN C, et al. Infrared and visible image fusion based on QNSCT and guided filter[J]. Optik, 2022, 253: 168592. doi: 10.1016/j.ijleo.2022.168592

[8] XU M L, TANG L F, ZHANG H, et al. Infrared and visible image fusion via parallel scene and texture learning[J]. Pattern Recognition, 2022, 132: 108929. doi: 10.1016/j.patcog.2022.108929

[9] LI H, WU X J. DenseFuse: a fusion approach to infrared and visible images[J]. IEEE Transactions on Image Processing, 2019, 28(5): 2614-2623. doi: 10.1109/TIP.2018.2887342

[10] TANG L F, YUAN J T, ZHANG H, et al. PIAFusion: a progressive infrared and visible image fusion network based on illumination aware[J]. Information Fusion, 2022, 83: 79-92.

[11] TANG L F, XIANG X Y, ZHANG H, et al. DIVFusion: darkness-free infrared and visible image fusion[J]. Information Fusion, 2023, 91: 477-493. doi: 10.1016/j.inffus.2022.10.034

[12] MA J Y, YU W, LIANG P W, et al. FusionGAN: a generative adversarial network for infrared and visible image fusion[J]. Information Fusion, 2019, 48: 11-26. doi: 10.1016/j.inffus.2018.09.004

[13] YANG Y, LIU J X, HUANG S Y, et al. Infrared and visible image fusion via texture conditional generative adversarial network[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2021, 31(12): 4771-4783. doi: 10.1109/TCSVT.2021.3054584

[14] YANG X, HUO H T, LI J, et al. DSG-Fusion: infrared and visible image fusion via generative adversarial networks and guided filter[J]. Expert Systems with Applications, 2022, 200: 116905. doi: 10.1016/j.eswa.2022.116905

[15] XU H, MA J Y, JIANG J J, et al. U2Fusion: a unified unsupervised image fusion network[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 44(1): 502-518. doi: 10.1109/TPAMI.2020.3012548

[16] YANG Y, LIU J X, HUANG S Y, et al. VMDM-fusion: a saliency feature representation method for infrared and visible image fusion[J]. Signal, Image and Video Processing, 2021, 15(6): 1221-1229. doi: 10.1007/s11760-021-01852-2

[17] ZHANG C F, ZHANG Z Y, FENG Z L. Image fusion using online convolutional sparse coding[J]. Journal of Ambient Intelligence and Humanized Computing, 2023, 14(10): 13559-13570. doi: 10.1007/s12652-022-03822-z

[18] LI H Y, ZHANG C F, HE S D, et al. A novel fusion method based on online convolutional sparse coding with sample-dependent dictionary for visible-infrared images[J]. Arabian Journal for Science and Engineering, 2023, 48(8): 10605-10615. doi: 10.1007/s13369-023-07716-w

[19] TAO T W, LIU M X, HOU Y K, et al. Latent low-rank representation with sparse consistency constraint for infrared and visible image fusion[J]. Optik, 2022, 261: 169102. doi: 10.1016/j.ijleo.2022.169102

[20] GUO Z Y, YU X T, DU Q L. Infrared and visible image fusion based on saliency and fast guided filtering[J]. Infrared Physics & Technology, 2022, 123: 104178.

[21] YANG Y, LIU J X, HUANG S Y, et al. Infrared and visible image fusion based on modal feature fusion network and dual visual decision[C]//Proceedings of the IEEE International Conference on Multimedia and Expo. Piscataway: IEEE Press, 2021: 1-6.

[22] MA K D, LI H, YONG H W, et al. Robust multi-exposure image fusion: a structural patch decomposition approach[J]. IEEE Transactions on Image Processing, 2017, 26(5): 2519-2532. doi: 10.1109/TIP.2017.2671921

[23] WANG Z, BOVIK A C, SHEIKH H R, et al. Image quality assessment: from error visibility to structural similarity[J]. IEEE Transactions on Image Processing, 2004, 13(4): 600-612. doi: 10.1109/TIP.2003.819861

[24] YANG Y, QUE Y, HUANG S Y, et al. Multimodal sensor medical image fusion based on type-2 fuzzy logic in NSCT domain[J]. IEEE Sensors Journal, 2016, 16(10): 3735-3745. doi: 10.1109/JSEN.2016.2533864

[25] ZAND M D, ANSARI A H, LUCAS C, et al. Risk assessment of coronary arteries heart disease based on neuro-fuzzy classifiers[C]//Proceedings of the 17th Iranian Conference of Biomedical Engineering. Piscataway: IEEE Press, 2010: 1-4.

[26] RAHMAN M A, LIU S, WONG C Y, et al. Multi-focal image fusion using degree of focus and fuzzy logic[J]. Digital Signal Processing, 2017, 60: 1-19. doi: 10.1016/j.dsp.2016.08.004

[27] BALASUBRAMANIAM P, ANANTHI V P. Image fusion using intuitionistic fuzzy sets[J]. Information Fusion, 2014, 20: 21-30. doi: 10.1016/j.inffus.2013.10.011

[28] MANCHANDA M, SHARMA R. A novel method of multimodal medical image fusion using fuzzy transform[J]. Journal of Visual Communication and Image Representation, 2016, 40: 197-217. doi: 10.1016/j.jvcir.2016.06.021

[29] RIZZI A, GATTA C, PIACENTINI B, et al. Human-visual-system-inspired tone mapping algorithm for HDR images[C]//Proceedings of the Human Vision and Electronic Imaging IX. Bellingham: SPIE, 2004.

[30] WANG Z J, TONG X Y. Consistency analysis and group decision making based on triangular fuzzy additive reciprocal preference relations[J]. Information Sciences, 2016, 361: 29-47.

[31] CHAIRA T. A rank ordered filter for medical image edge enhancement and detection using intuitionistic fuzzy set[J]. Applied Soft Computing, 2012, 12(4): 1259-1266. doi: 10.1016/j.asoc.2011.12.011

[32] YANG Y, WU J H, HUANG S Y, et al. Multimodal medical image fusion based on fuzzy discrimination with structural patch decomposition[J]. IEEE Journal of Biomedical and Health Informatics, 2019, 23(4): 1647-1660. doi: 10.1109/JBHI.2018.2869096

[33] CUI G M, FENG H J, XU Z H, et al. Detail preserved fusion of visible and infrared images using regional saliency extraction and multi-scale image decomposition[J]. Optics Communications, 2015, 341: 199-209. doi: 10.1016/j.optcom.2014.12.032

[34] WANG Z, SIMONCELLI E P, BOVIK A C. Multiscale structural similarity for image quality assessment[C]//Proceedings of the Asilomar Conference on Signals, Systems & Computers. Piscataway: IEEE Press, 2003: 1398-1402.

[35] TOET. TNO image fusion dataset[EB/OL]. (2022-10-15)[2023-06-01]. http://figshare.com/articles/TN_Image_Fusion_Dataset/1008029.

[36] XU H, MA J Y, LE Z L, et al. FusionDN: a unified densely connected network for image fusion[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2020, 34(7): 12484-12491. doi: 10.1609/aaai.v34i07.6936

[37] MA J Y, CHEN C, LI C, et al. Infrared and visible image fusion via gradient transfer and total variation minimization[J]. Information Fusion, 2016, 31: 100-109. doi: 10.1016/j.inffus.2016.02.001

[38] PRABHAKAR K R, SRIKAR V S, BABU R V. DeepFuse: a deep unsupervised approach for exposure fusion with extreme exposure image pairs[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2017: 4724-4732.

[39] LI H, WU X J. Multi-focus image fusion using dictionary learning and low-rank representation[C]//Proceedings of the International Conference on Image and Graphics. Berlin: Springer, 2017: 675-686.

[40] LI H, WU X J, KITTLER J. MDLatLRR: a novel decomposition method for infrared and visible image fusion[J]. IEEE Transactions on Image Processing, 2020, 29: 4733-4746.

[41] VAN AARDT J. Assessment of image fusion procedures using entropy, image quality, and multispectral classification[J]. Journal of Applied Remote Sensing, 2008, 2(1): 023522. doi: 10.1117/1.2945910

[42] HAGHIGHAT M, RAZIAN M A. Fast-FMI: non-reference image fusion metric[C]//Proceedings of the IEEE 8th International Conference on Application of Information and Communication Technologies. Piscataway: IEEE Press, 2014: 1-3.

[43] YANG C, ZHANG J Q, WANG X R, et al. A novel similarity based quality metric for image fusion[J]. Information Fusion, 2008, 9(2): 156-160. doi: 10.1016/j.inffus.2006.09.001

[44] QU G H, ZHANG D L, YAN P F. Information measure for performance of image fusion[J]. Electronics Letters, 2002, 38(7): 313. doi: 10.1049/el:20020212

[45] MA J Y, MA Y, LI C. Infrared and visible image fusion methods and applications: a survey[J]. Information Fusion, 2019, 45: 153-178. doi: 10.1016/j.inffus.2018.02.004
