
摘要:

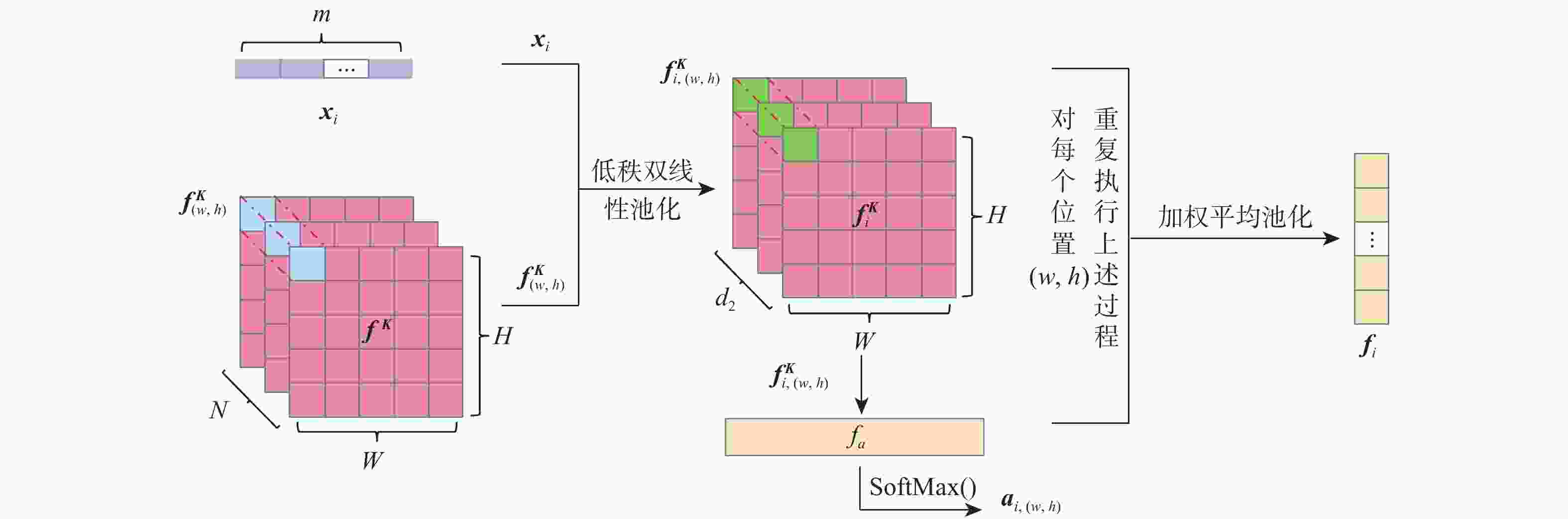

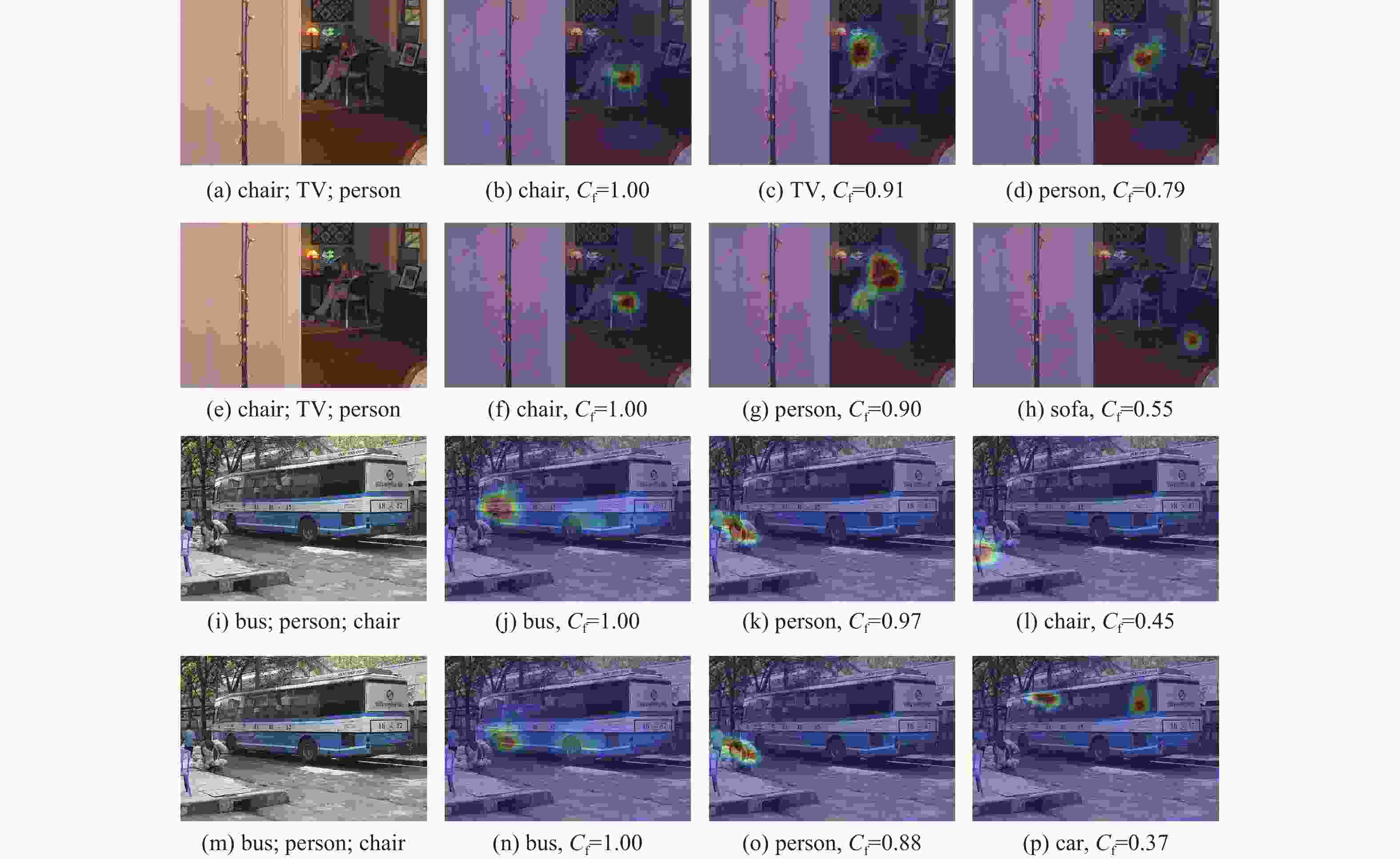

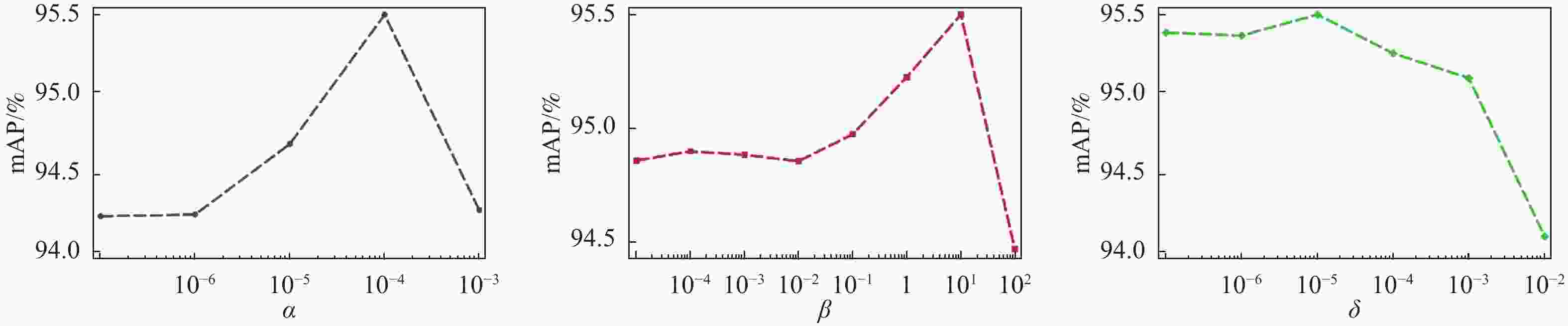

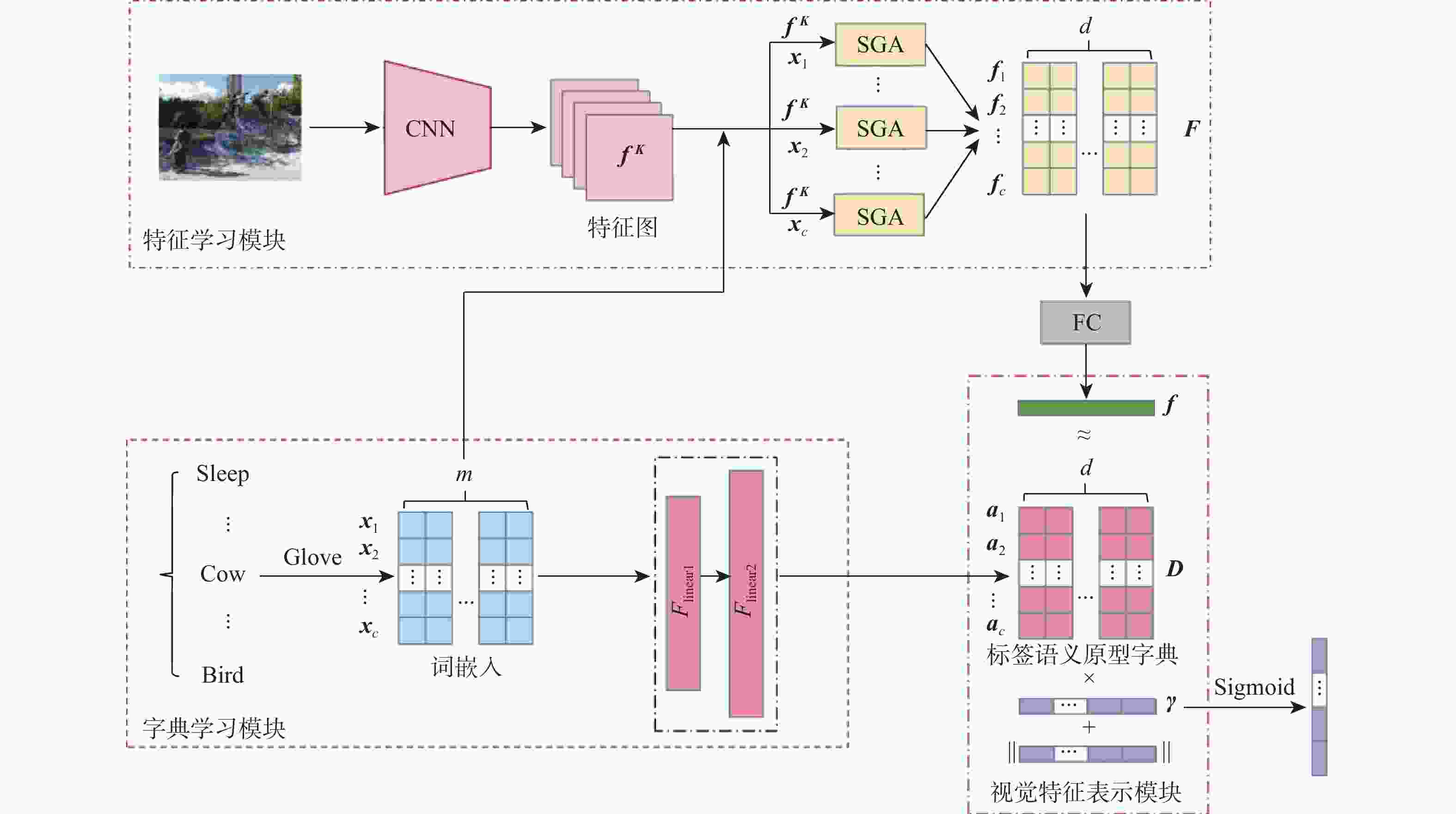

多标签图像分类旨在为给定的输入图像预测一组标签,基于语义信息的研究主要利用语义和视觉空间的相关性指导特征提取过程生成有效的特征表示,或利用语义和标签空间的相关性学习能够捕获标签相关性的加权分类器,未能同时建模语义、视觉和标签空间相关性。针对该问题,提出一种基于语义信息引导的多标签图像分类 (SIG-MLIC)方法,SIG-MLIC方法可以同时利用语义、视觉和标签空间,通过语义引导的注意力(SGA)机制增强标签与图像区域的关联性而生成语义特定的特征表示,同时利用标签的语义信息生成一个具有标签相关性约束的语义字典对视觉特征进行重建,获得归一化的表示系数作为标签出现的概率。在3个标准的多标签图像分类数据集上的实验结果表明:SIG-MLIC方法中的注意力机制和字典学习可以有效提高分类性能,验证了所提方法的有效性。

Abstract: Multi-label image classification aims to predict a set of labels for a given input image. Existing studies based on semantic information take one of two routes. Some use the correlation between the semantic and visual spaces to guide feature extraction toward effective feature representations. Others use the correlation between the semantic and label spaces to learn weighted classifiers that capture label correlations. Most of these works treat semantic information only as an aid to exploiting either the visual space or the label space, and few model the correlations among the semantic, visual, and label spaces simultaneously. To address this problem, a semantic information-guided multi-label image classification (SIG-MLIC) method is proposed, which exploits all three spaces simultaneously. First, a semantic-guided attention (SGA) mechanism strengthens the association between labels and image regions to generate semantic-specific feature representations. Second, the semantic information of the labels is used to build a semantic dictionary with label-correlation constraints. This dictionary reconstructs the visual features, and the normalized representation coefficients are taken as the probabilities of label occurrence. Experimental results on three standard multi-label image classification datasets show that both the attention mechanism and the dictionary learning in SIG-MLIC effectively improve classification performance, verifying the effectiveness of the proposed method.
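The abstract names two mechanisms but does not give their equations here. The sketch below offers one hypothetical reading of each in plain Python, and it is not the authors' implementation. `semantic_guided_attention` assumes dot-product attention, where each label's embedding has already been projected into the visual feature space. `dictionary_coefficients` assumes the label-correlation constraint is a graph-Laplacian penalty added to a ridge-regularized reconstruction. Setting the gradient of ||x − Dᵀα||² + λ||α||² + μαᵀLα to zero then gives the closed form (DDᵀ + λI + μL)α = Dx. The sigmoid normalization, the weights `lam` and `mu`, the Laplacian, and all function names are assumptions.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def semantic_guided_attention(regions, label_queries):
    """Hypothetical SGA sketch: for each label query q_c (assumed already projected
    into the visual feature space), weight the R region features f_r by
    softmax_r(q_c . f_r) and sum them into one label-specific feature."""
    dim = len(regions[0])
    features = []
    for q in label_queries:
        weights = softmax([dot(q, f) for f in regions])
        features.append([sum(w * f[j] for w, f in zip(weights, regions)) for j in range(dim)])
    return features


def solve(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def dictionary_coefficients(atoms, x, lam=0.1, mu=0.0, laplacian=None):
    """Hypothetical reconstruction: alpha minimises
    ||x - D^T alpha||^2 + lam*||alpha||^2 + mu*alpha^T L alpha,
    where the rows of D are per-label dictionary atoms and L is an assumed
    (symmetric) label-graph Laplacian. Solves (D D^T + lam*I + mu*L) alpha = D x."""
    c = len(atoms)
    a = [[dot(atoms[i], atoms[j])
          + (lam if i == j else 0.0)
          + (mu * laplacian[i][j] if laplacian is not None else 0.0)
          for j in range(c)] for i in range(c)]
    return solve(a, [dot(atom, x) for atom in atoms])


def label_probabilities(alpha):
    """Assumed normalisation: squash each coefficient independently with a sigmoid."""
    return [1.0 / (1.0 + math.exp(-a)) for a in alpha]


regions = [[1.0, 0.0], [0.0, 1.0]]  # two toy region features
print(semantic_guided_attention(regions, [[10.0, 0.0]]))  # the label attends almost entirely to region 0

# Toy dictionary with two correlated labels; alpha is roughly [0.616, 0.293]:
# the Laplacian term pulls the second label's coefficient above zero.
alpha = dictionary_coefficients([[1.0, 0.0], [0.0, 1.0]], [1.0, 0.0],
                                lam=0.1, mu=1.0, laplacian=[[1.0, -1.0], [-1.0, 1.0]])
print(label_probabilities(alpha))
```

With `mu=0` the sketch reduces to plain ridge-regularized coding. The Laplacian term is just one simple way to make correlated labels share coefficient mass.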


Table 1. Comparison with different methods on the Pascal VOC 2007 dataset (per-class AP and mAP, %)

| Method | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | motor | person | plant | sheep | sofa | train | TV | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CPCL[6] | 99.6 | 98.6 | 98.5 | 98.8 | 81.9 | 95.1 | 97.8 | 98.2 | 83.0 | 95.5 | 85.5 | 98.4 | 98.5 | 97.0 | 99.0 | 86.6 | 97.0 | 84.9 | 99.1 | 94.3 | 94.4 |
| SSGRL[7]* | 99.5 | 97.1 | 97.6 | 97.8 | 82.6 | 94.8 | 96.7 | 98.1 | 78.0 | 97.0 | 85.6 | 97.8 | 98.3 | 96.4 | 98.8 | 84.9 | 96.5 | 79.8 | 98.4 | 92.8 | 93.4 |
| SSGRL(pre)[7]* | 99.7 | 98.4 | 98.0 | 97.6 | 85.7 | 96.2 | 98.2 | 98.8 | 82.0 | 98.1 | 89.7 | 98.8 | 98.7 | 97.0 | 99.0 | 86.9 | 98.1 | 85.8 | 99.0 | 93.7 | 95.0 |
| MSRN[19] | 100.0 | 98.8 | 98.9 | 99.1 | 81.6 | 95.5 | 98.0 | 98.2 | 84.4 | 96.6 | 87.5 | 98.6 | 98.6 | 97.2 | 99.1 | 87.0 | 97.6 | 86.5 | 99.4 | 94.4 | 94.9 |
| MSRN(pre)[19] | 99.7 | 98.9 | 98.7 | 99.1 | 86.6 | 97.9 | 98.5 | 98.9 | 86.0 | 98.7 | 89.1 | 99.0 | 99.1 | 97.3 | 99.2 | 90.2 | 99.2 | 89.7 | 99.8 | 95.3 | 96.0 |
| ML-GCN[8] | 99.5 | 98.5 | 98.6 | 98.1 | 80.8 | 94.6 | 97.2 | 98.2 | 82.3 | 95.7 | 86.4 | 98.2 | 98.4 | 96.7 | 99.0 | 84.7 | 96.7 | 84.3 | 98.9 | 93.7 | 94.0 |
| DSDL[29] | 99.8 | 98.7 | 98.4 | 97.9 | 81.9 | 95.4 | 97.6 | 98.3 | 83.3 | 95.0 | 88.6 | 98.0 | 97.9 | 95.8 | 99.0 | 86.6 | 95.9 | 86.4 | 98.6 | 94.4 | 94.4 |
| MCAR[21] | 99.7 | 99.0 | 98.5 | 98.2 | 85.4 | 96.9 | 97.4 | 98.9 | 83.7 | 95.0 | 88.8 | 99.1 | 98.2 | 95.1 | 99.1 | 84.8 | 97.1 | 87.8 | 98.3 | 94.8 | 94.8 |
| SIG-MLIC | 99.9 | 98.6 | 98.9 | 98.3 | 83.6 | 98.2 | 97.9 | 99.1 | 82.7 | 97.5 | 89.6 | 99.3 | 99.1 | 98.8 | 99.1 | 86.9 | 99.5 | 86.6 | 99.4 | 97.2 | 95.5 |
| SIG-MLIC(pre) | 100.0 | 99.1 | 99.4 | 98.7 | 88.2 | 98.2 | 98.8 | 99.6 | 85.0 | 98.8 | 92.0 | 99.7 | 99.5 | 99.3 | 99.3 | 88.8 | 99.7 | 89.4 | 99.6 | 97.2 | 96.5 |

Note: Bold numbers are the best values and underlined numbers are the second-best values. "pre" denotes a model pre-trained on the MS-COCO dataset, and * denotes an input image size of 576×576 pixels.
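Because mAP is the mean of the per-class APs, the mAP column can be checked against each row of Table 1. The sketch below, in plain Python, computes a non-interpolated AP for one class, defined as the mean of precision@k over the ranks k at which positive images occur. It then averages per-class APs. Averaging the 20 per-class APs of the SIG-MLIC row gives 95.51, which matches the reported 95.5. This section does not state which AP variant the compared papers use. The original VOC 2007 devkit used 11-point interpolated AP, so scores from this non-interpolated sketch may differ slightly from official benchmark numbers.

```python
def average_precision(scores, labels):
    """Non-interpolated AP for one class: rank images by score (high to low) and
    average precision@k over every rank k that holds a positive image."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for k, (_, is_positive) in enumerate(ranked, start=1):
        if is_positive:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class APs."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy class with positives ranked 1st and 3rd: AP = (1/1 + 2/3) / 2
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))

# Per-class AP (%) of SIG-MLIC on Pascal VOC 2007, aero ... TV, copied from Table 1
sig_mlic_voc2007 = [99.9, 98.6, 98.9, 98.3, 83.6, 98.2, 97.9, 99.1, 82.7, 97.5,
                    89.6, 99.3, 99.1, 98.8, 99.1, 86.9, 99.5, 86.6, 99.4, 97.2]
print(round(mean_average_precision(sig_mlic_voc2007), 1))  # 95.5, as in the mAP column
```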

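Table 2. Comparison with different methods on the Pascal VOC 2012 dataset (per-class AP and mAP, %)

| Method | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | motor | person | plant | sheep | sofa | train | TV | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FeV+LV[35] | 98.4 | 92.8 | 93.4 | 90.7 | 74.9 | 93.2 | 90.2 | 96.1 | 78.2 | 89.8 | 80.6 | 95.7 | 96.1 | 95.3 | 97.5 | 73.1 | 91.2 | 75.4 | 97.0 | 88.2 | 89.4 |
| RCP[36] | 99.3 | 92.2 | 97.5 | 94.9 | 82.3 | 94.1 | 92.4 | 98.5 | 83.8 | 93.5 | 83.1 | 98.1 | 97.3 | 96.0 | 98.8 | 77.7 | 95.1 | 79.4 | 97.7 | 92.4 | 92.2 |
| RMIC[37] | 98.0 | 85.5 | 92.6 | 88.7 | 64.0 | 86.8 | 82.0 | 94.9 | 72.7 | 83.1 | 73.4 | 95.2 | 91.7 | 90.8 | 95.5 | 58.3 | 87.6 | 70.6 | 93.8 | 83.0 | 84.4 |
| SSGRL[7]* | 99.5 | 95.1 | 97.4 | 96.4 | 85.8 | 94.5 | 93.7 | 98.9 | 86.7 | 96.3 | 84.6 | 98.9 | 98.6 | 96.2 | 98.7 | 82.2 | 98.2 | 84.2 | 98.1 | 93.5 | 93.9 |
| SSGRL(pre)[7]* | 99.7 | 96.1 | 97.7 | 96.5 | 86.9 | 95.8 | 95.0 | 98.9 | 88.3 | 97.6 | 87.4 | 99.1 | 99.2 | 97.3 | 99.0 | 84.8 | 98.3 | 85.8 | 99.2 | 94.1 | 94.8 |
| MSRN[19] | 99.7 | 95.2 | 98.3 | 96.3 | 84.8 | 96.5 | 93.3 | 99.6 | 87.4 | 96.0 | 86.3 | 98.9 | 98.3 | 96.9 | 98.8 | 80.6 | 97.7 | 80.5 | 99.4 | 94.7 | 94.0 |
| MSRN(pre)[19] | 99.8 | 96.3 | 98.4 | 96.8 | 85.2 | 97.5 | 95.2 | 99.6 | 88.0 | 96.6 | 89.8 | 99.0 | 98.8 | 96.8 | 99.0 | 84.5 | 97.1 | 85.8 | 99.3 | 95.9 | 95.0 |
| DSDL[29] | 99.4 | 95.3 | 97.6 | 95.7 | 83.5 | 94.8 | 93.9 | 98.5 | 85.7 | 94.5 | 83.8 | 98.4 | 97.7 | 95.9 | 98.5 | 80.6 | 95.7 | 82.3 | 98.2 | 93.2 | 93.2 |
| MCAR[21] | 99.6 | 97.1 | 98.3 | 96.6 | 87.0 | 95.5 | 94.4 | 98.8 | 87.0 | 96.9 | 85.0 | 98.7 | 98.3 | 97.3 | 99.0 | 83.8 | 96.8 | 83.7 | 98.3 | 93.5 | 94.3 |
| SIG-MLIC | 99.9 | 96.4 | 98.4 | 97.3 | 86.5 | 96.5 | 95.4 | 99.3 | 87.9 | 98.0 | 85.0 | 98.9 | 99.2 | 97.9 | 98.5 | 85.5 | 98.5 | 83.7 | 99.3 | 95.0 | 94.8 |
| SIG-MLIC(pre) | 100.0 | 97.8 | 98.8 | 98.1 | 88.9 | 97.5 | 96.0 | 99.5 | 89.7 | 98.8 | 88.1 | 99.3 | 99.7 | 98.4 | 98.9 | 88.4 | 98.7 | 87.0 | 99.6 | 96.7 | 96.0 |

Note: Bold numbers are the best values in each column and underlined numbers are the second-best values in each column. "pre" denotes a model pre-trained on the MS-COCO dataset, and * denotes an input image size of 576×576 pixels.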

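Table 3. Comparison with different methods on the MS-COCO dataset (%)

| Method | mAP | CP (Top-3) | CP (All) | CR (Top-3) | CR (All) | CF1 (Top-3) | CF1 (All) | OP (Top-3) | OP (All) | OR (Top-3) | OR (All) | OF1 (Top-3) | OF1 (All) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CPCL[6] | 82.8 | 89.0 | 85.6 | 63.5 | 71.1 | 74.1 | 77.6 | 90.5 | 86.1 | 65.9 | 74.5 | 76.3 | 79.9 |
| SSGRL[7]* | 83.8 | 91.9 | 89.9 | 62.5 | 68.5 | 72.7 | 76.8 | 93.8 | 91.3 | 64.1 | 70.8 | 76.2 | 79.7 |
| MSRN[19] | 83.4 | 84.5 | 86.5 | 72.9 | 71.5 | 78.3 | 78.3 | 84.3 | 86.1 | 76.8 | 75.5 | 80.4 | 80.4 |
| ML-GCN[8] | 83.0 | 89.2 | 85.1 | 64.1 | 72.0 | 74.6 | 78.0 | 90.5 | 85.8 | 66.5 | 75.4 | 76.7 | 80.3 |
| DSDL[29] | 81.7 | 88.1 | 84.1 | 62.9 | 70.4 | 73.4 | 76.7 | 89.6 | 85.1 | 65.3 | 73.9 | 75.6 | 79.1 |
| MCAR[21] | 83.8 | 88.1 | 85.0 | 65.5 | 72.1 | 75.1 | 78.0 | 91.0 | 88.0 | 66.3 | 73.9 | 76.7 | 80.3 |
| SIG-MLIC | 85.6 | 90.2 | 86.4 | 66.6 | 75.1 | 76.6 | 80.3 | 91.9 | 87.7 | 67.9 | 77.5 | 78.1 | 82.3 |

Note: "Top-3" columns give scores for the top-3 labels and "All" columns give scores for all labels. Underlined numbers are the second-best values in each column.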
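Table 3 reports per-class metrics (CP, CR, CF1) and overall metrics (OP, OR, OF1), each computed over all labels and over the top-3 labels. The plain-Python sketch below shows one common way to compute them. Its conventions are assumptions, not details given in this section:

- Under "All", a label counts as predicted when its score is at least 0.5.
- Under "Top-3", each image's three highest-scoring labels are predicted. Some implementations additionally require these labels to clear the threshold.
- A class with no predictions contributes a precision of 0.

CF1 and OF1 are harmonic means of the corresponding precision and recall, and the table's columns are consistent with this. For CPCL, 2 × 89.0 × 63.5 / (89.0 + 63.5) ≈ 74.1, which is the reported top-3 CF1.

```python
def f1(precision, recall):
    """Harmonic mean, used for both CF1 (from CP, CR) and OF1 (from OP, OR)."""
    return 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0


def multilabel_metrics(scores, targets, threshold=0.5, top_k=None):
    """scores/targets: N x C nested lists (targets are 0/1).
    top_k=None -> 'All' setting (score >= threshold); top_k=3 -> 'Top-3' setting."""
    n_cls = len(scores[0])
    tp, fp, fn = [0] * n_cls, [0] * n_cls, [0] * n_cls
    for s, y in zip(scores, targets):
        if top_k is None:
            predicted = {c for c in range(n_cls) if s[c] >= threshold}
        else:
            predicted = set(sorted(range(n_cls), key=lambda c: s[c], reverse=True)[:top_k])
        for c in range(n_cls):
            if c in predicted and y[c]:
                tp[c] += 1
            elif c in predicted:
                fp[c] += 1
            elif y[c]:
                fn[c] += 1
    # Per-class precision/recall, averaged over classes (0 when undefined).
    cp = sum((tp[c] / (tp[c] + fp[c])) if tp[c] + fp[c] else 0.0 for c in range(n_cls)) / n_cls
    cr = sum((tp[c] / (tp[c] + fn[c])) if tp[c] + fn[c] else 0.0 for c in range(n_cls)) / n_cls
    # Overall precision/recall, pooled over all image-label pairs.
    total_tp, total_fp, total_fn = sum(tp), sum(fp), sum(fn)
    op = total_tp / (total_tp + total_fp) if total_tp + total_fp else 0.0
    orec = total_tp / (total_tp + total_fn) if total_tp + total_fn else 0.0
    return {"CP": cp, "CR": cr, "CF1": f1(cp, cr), "OP": op, "OR": orec, "OF1": f1(op, orec)}


scores = [[0.9, 0.2, 0.6], [0.1, 0.8, 0.4]]
targets = [[1, 0, 0], [0, 1, 1]]
print(multilabel_metrics(scores, targets))           # 'All' setting
print(multilabel_metrics(scores, targets, top_k=1))  # top-1, since this toy example has only 3 classes
print(round(f1(89.0, 63.5), 1))  # 74.1: CPCL's top-3 CF1 in Table 3, from its CP and CR
```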

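Table 4. Ablation study on the Pascal VOC 2007 dataset

| Scheme | Name | mAP/% |
|---|---|---|
| 1 | ResNet-101 | 92.6 |
| 2 | SIG-MLIC w/o D | 93.4 |
| 3 | SIG-MLIC w/o SGA_1 | 95.1 |
| 4 | SIG-MLIC w/o SGA_2 | 89.5 |
| Proposed method | SIG-MLIC | **95.5** |

Note: Bold numbers are the best values.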

[1] GE Z Y, JIANG X H, TONG Z, et al. Multi-label correlation guided feature fusion network for abnormal ECG diagnosis[J]. Knowledge-Based Systems, 2021, 233: 107508. doi: 10.1016/j.knosys.2021.107508

[2] CHEN L, LI Z D, ZENG T, et al. Predicting gene phenotype by multi-label multi-class model based on essential functional features[J]. Molecular Genetics and Genomics, 2021, 296(4): 905-918. doi: 10.1007/s00438-021-01789-8

[3] DING X M, LI B, XIONG W H, et al. Multi-instance multi-label learning combining hierarchical context and its application to image annotation[J]. IEEE Transactions on Multimedia, 2016, 18(8): 1616-1627. doi: 10.1109/TMM.2016.2572000

[4] LIU S L, ZHANG L, YANG X, et al. Query2Label: a simple transformer way to multi-label classification[EB/OL]. (2021-07-22) [2023-06-02]. http://arxiv.org/abs/2107.10834.

[5] WANG J, YANG Y, MAO J H, et al. CNN-RNN: a unified framework for multi-label image classification[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 2285-2294.

[6] ZHOU F T, HUANG S, LIU B, et al. Multi-label image classification via category prototype compositional learning[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(7): 4513-4525. doi: 10.1109/TCSVT.2021.3128054

[7] CHEN T S, XU M X, HUI X L, et al. Learning semantic-specific graph representation for multi-label image recognition[C]//Proceedings of the IEEE International Conference on Computer Vision. Piscataway: IEEE Press, 2019: 522-531.

[8] CHEN Z M, WEI X S, WANG P, et al. Multi-label image recognition with graph convolutional networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2019: 5177-5186.

[9] LI Q, PENG X J, QIAO Y, et al. Learning label correlations for multi-label image recognition with graph networks[J]. Pattern Recognition Letters, 2020, 138: 378-384. doi: 10.1016/j.patrec.2020.07.040

[10] WANG Y T, XIE Y Z, LIU Y, et al. Fast graph convolution network based multi-label image recognition via cross-modal fusion[C]//Proceedings of the 29th ACM International Conference on Information & Knowledge Management. New York: ACM, 2020: 1575-1584.

[11] CHEN B Z, LI J X, LU G M, et al. Label co-occurrence learning with graph convolutional networks for multi-label chest X-ray image classification[J]. IEEE Journal of Biomedical and Health Informatics, 2020, 24(8): 2292-2302. doi: 10.1109/JBHI.2020.2967084

[12] NIU S J, XU Q, ZHU P F, et al. Coupled dictionary learning for multi-label embedding[C]//Proceedings of the International Joint Conference on Neural Networks. Piscataway: IEEE Press, 2019: 1-8.

[13] ZHENG J Y, ZHU W C, ZHU P F. Multi-label quadruplet dictionary learning[C]//Proceedings of the Artificial Neural Networks and Machine Learning. Berlin: Springer, 2020: 119-131.

[14] JING X Y, WU F, LI Z Q, et al. Multi-label dictionary learning for image annotation[J]. IEEE Transactions on Image Processing, 2016, 25(6): 2712-2725. doi: 10.1109/TIP.2016.2549459

[15] EVERINGHAM M, VAN GOOL L, WILLIAMS C K I, et al. The Pascal visual object classes (VOC) challenge[J]. International Journal of Computer Vision, 2010, 88(2): 303-338. doi: 10.1007/s11263-009-0275-4

[16] LIN T-Y, MAIRE M, BELONGIE S, et al. Microsoft COCO: common objects in context[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2014: 740-755.

[17] ZHU Y, KWOK J T, ZHOU Z H. Multi-label learning with global and local label correlation[J]. IEEE Transactions on Knowledge and Data Engineering, 2018, 30(6): 1081-1094. doi: 10.1109/TKDE.2017.2785795

[18] WENG W, WEI B W, KE W, et al. Learning label-specific features with global and local label correlation for multi-label classification[J]. Applied Intelligence, 2023, 53(3): 3017-3033. doi: 10.1007/s10489-022-03386-7

[19] QU X W, CHE H, HUANG J, et al. Multi-layered semantic representation network for multi-label image classification[J]. International Journal of Machine Learning and Cybernetics, 2023, 14(10): 3427-3435.

[20] CHEN T S, WANG Z X, LI G B, et al. Recurrent attentional reinforcement learning for multi-label image recognition[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2017: 6730-6737.

[21] GAO B B, ZHOU H Y. Learning to discover multi-class attentional regions for multi-label image recognition[J]. IEEE Transactions on Image Processing, 2021, 30: 5920-5932. doi: 10.1109/TIP.2021.3088605

[22] WANG Y T, XIE Y Z, ZENG J F, et al. Cross-modal fusion for multi-label image classification with attention mechanism[J]. Computers and Electrical Engineering, 2022, 101: 108002. doi: 10.1016/j.compeleceng.2022.108002

[23] WU T, HUANG Q Q, LIU Z W, et al. Distribution-balanced loss for multi-label classification in long-tailed datasets[C]//Proceedings of the European Conference on Computer Vision. Berlin: Springer, 2020: 162-178.

[24] RIDNIK T, BEN-BARUCH E, ZAMIR N, et al. Asymmetric loss for multi-label classification[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision. Piscataway: IEEE Press, 2021: 82-91.

[25] DONG J X. Focal loss improves the model performance on multi-label image classifications with imbalanced data[C]//Proceedings of the 2nd International Conference on Industrial Control Network and System Engineering Research. New York: ACM, 2020: 18-21.

[26] CAO X C, ZHANG H, GUO X J, et al. SLED: semantic label embedding dictionary representation for multilabel image annotation[J]. IEEE Transactions on Image Processing, 2015, 24(9): 2746-2759. doi: 10.1109/TIP.2015.2428055

[27] ZHAO D D, YI M H, GUO J X, et al. A novel image classification method based on multi-layer dictionary learning[C]//Proceedings of the CAA Symposium on Fault Detection, Supervision, and Safety for Technical Processes. Piscataway: IEEE Press, 2021: 1-6.

[28] OU L, HE Y, LIAO S L, et al. FaceIDP: face identification differential privacy via dictionary learning neural networks[J]. IEEE Access, 2023, 11: 31829-31841. doi: 10.1109/ACCESS.2023.3260260

[29] ZHOU F T, HUANG S, XING Y. Deep semantic dictionary learning for multi-label image classification[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2021: 3572-3580.

[30] HUANG S, LIN J K, HUANGFU L W. Class-prototype discriminative network for generalized zero-shot learning[J]. IEEE Signal Processing Letters, 2020, 27: 301-305. doi: 10.1109/LSP.2020.2968213

[31] XING C, ROSTAMZADEH N, ORESHKIN B N, et al. Adaptive cross-modal few-shot learning[C]//Proceedings of the Annual Conference on Neural Information Processing Systems. La Jolla: NIPS, 2019: 4848-4858.

[32] HE X T, PENG Y X. Fine-grained image classification via combining vision and language[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2017: 7332-7340.

[33] PENNINGTON J, SOCHER R, MANNING C. GloVe: global vectors for word representation[C]//Proceedings of the Conference on Empirical Methods in Natural Language Processing. Stroudsburg: Association for Computational Linguistics, 2014: 1532-1543.

[34] WANG Z, FANG Z L, LI D D, et al. Semantic supplementary network with prior information for multi-label image classification[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2022, 32(4): 1848-1859. doi: 10.1109/TCSVT.2021.3083978

[35] YANG H, ZHOU J T, ZHANG Y, et al. Exploit bounding box annotations for multi-label object recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Piscataway: IEEE Press, 2016: 280-288.

[36] WANG M, LUO C Z, HONG R C, et al. Beyond object proposals: random crop pooling for multi-label image recognition[J]. IEEE Transactions on Image Processing, 2016, 25(12): 5678-5688. doi: 10.1109/TIP.2016.2612829

[37] HE S, XU C, GUO T, et al. Reinforced multi-label image classification by exploring curriculum[C]//Proceedings of the AAAI Conference on Artificial Intelligence. Palo Alto: AAAI Press, 2018: 3183-3190.
