
Abstract:

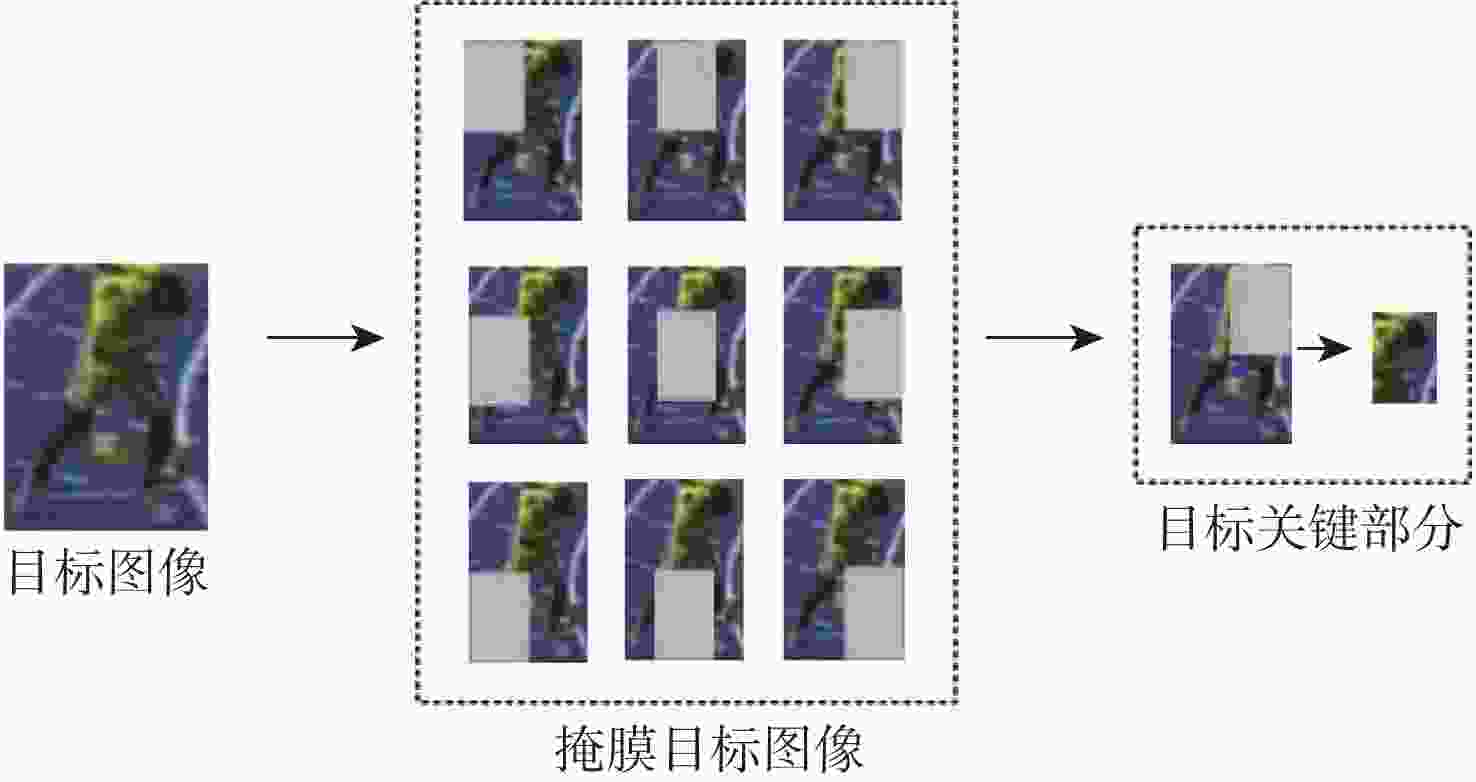

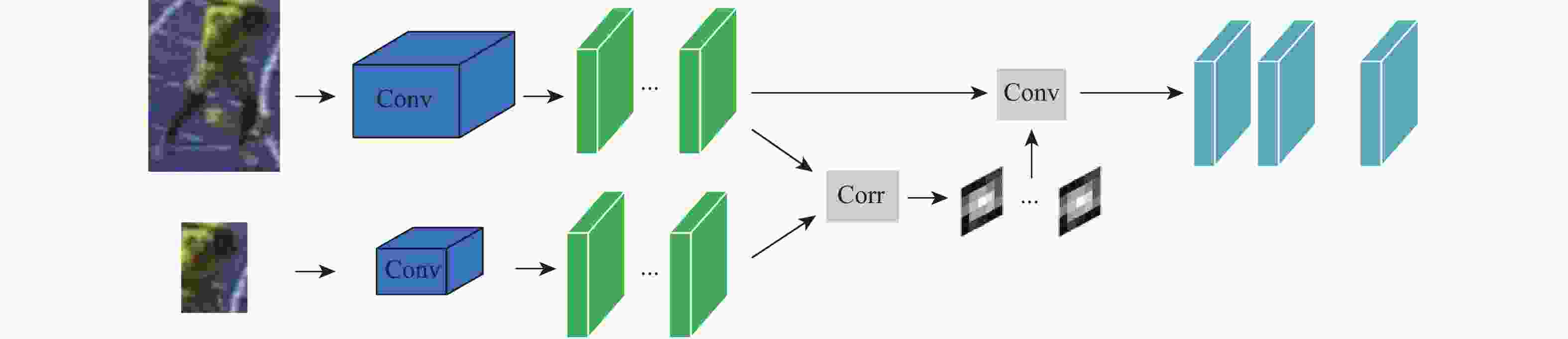

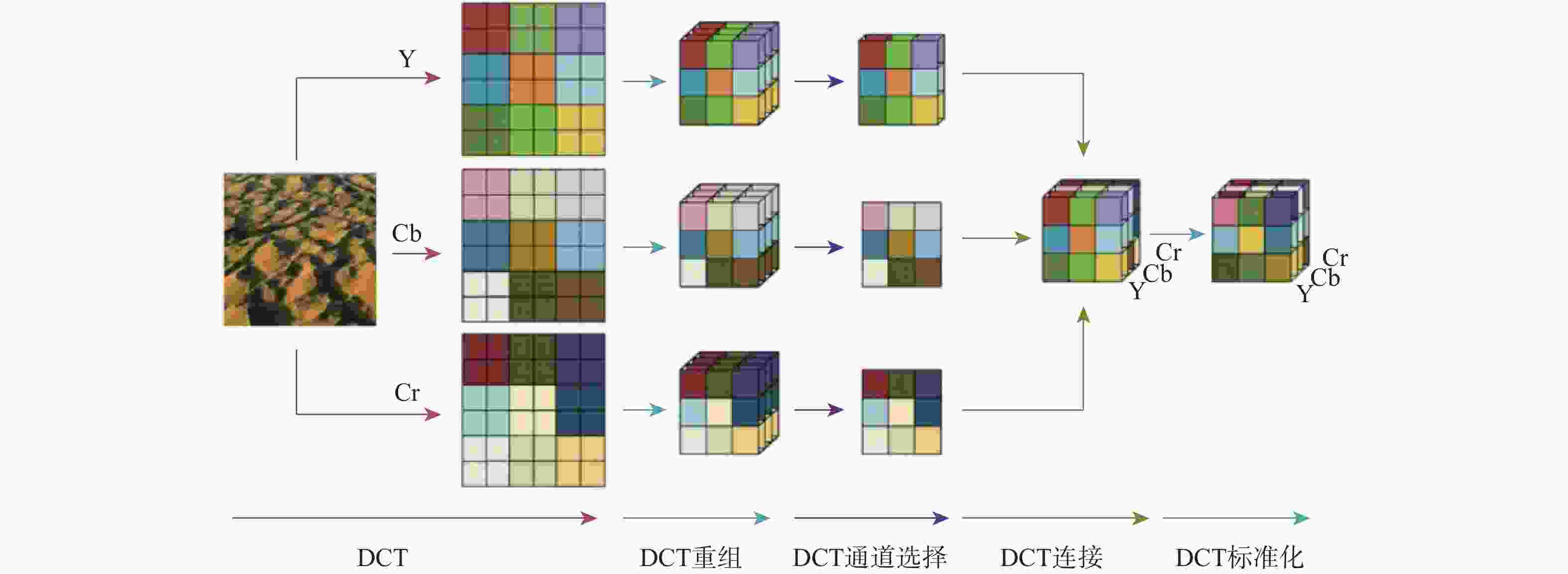

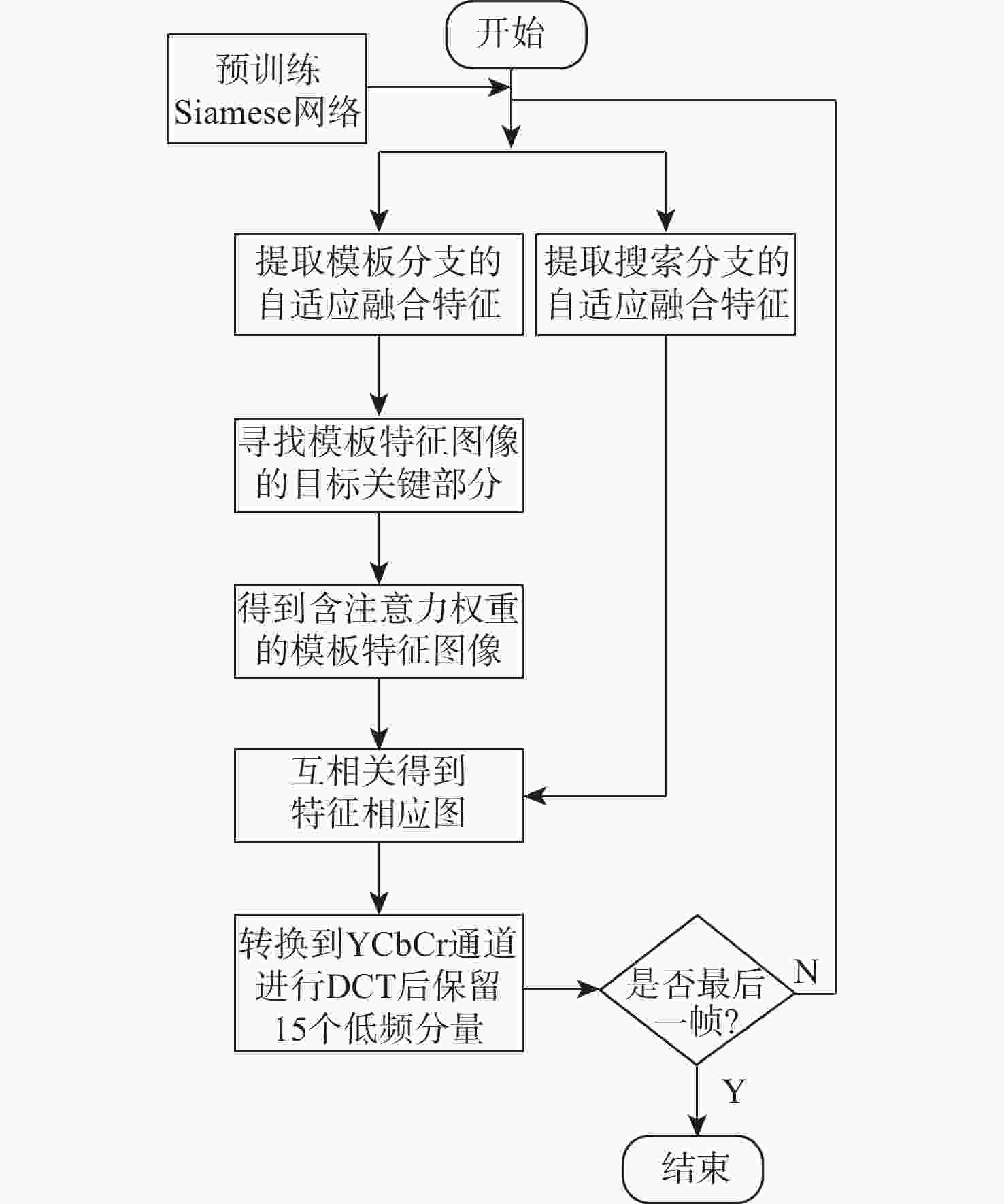

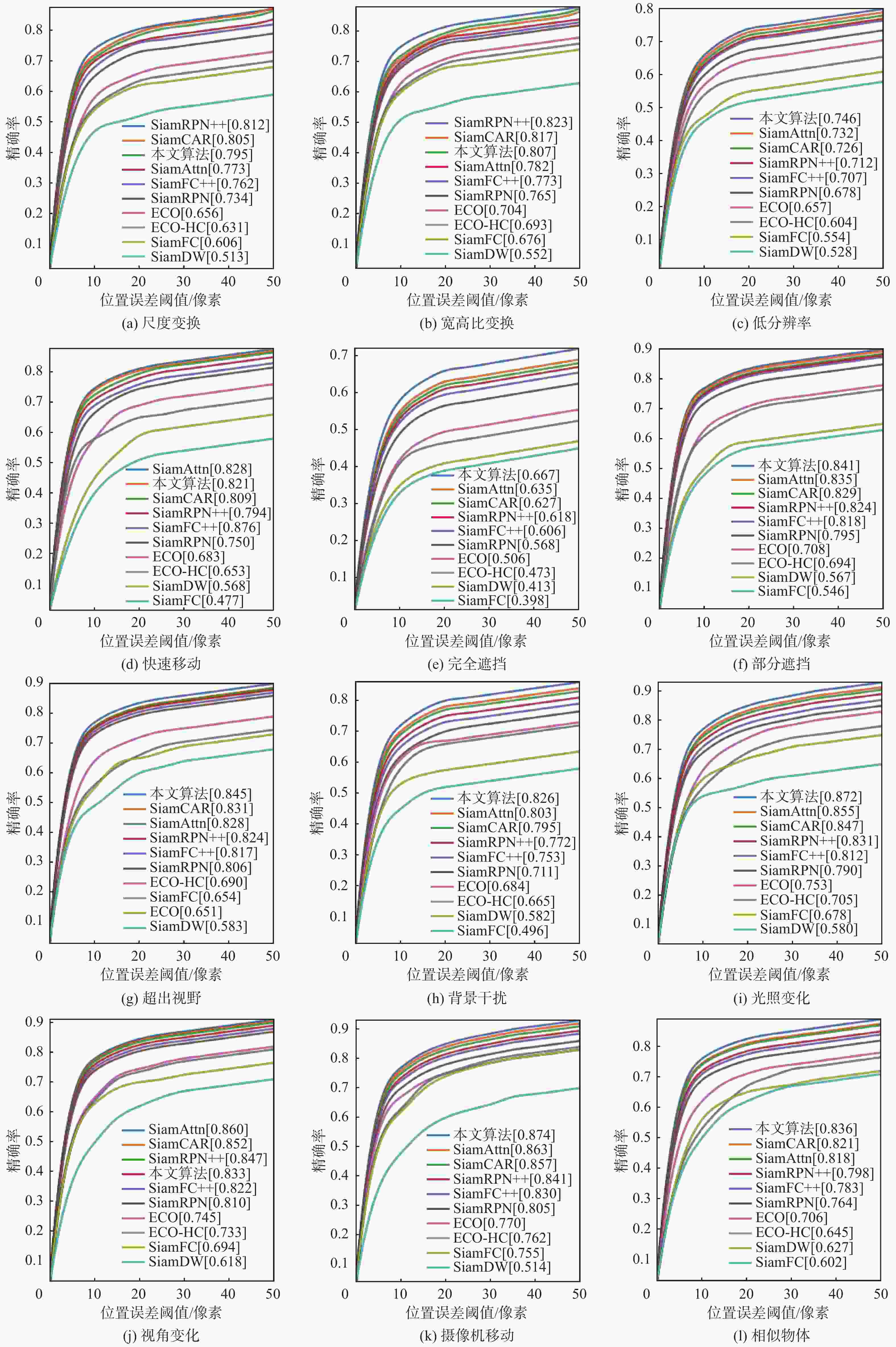

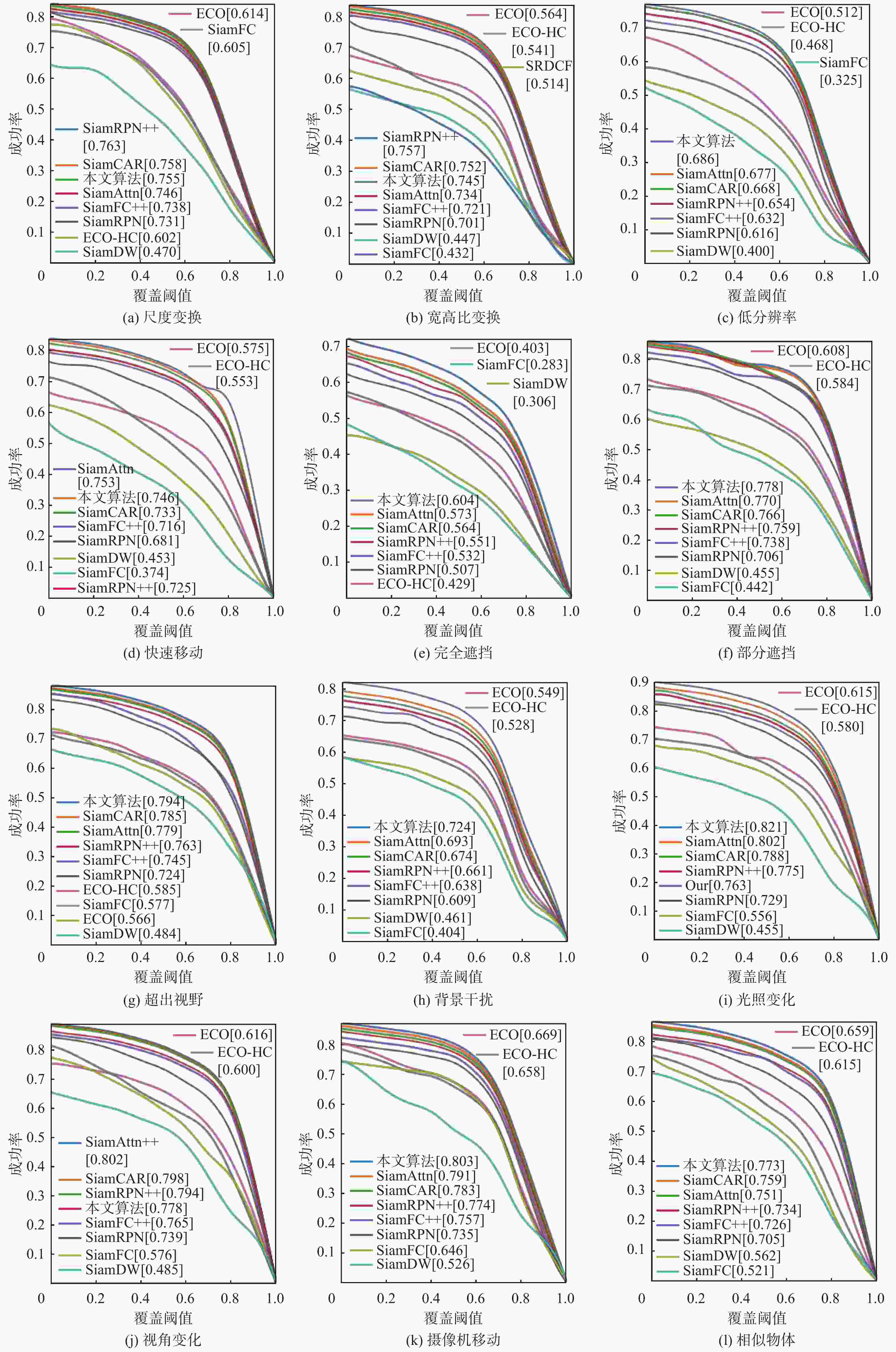

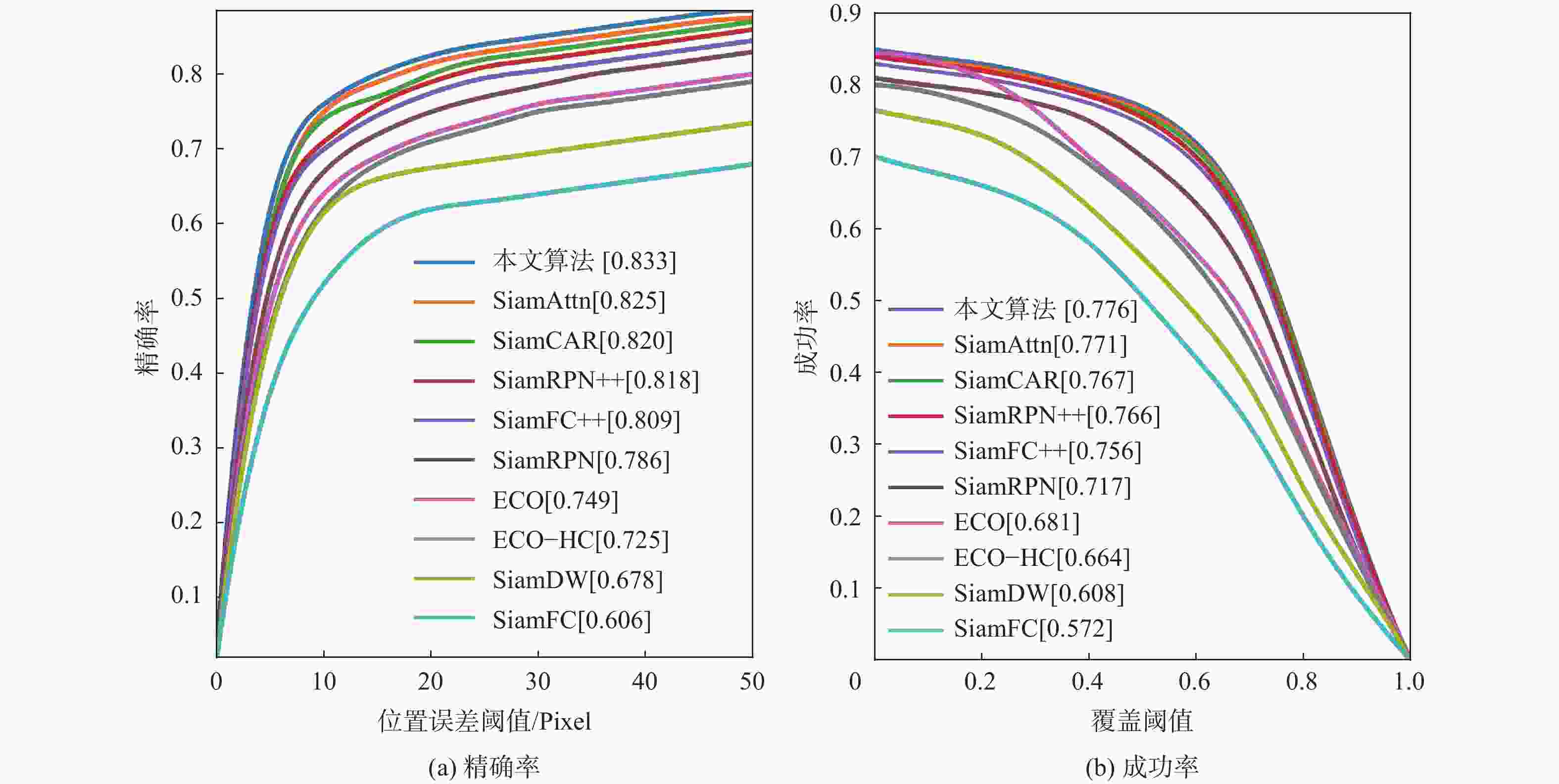

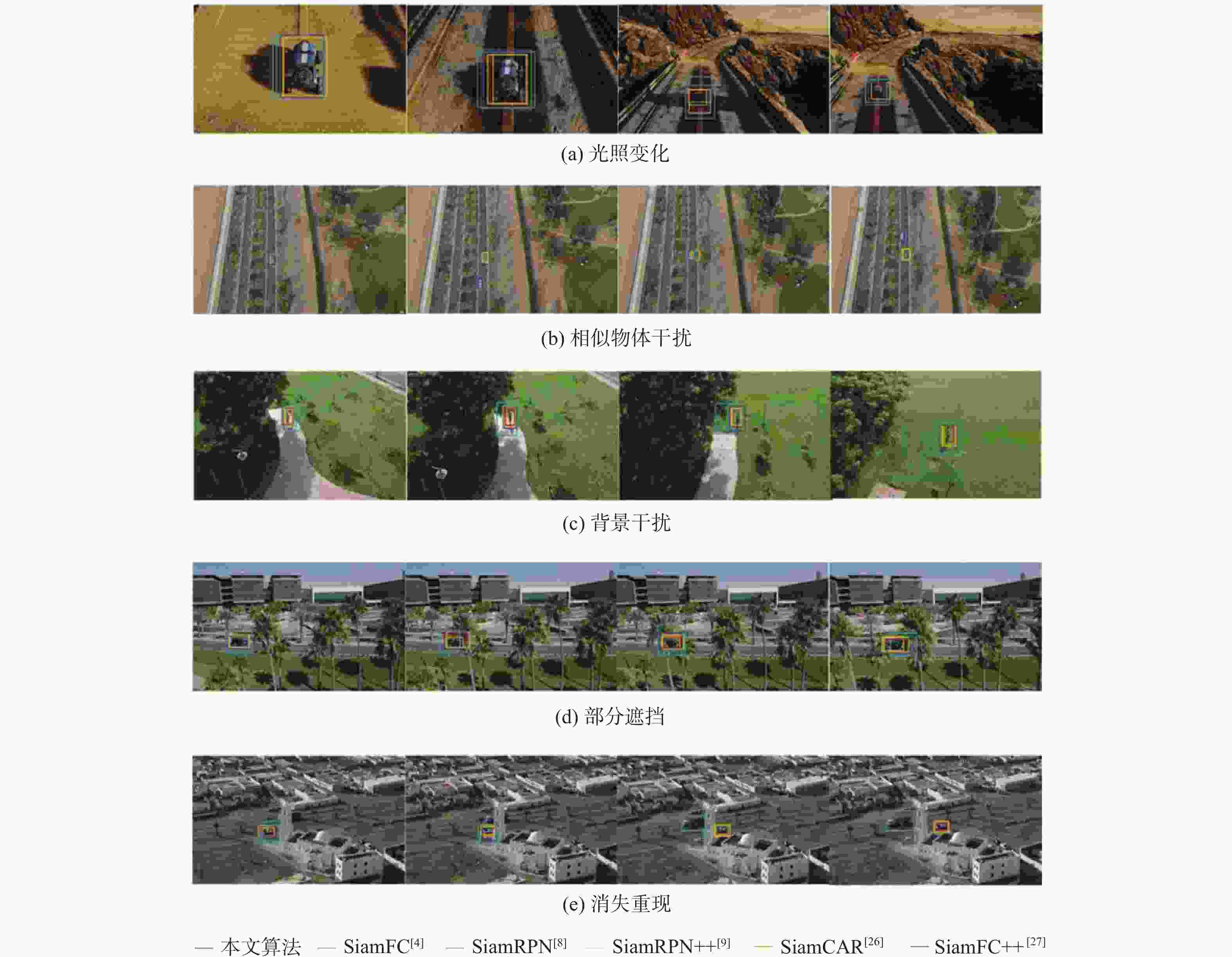

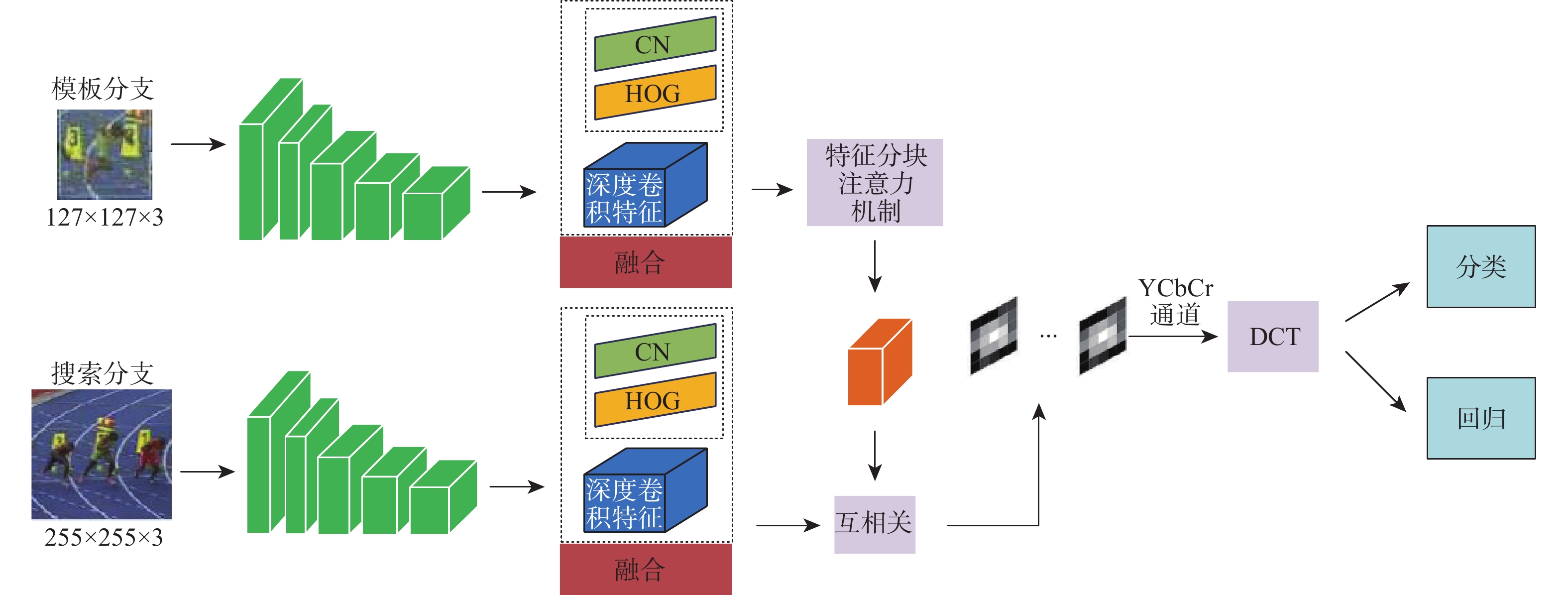

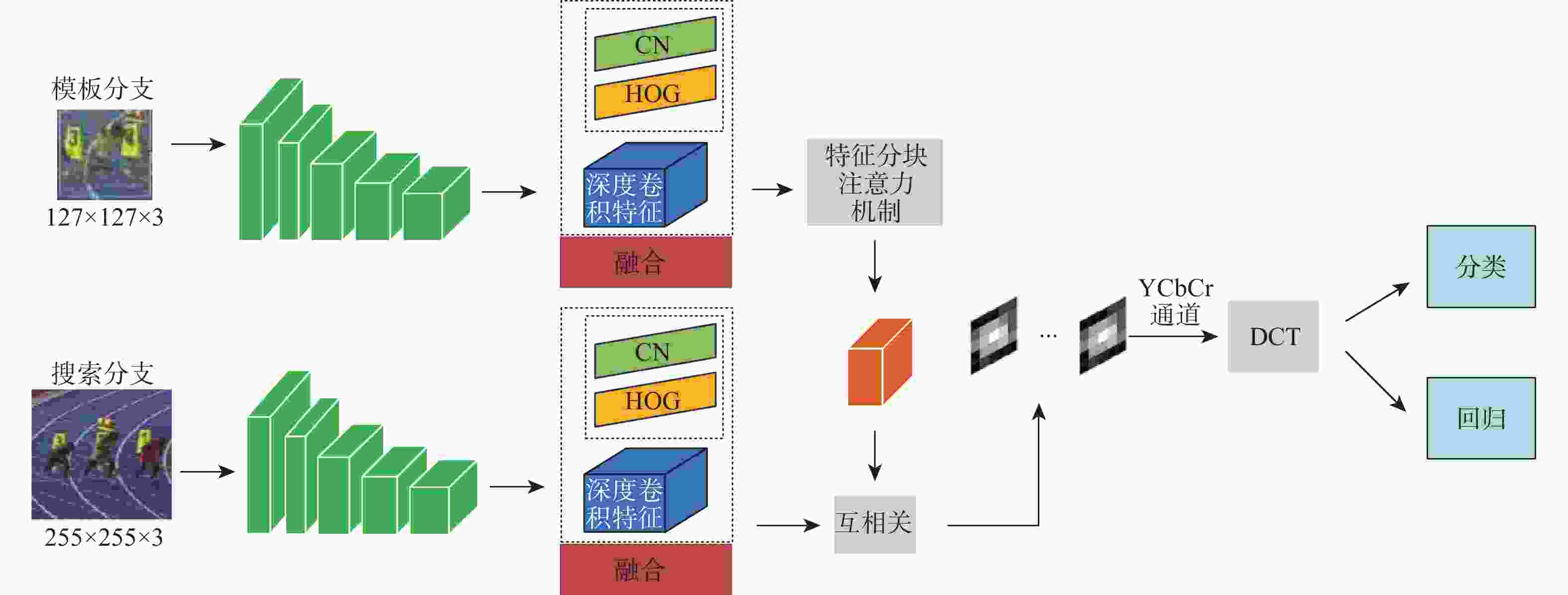

Unmanned aerial vehicles (UAVs) are widely used in many fields, and target tracking is one of the key technologies in UAV applications. This paper proposes a UAV tracking algorithm based on feature fusion and segmented attention to address the appearance changes and external interference that a UAV encounters when tracking a target. First, a Siamese network extracts histogram of oriented gradients (HOG) features, color names (CN) features, and deep convolutional features from the template image and the search image, and the weights of the three features are computed adaptively to strengthen the representational power of the fused features. Second, an improved feature segmentation attention mechanism increases the attention paid to the effective regions of the template-image features, enabling more effective target similarity matching. Third, to reduce computational cost, the output feature vector is transformed into YCbCr space, a discrete cosine transform (DCT) is applied, and only the low-frequency components are retained to obtain the feature response map; classification and regression on this map then give the final target location. Experiments show that the proposed algorithm reduces the influence of appearance changes and external interference on tracking performance and improves tracking accuracy.
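The abstract says the weights of the HOG, CN, and deep features are computed adaptively but does not give the rule. The sketch below is one common way to do this in correlation-filter style trackers, not necessarily the authors' method: it assumes each feature type yields its own 2-D response map and weights each map by its peak-to-sidelobe ratio (PSR), normalized so the weights sum to 1. The names `psr`, `fuse_responses`, and the `exclude` window size are hypothetical.

```python
from __future__ import annotations

import numpy as np


def psr(response: np.ndarray, exclude: int = 5) -> float:
    """Peak-to-sidelobe ratio of a 2-D response map.

    The sidelobe is every value outside a (2*exclude+1) x (2*exclude+1) window
    centred on the peak; a sharp, isolated peak gives a high PSR.
    """
    r, c = np.unravel_index(np.argmax(response), response.shape)
    peak = response[r, c]
    mask = np.ones(response.shape, dtype=bool)
    mask[max(r - exclude, 0):r + exclude + 1, max(c - exclude, 0):c + exclude + 1] = False
    sidelobe = response[mask]
    if sidelobe.size == 0:
        raise ValueError("response map is too small for the exclusion window")
    return float((peak - sidelobe.mean()) / (sidelobe.std() + 1e-12))


def fuse_responses(responses: dict[str, np.ndarray]) -> tuple[np.ndarray, dict[str, float]]:
    """Hypothetical adaptive fusion: weight each feature's map by its normalized PSR."""
    scores = {name: max(psr(resp), 0.0) for name, resp in responses.items()}
    total = sum(scores.values())
    if total == 0.0:  # no reliable map: fall back to equal weights
        weights = {name: 1.0 / len(scores) for name in scores}
    else:
        weights = {name: s / total for name, s in scores.items()}
    fused = sum(weights[name] * responses[name] for name in responses)
    return fused, weights


if __name__ == "__main__":
    size = 31
    yy, xx = np.mgrid[:size, :size]
    peaked = np.exp(-((yy - 15) ** 2 + (xx - 15) ** 2) / 8.0)  # sharp, reliable map
    noisy = np.random.default_rng(0).random((size, size))      # flat, unreliable map
    fused, w = fuse_responses({"hog": peaked, "cn": noisy, "deep": peaked + 0.1 * noisy})
    print(w)
```

With this rule a feature whose response is flat or cluttered (for example, color under an illumination change) automatically contributes less to the fused map.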
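The abstract does not describe how the improved segmentation (block) attention works internally. As a rough illustration of the idea of raising attention on informative regions of the template features, the parameter-free sketch below splits a (C, H, W) feature map into a grid of spatial blocks, scores each block by its mean squared activation, and rescales blocks with softmax weights. The real mechanism is presumably learned; `block_attention` and the `grid` parameter are hypothetical.

```python
import numpy as np


def block_attention(feat: np.ndarray, grid: int = 3):
    """Reweight a (C, H, W) feature map block by block (parameter-free sketch).

    The spatial plane is cut into grid x grid blocks. Each block is scored by its
    mean squared activation, a softmax over all blocks turns scores into weights,
    and each block is scaled by grid*grid*weight: a block with the average weight
    is unchanged, stronger blocks are amplified, weaker blocks are attenuated.
    """
    _, h, w = feat.shape
    if h < grid or w < grid:
        raise ValueError("feature map is smaller than the block grid")
    rows = np.array_split(np.arange(h), grid)
    cols = np.array_split(np.arange(w), grid)
    scores = np.array([[np.mean(feat[:, r[0]:r[-1] + 1, q[0]:q[-1] + 1] ** 2)
                        for q in cols] for r in rows])
    exp = np.exp(scores - scores.max())  # numerically stable softmax
    weights = exp / exp.sum()
    out = feat.astype(np.float64, copy=True)
    for i, r in enumerate(rows):
        for j, q in enumerate(cols):
            out[:, r[0]:r[-1] + 1, q[0]:q[-1] + 1] *= grid * grid * weights[i, j]
    return out, weights


if __name__ == "__main__":
    feat = np.full((4, 9, 9), 0.1)
    feat[:, 3:6, 3:6] = 1.0  # strong activations in the centre block
    out, w = block_attention(feat, grid=3)
    print(np.round(w, 3))
```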
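In Siamese trackers generally, "target similarity matching" means sliding the template features over the search-region features and taking an inner product at each offset. The naive NumPy sketch below shows that standard cross-correlation; it is not the authors' exact matching head, and `xcorr` is a hypothetical name.

```python
import numpy as np


def xcorr(template: np.ndarray, search: np.ndarray) -> np.ndarray:
    """'Valid' cross-correlation of a (C, h, w) template over a (C, H, W) search map.

    Returns an (H - h + 1, W - w + 1) response map whose entry (i, j) is the inner
    product of the template with the equally sized search window at offset (i, j).
    """
    c, h, w = template.shape
    c2, H, W = search.shape
    if c != c2:
        raise ValueError("template and search must have the same number of channels")
    out = np.empty((H - h + 1, W - w + 1))
    for i in range(H - h + 1):
        for j in range(W - w + 1):
            out[i, j] = np.sum(template * search[:, i:i + h, j:j + w])
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    t = rng.standard_normal((2, 4, 4))
    s = np.zeros((2, 20, 20))
    s[:, 5:9, 7:11] = t  # hide the template in the search region
    r = xcorr(t, s)
    print(np.unravel_index(np.argmax(r), r.shape))
```

The peak of the response map marks the offset where the search window best matches the template; production trackers compute the same quantity with a convolution layer on the GPU.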
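The cost-reduction step (YCbCr transform, DCT, keep low frequencies) can be sketched as follows. The abstract does not say how the output feature vector is mapped to three color-like channels, so this sketch assumes an (H, W, 3) array treated like RGB. It uses the full-range ITU-R BT.601 (JPEG/JFIF) conversion matrix without the +128 chroma offset, since feature values are not 8-bit pixels, then applies an orthonormal 2-D DCT-II to each channel and keeps only the top-left `keep` x `keep` coefficients. `to_ycbcr`, `dct_matrix`, `low_freq_dct`, and `keep` are hypothetical names.

```python
import numpy as np

# Full-range BT.601 (JPEG/JFIF) RGB -> YCbCr matrix, chroma offset omitted.
RGB_TO_YCBCR = np.array([
    [0.299, 0.587, 0.114],
    [-0.168736, -0.331264, 0.5],
    [0.5, -0.418688, -0.081312],
])


def to_ycbcr(x: np.ndarray) -> np.ndarray:
    """Map an (H, W, 3) array to (H, W, 3) Y, Cb, Cr channels."""
    return x @ RGB_TO_YCBCR.T


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix; row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)
    return d


def low_freq_dct(x: np.ndarray, keep: int) -> np.ndarray:
    """2-D DCT of each YCbCr channel, keeping the top-left keep x keep coefficients."""
    ycc = to_ycbcr(x)
    h, w, _ = ycc.shape
    dh, dw = dct_matrix(h), dct_matrix(w)
    coeffs = np.stack([dh @ ycc[:, :, c] @ dw.T for c in range(3)], axis=-1)
    return coeffs[:keep, :keep, :]


if __name__ == "__main__":
    yy, xx = np.mgrid[:16, :16] / 16.0
    smooth = np.stack([yy, xx, 0.5 * (yy + xx)], axis=-1)  # slowly varying input
    print(low_freq_dct(smooth, keep=4).shape)  # 16 coefficients per channel instead of 256
```

Because smooth signals concentrate their energy in low-frequency DCT coefficients, keeping a small `keep` x `keep` corner shrinks each channel from H x W values to `keep`² while retaining most of the information, which is the intuition behind the stated cost reduction.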


Table 1. Ablation experiments

| Algorithm | Precision | Success rate |
| --- | --- | --- |
| Siamese | 0.704 | 0.672 |
| Siamese+FA | 0.792 | 0.734 |
| Siamese+PA | 0.769 | 0.715 |
| Proposed algorithm | 0.832 | 0.771 |
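This section does not define the two metrics in Table 1. Assuming the usual OTB/UAV123-style conventions, precision is the fraction of frames whose predicted box center lies within 20 pixels of the ground-truth center, and success rate is the area under the success plot, i.e. the mean fraction of frames whose IoU exceeds each of 21 thresholds from 0 to 1. The sketch below computes both for boxes given as (x, y, w, h); the threshold values and function names are assumptions, not taken from the paper.

```python
import numpy as np


def center_error(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Euclidean distance between box centers; boxes are (N, 4) arrays of (x, y, w, h)."""
    pc = pred[:, :2] + pred[:, 2:] / 2.0
    gc = gt[:, :2] + gt[:, 2:] / 2.0
    return np.linalg.norm(pc - gc, axis=1)


def iou(pred: np.ndarray, gt: np.ndarray) -> np.ndarray:
    """Per-frame intersection over union of (x, y, w, h) boxes."""
    x1 = np.maximum(pred[:, 0], gt[:, 0])
    y1 = np.maximum(pred[:, 1], gt[:, 1])
    x2 = np.minimum(pred[:, 0] + pred[:, 2], gt[:, 0] + gt[:, 2])
    y2 = np.minimum(pred[:, 1] + pred[:, 3], gt[:, 1] + gt[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = pred[:, 2] * pred[:, 3] + gt[:, 2] * gt[:, 3] - inter
    return inter / np.maximum(union, 1e-12)


def precision(pred: np.ndarray, gt: np.ndarray, threshold: float = 20.0) -> float:
    """Fraction of frames whose center error is at most `threshold` pixels."""
    return float(np.mean(center_error(pred, gt) <= threshold))


def success_auc(pred: np.ndarray, gt: np.ndarray, steps: int = 21) -> float:
    """Area under the success plot: mean success rate over IoU thresholds in [0, 1]."""
    overlaps = iou(pred, gt)
    thresholds = np.linspace(0.0, 1.0, steps)
    return float(np.mean([np.mean(overlaps > t) for t in thresholds]))


if __name__ == "__main__":
    gt = np.array([[10.0, 10.0, 20.0, 20.0], [50.0, 40.0, 30.0, 30.0]])
    pred = gt + np.array([[2.0, 1.0, 0.0, 0.0], [30.0, 0.0, 0.0, 0.0]])
    print(precision(pred, gt), success_auc(pred, gt))
```

Note that even a perfect tracker scores 20/21 rather than 1.0 on this success measure, because no IoU can exceed the final threshold of 1.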

